Observe Databricks

This document walks you through monitoring, operationalizing, and optimizing your Databricks setup using the metrics that ADOC provides.

Introduction to Databricks

Databricks is a comprehensive data analytics and machine learning platform for data observability users and experts. It streamlines data ingestion, preprocessing, advanced analytics, and machine learning in an integrated environment.

Databricks offers data team collaboration, Apache Spark-based scalable data processing, and seamless connection with common data sources and storage options. Databricks helps data observability users track data quality, lineage, governance, and compliance.

Databricks' machine learning model construction and deployment capabilities allow data observability users to use their data for predictive analytics and AI applications. Databricks helps organizations expedite data workflows, improve data reliability, and obtain deeper insights for data-driven decision-making and innovation. Its flexibility allows data professionals to get the most value from their data.

Databricks Integrations with ADOC

Integrating Databricks with Acceldata's ADOC (Acceldata Observability Cloud) provides various benefits for enterprises looking to improve data management and observability:

- Comprehensive Data Observability: Combining Databricks' analytics and processing capabilities with ADOC's data quality monitoring, lineage tracing, and anomaly detection enables comprehensive data observability. This helps enterprises maintain data reliability and integrity throughout the data pipeline.

- End-to-End Visibility: Organizations obtain end-to-end visibility into their data operations by integrating Databricks with ADOC. They may trace data from source to destination, discover bottlenecks or flaws in data conversions, and resolve data quality concerns proactively. This comprehensive perspective improves data governance and compliance initiatives.

- Improved Data Quality and Reliability: When combined with Databricks, ADOC's data quality monitoring capabilities enable real-time detection of data abnormalities and quality issues. This ensures that the data utilized for analytics and machine learning within Databricks is correct and reliable, resulting in more reliable insights and decision-making.

- Simplified Troubleshooting: The integrated solution simplifies troubleshooting by offering a unified platform for identifying and resolving data issues. Data teams can quickly identify the cause of data issues, whether they arise in Databricks workflows or elsewhere in the data pipeline, and take appropriate corrective steps.

- Optimized Performance: Databricks performance can be optimized through integration with ADOC. Data experts can improve Databricks job efficiency, minimize processing costs, and improve overall data pipeline performance by detecting and addressing data quality or processing bottlenecks.

In conclusion, combining Databricks and Acceldata's ADOC provides comprehensive data observability, end-to-end visibility, improved data quality, simpler troubleshooting, and optimized performance. This combination enables enterprises to maximize the value of their data analytics and machine learning workflows while maintaining data integrity and reliability.

Databricks Firewall Configuration Requirements

Overview

When integrating Databricks Compute with the ADOC platform, certain outbound firewall settings must be configured to allow necessary communication between your Databricks clusters and the Acceldata Control Plane. This ensures that essential agents can be downloaded during cluster startup or restart and that data can be transmitted securely.

Outbound Firewall Settings

Please ensure the following outbound access is permitted from your environment (e.g., Databricks subnet) on port 443:

1. Agent Binaries Download

- URL: acceldata-cloud-agent-binaries.s3.amazonaws.com

- Purpose: Downloads agent binaries required during cluster startup or restart.

- Action Required: Allow outbound HTTPS access to this URL.

2. Agent Files Download

- URL: downloads.acceldata.one or IP Address: 138.201.81.125

- Purpose: Downloads essential files such as spark-listener-databricks-3.3.0_2.12-all-0.1.jar and databricks_gru_binaries.zip, which are crucial for data collection.

- Action Required: Allow outbound HTTPS access to this URL or IP address.

Why Outbound Access is Necessary

- Agent Download: The Databricks clusters initiate connections to download necessary agent files during startup or restart. Without outbound access to the specified URLs or IP addresses, the clusters cannot retrieve these files, leading to potential disruptions in data collection.

- Data Transmission: The agents send collected data back to the Acceldata Control Plane for processing and analysis. Outbound access ensures this data is transmitted securely and without interruption.

Steps to Configure Firewall Settings

1. Identify the Environment: Determine the network environment where your Databricks clusters are running (e.g., Databricks subnet).

2. Update Firewall Rules:

For URL Access:

- Add outbound rules allowing HTTPS (port 443) traffic to acceldata-cloud-agent-binaries.s3.amazonaws.com and downloads.acceldata.one.

For IP Access (if URL-based rules are not possible):

- Add an outbound rule allowing HTTPS (port 443) traffic to IP address 138.201.81.125.

3. Verify Connectivity: Test the outbound connections to ensure that the Databricks clusters can reach the specified URLs/IP addresses.

4. Restart Databricks Clusters: After updating the firewall settings, restart your Databricks clusters to allow them to download the necessary agent files during startup.
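The connectivity check in the steps above could be sketched in Python as follows. This is a minimal illustration rather than an Acceldata-provided tool: the function names `can_connect` and `check_endpoints` are hypothetical, and the check only confirms that a TCP connection on port 443 can be opened. It does not validate TLS certificates or download any agent file.

```python
import socket

# Destinations named in this section; each should be reachable on port 443.
ENDPOINTS = [
    "acceldata-cloud-agent-binaries.s3.amazonaws.com",
    "downloads.acceldata.one",
]


def can_connect(host, port=443, timeout=5.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def check_endpoints(hosts, port=443, timeout=5.0):
    """Map each host to True (reachable) or False (blocked or unreachable)."""
    return {host: can_connect(host, port, timeout) for host in hosts}
```

Running `print(check_endpoints(ENDPOINTS))` from a notebook cell attached to the cluster would show which destinations are reachable from the Databricks subnet. A `False` entry suggests that the corresponding outbound rule is missing or not yet applied.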

It is important to regularly review and update your firewall settings to ensure continued connectivity for your Databricks clusters.

- Existing Control Plane IPs: These firewall settings are in addition to the Control Plane IPs already specified in the documentation. Ensure all required destinations are accessible.

- Security Compliance: Allowing outbound traffic only to specified destinations maintains your security posture while enabling necessary functionality.

- Regular Updates: If your security policies include regular reviews or updates to firewall rules, ensure these settings remain in place to prevent disruptions.

Download Databricks Visualization Data

ADOC allows you to download the data behind any of the visualizations as a CSV file. The file name consists of DB (for Databricks), followed by the visualization name and the date and time of download. If you applied

You can use the download CSV button to download visualization data.

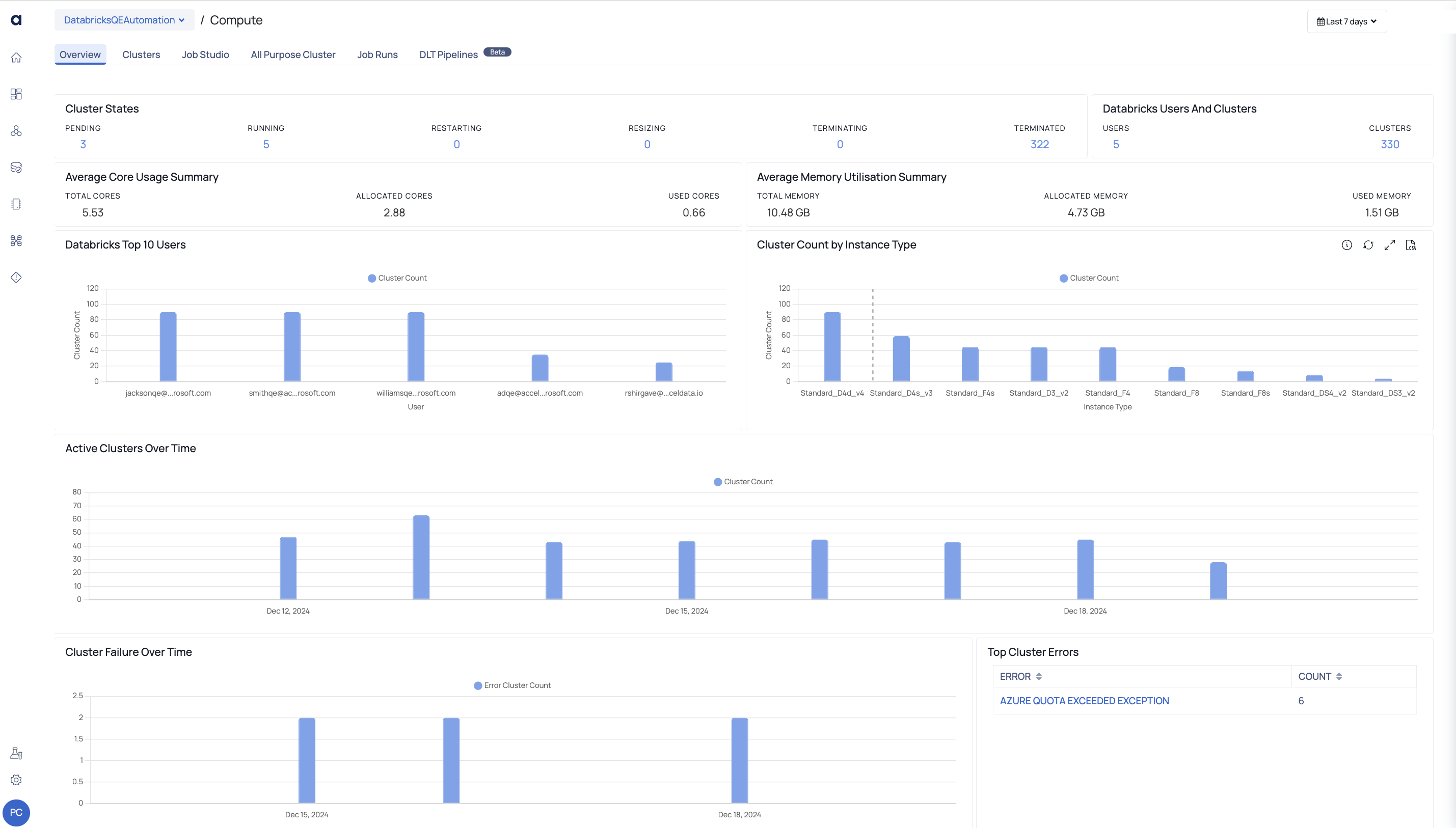
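Once downloaded, the CSV files can be processed with any standard tooling. The following Python sketch is one possible way to locate and load them. It assumes only what is stated above, namely that the file names start with DB. The exact separator and timestamp format in the file name, and the column names inside each file, are not specified here, so the code does not rely on them. The helper names `find_db_exports` and `load_rows` are hypothetical.

```python
import csv
from pathlib import Path


def find_db_exports(directory):
    """Return CSV files in `directory` whose names start with 'DB', sorted by name."""
    return sorted(
        path
        for path in Path(directory).iterdir()
        if path.is_file()
        and path.name.startswith("DB")
        and path.suffix.lower() == ".csv"
    )


def load_rows(path):
    """Read one export into a list of dicts keyed by the CSV header row."""
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))
```

For example, `load_rows(find_db_exports("/path/to/downloads")[0])` would return the rows of the first matching export, where the directory path is a placeholder for your own download location.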

Databricks Compute and Cost Management

The Databricks Compute and Costs sections have been updated with new features and enhancements aimed at delivering deeper insights into, and better control over, your computing resources.

Enhanced API Integrations:

The Compute section now supports more detailed cost data retrieval via the cost management API. This allows users to obtain precise cost data for Databricks resources, giving a more accurate picture of the costs incurred.

Users can now differentiate between Databricks and Cloud Vendor charges (including bandwidth, network, virtual machines, and storage). These costs are shown separately to give a clearer picture of where money is being spent.

New Cost Visualization and Analysis Tools:

The Databricks Compute page now has updated widgets and graphs that break down costs by cluster type, instance type, and workspace. These provide a cost summary that aggregates cost over multiple time periods, as well as a cost breakdown by cluster type.

A Cloud Vendor expense section has been added, which displays the cost of resources provided by the cloud vendor.

Databricks Query Studio Enhancements:

The Databricks Query Studio now provides expanded filtering capabilities, allowing users to refine their searches by parameters such as cluster type, job instance, and more. This helps in finding cost-saving opportunities and optimizing resource allocation.

- Users are advised to set their system time to UTC to ensure an exact cost match between ADOC and the Azure Portal.

- Due to differences in update frequency between Databricks and the Azure Portal, there may be a minor mismatch (less than 0.5%) in Cloud Vendor charges. This is normally resolved within 24-48 hours of the data being updated.
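When reconciling figures, the 0.5% threshold mentioned above can be checked with a few lines of arithmetic. The Python sketch below is illustrative only. The function names are hypothetical, and it assumes the mismatch is measured relative to the Azure Portal figure, which this document does not specify.

```python
def relative_mismatch(adoc_cost, portal_cost):
    """Absolute difference between the two figures as a fraction of the portal cost."""
    if portal_cost == 0:
        # Avoid dividing by zero: equal zeros match, anything else is unbounded.
        return 0.0 if adoc_cost == 0 else float("inf")
    return abs(adoc_cost - portal_cost) / abs(portal_cost)


def within_tolerance(adoc_cost, portal_cost, tolerance=0.005):
    """Return True if the mismatch is below the tolerance (0.005 means 0.5%)."""
    return relative_mismatch(adoc_cost, portal_cost) < tolerance
```

For instance, an ADOC figure of 1004.0 against a portal figure of 1000.0 is a 0.4% mismatch and falls within the expected range, while 1010.0 against 1000.0 (1%) does not and may be worth rechecking after the 24-48 hour window.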