Open Source Data Platform (ODP) is an open-source Apache Hadoop distribution, built by Acceldata and provided as a single installation package. ODP is a software framework that combines libraries and the latest upstream code from Apache projects to help data-driven enterprises with data ingestion, storage, compute, and security. ODP uses Ambari for deployment and cluster management, and additional components can be added to the stack through custom Ambari management packs (mpacks).

ODP also supports Acceldata Pulse, giving enterprises a complete solution for managing and observing their data and data-driven operations.

Features of ODP

- ODP is completely open source: built and tested by Acceldata using the latest upstream code.

- An easy-to-deploy, version-controlled repository of community-driven open-source stack components for enterprises.

- A simple, cost-effective, and scalable data platform.

- ODP uses the same stack that various leading organizations deploy.

- Enables enterprises to continue their big data journey on Hadoop.

- ODP supports public, private, and hybrid environments to meet the changing requirements of enterprises.

- Provides the agility to run multiple instances of the same or different versions of any open-source component through mpacks.

- Enterprises can pair their ODP deployment with a customer support team that has deep experience supporting very large clusters.

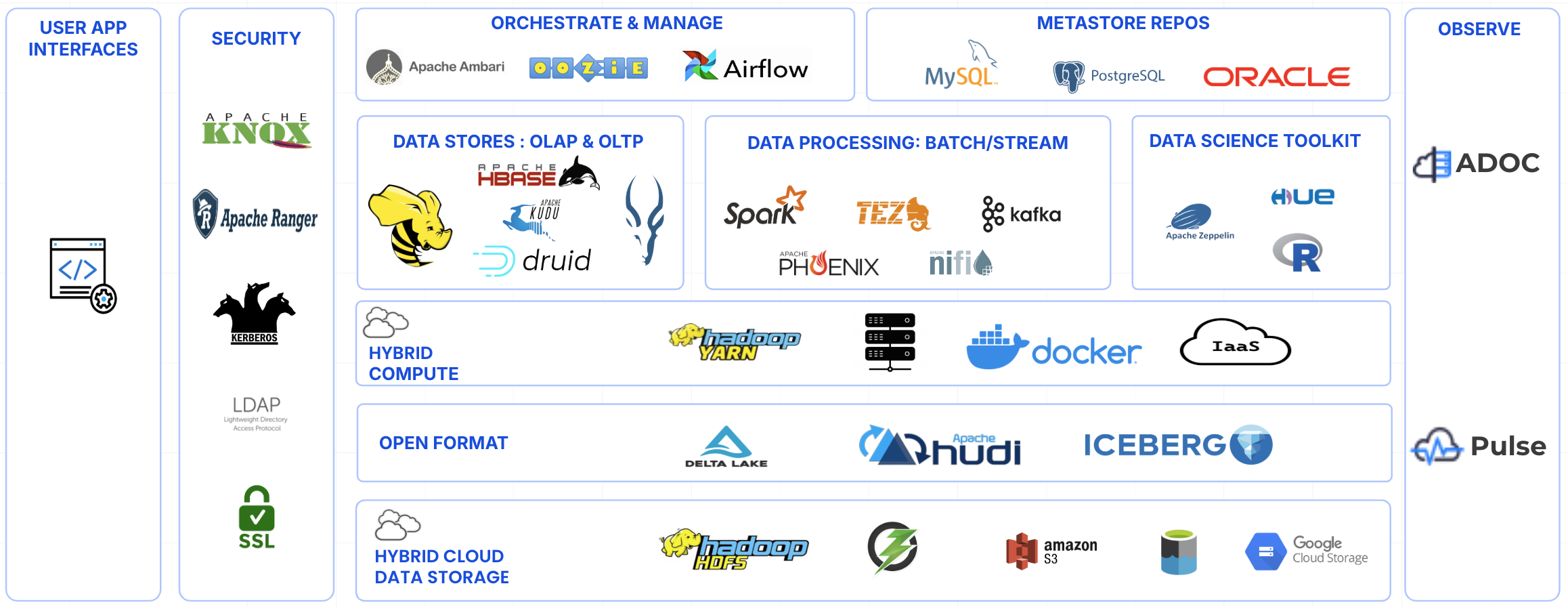

ODP Architecture

The following image displays the architecture of ODP.

ODP Architecture Diagram

Open Source Data Platform Specifications

Component Versions

This section lists the official Apache component versions in the Open Source Data Platform (ODP). To make sure you are working with the most recent stable software available, familiarize yourself with the latest Apache component versions in ODP. You should also be aware of the available Technical Preview components and use them only in a testing environment.

Acceldata provides patches only when they are necessary to keep components interoperable. Unless Acceldata Support explicitly directs you to install a patch, keep each ODP component at the package version listed below to ensure a certified and supported copy of ODP.

The ODP support matrix, with the official Apache component versions, is listed below.

| Service | Version | OS: RHEL 8/RL 8 | OS: Ubuntu 18/20 |
|---|---|---|---|
| Ambari | 2.7.8.1-1 | ✅ | ✅ |
| Airflow (mpack) | 2.8.1 | ✅ | ✅ |
| Cruise Control | 2.5.84 | ✅ | ✅ |
| Druid | 27.0.0 | ✅ | ✅ |
| Hadoop | 3.2.3 | ✅ | ✅ |
| HBase | 2.4.11 | ✅ | ✅ |
| Hive | 3.1.4 (4.0.0-alpha1) | ✅ | ✅ |
| HttpFS (mpack) | 2.7.1 | ✅ | ✅ |
| Hue (mpack) | 4.10.0 | ✅ | ✅ |
| Impala (mpack) | 4.1.2 | ✅ | - |
| Infra Solr | 2.7.6.0 | ✅ | ✅ |
| Isilon (mpack) | 1.0.3 | ✅ | ✅ |
| Kafka | 2.8.2 | ✅ | ✅ |
| Knox | 1.6.1 | ✅ | ✅ |
| Kudu | 1.17.0 | ✅ | |
| NiFi (mpack) | 1.23.2 | ✅ | ✅ |
| NiFi Registry (mpack) | 1.23.2 | ✅ | ✅ |
| Oozie | 5.2.1 | ✅ | ✅ |
| Ozone (mpack) | 1.4.0 | ✅ | ✅ |
| Phoenix | 5.1.3 | ✅ | ✅ |
| Ranger | 2.3.0 | ✅ | ✅ |
| Schema Registry (mpack) | 1.0.0 | ✅ | ✅ |
| Spark 2 | 2.4.8 | ✅ | ✅ |
| Spark 3 (mpack) | 3.3.3 | ✅ | ✅ |
| Sqoop | 1.4.8 | ✅ | ✅ |
| Tez | 0.10.1 | ✅ | ✅ |
| Zeppelin | 0.10.1 | ✅ | ✅ |
| ZooKeeper | 3.5.10 | ✅ | ✅ |
| ODP-UTILS | 1.1.0.22 | ✅ | ✅ |
| Spark2-warehouse-connector | 1.0.0 | ✅ | ✅ |
| Spark3-warehouse-connector | 1.0.0 | ✅ | ✅ |
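
Because each component should stay at the certified version listed in the matrix, a simple drift check can flag deviations. The Python sketch below compares a hypothetical inventory of installed versions against a subset of the matrix. The `CERTIFIED` dictionary and `find_drift` function are illustrative names, not part of ODP or Ambari, and how you collect the installed versions is left open.

```python
from typing import Dict, Tuple

# Certified versions copied from the ODP support matrix.
# Illustrative subset only; extend it with the remaining rows as needed.
CERTIFIED: Dict[str, str] = {
    "Ambari": "2.7.8.1-1",
    "Hadoop": "3.2.3",
    "HBase": "2.4.11",
    "Kafka": "2.8.2",
    "Ranger": "2.3.0",
    "Spark 2": "2.4.8",
    "ZooKeeper": "3.5.10",
}


def find_drift(installed: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Return {service: (installed_version, certified_version)} for every
    service whose installed version differs from the certified one.

    Services missing from CERTIFIED are skipped, because this sketch has
    no certified version to compare them against.
    """
    return {
        name: (version, CERTIFIED[name])
        for name, version in installed.items()
        if name in CERTIFIED and version != CERTIFIED[name]
    }


if __name__ == "__main__":
    # Hypothetical inventory for illustration only.
    installed = {"Hadoop": "3.2.3", "Kafka": "2.8.1", "Hue": "4.10.0"}
    for name, (have, want) in find_drift(installed).items():
        print(f"{name}: installed {have}, certified {want}")
```

Run as a script, it reports Kafka (installed 2.8.1, certified 2.8.2) and skips Hue because the subset does not include it. The exact string comparison is deliberately strict, matching the guidance to stay at the listed package versions rather than any compatible release.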

- Hue is a standalone service, so it can be installed on a separate node running Python 3.9. If you need Hue, make sure at least one node has Python 3.9 and install Hue on that node so that it works correctly.

- Because Spark 2 jobs are distributed, they run into issues with Python 3.11: Spark 2 officially supports Python only up to 3.7, and ODP extends that support to Python 3.9. You must keep at least one node with Python 3.9 on which the Spark client is installed and from which jobs are submitted. To mitigate these issues, use one of the following approaches:

  - Use node labeling to run Spark 2 jobs on nodes that have Python 3.9 installed.

  - Manually specify executor nodes in the `spark-submit` command to target nodes with Python 3.9.

  - Use virtual environments containing the Python 3.9 dependencies on all nodes.

  - Alternatively, create a virtual environment with the Python 3.9 dependencies, zip it, and distribute it with the `--archives` argument to keep nodes consistent.
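
The archive approach, optionally combined with node labeling, can be expressed as a single submission command. The Python sketch below builds a `spark-submit` argument list for YARN cluster mode that ships a packed Python 3.9 environment with `--archives` and points the driver and executors at its interpreter through `PYSPARK_PYTHON`; it can also add YARN node-label expressions. It assumes the archive unpacks to an environment that still works after being relocated, with its interpreter at `bin/python` (for example, one built with conda-pack, which bundles the interpreter). The function name, the file names `job.py` and `py39_env.tar.gz`, the alias `environment`, and the label `py39` are hypothetical.

```python
import shlex
from typing import List, Optional


def build_spark_submit(
    app: str,
    archive: str,
    alias: str = "environment",
    node_label: Optional[str] = None,
) -> List[str]:
    """Build a YARN cluster-mode spark-submit command that ships a packed
    Python 3.9 environment and points PySpark at its interpreter.

    Assumes `archive` unpacks to a relocatable environment whose
    interpreter lives at bin/python (hypothetical layout).
    """
    python = f"./{alias}/bin/python"  # path inside each YARN container
    cmd = [
        "spark-submit",
        "--master", "yarn",
        # Cluster mode: the driver runs inside the YARN application master,
        # so appMasterEnv also sets the driver's interpreter.
        "--deploy-mode", "cluster",
        # YARN extracts the archive into ./<alias> in each container.
        "--archives", f"{archive}#{alias}",
        "--conf", f"spark.yarn.appMasterEnv.PYSPARK_PYTHON={python}",
        "--conf", f"spark.executorEnv.PYSPARK_PYTHON={python}",
    ]
    if node_label:
        # Optional: restrict the application master and executors to nodes
        # carrying this YARN node label (the label must already exist).
        cmd += [
            "--conf", f"spark.yarn.am.nodeLabelExpression={node_label}",
            "--conf", f"spark.yarn.executor.nodeLabelExpression={node_label}",
        ]
    cmd.append(app)
    return cmd


if __name__ == "__main__":
    # Hypothetical file names and label, for illustration only.
    print(shlex.join(build_spark_submit("job.py", "py39_env.tar.gz", node_label="py39")))
```

Returning an argument list rather than a single string lets you pass it directly to `subprocess.run` without shell quoting issues; `shlex.join` is used only to print a copy-pasteable command.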

Java Certifications

By default, OpenJDK 8 does not enable support for weaker encryption types. For more information, see the Release notes for Red Hat build of OpenJDK 8.0.392.

| Name | Status / Version |
|---|---|
| Java | JDK 8 (Complete), JDK 11 (TBD) |
| Azul JDK (Azul Zulu) | 8.70.0.23 |
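
To confirm that a node runs a certified Java version, you can parse the banner printed by `java -version`. The Python sketch below extracts the major version, handling both the legacy numbering (`1.8.0_392` means Java 8) and the newer one (`11.0.21` means Java 11), plus the Azul Zulu build number when present. Treating any JDK 8 as certified while requiring the exact listed build for Zulu JVMs is this sketch's reading of the table, not an official rule, and all function names are hypothetical.

```python
import re
import subprocess
from typing import Optional

# Values taken from the Java certification table.
CERTIFIED_JAVA_MAJOR = 8
CERTIFIED_ZULU_BUILD = "8.70.0.23"


def java_major(version_output: str) -> Optional[int]:
    """Return the Java major version from `java -version` output, or None.

    Java 8 and earlier report "1.<major>..." (e.g. "1.8.0_392" -> 8);
    Java 9 and later report "<major>..." (e.g. "11.0.21" -> 11).
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first, second = int(match.group(1)), match.group(2)
    if first == 1 and second is not None:
        return int(second)
    return first


def zulu_build(version_output: str) -> Optional[str]:
    """Return the Azul Zulu build number (e.g. "8.70.0.23"), or None if absent."""
    match = re.search(r"Zulu (\d+(?:\.\d+)+)", version_output)
    return match.group(1) if match else None


def is_certified(version_output: str) -> bool:
    """Any JDK 8 passes; a Zulu JVM must also match the listed build."""
    if java_major(version_output) != CERTIFIED_JAVA_MAJOR:
        return False
    build = zulu_build(version_output)
    return build is None or build == CERTIFIED_ZULU_BUILD


def read_java_version() -> str:
    """Run `java -version`; the JVM writes its version banner to stderr.

    Raises FileNotFoundError if `java` is not on the PATH.
    """
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return result.stderr
```

On a cluster node, `is_certified(read_java_version())` checks the local JVM. Keeping the parsing functions separate from the subprocess call makes them easy to test against saved banners from several nodes.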

Supported Databases

| Database | Version |
|---|---|
| MySQL | 8.x |
| PostgreSQL | 15.7 |
| MariaDB | 10.3, 10.11 |
| OracleDB | 12c and above |

Upcoming Releases

| Component | Release Date |
|---|---|
| Flink | Q3 2024 |
| Kafka3 | Q2 2024 |

ODP Process Methodology

ODP process

ODP Migration Options

Acceldata supports three migration methods for ODP, described below.

In-Place Upgrade

This is the fastest migration method and does not require you to uninstall the existing version. You can start this migration without saving any data beyond your normal precautions.

In-place upgrade

Side-car Upgrade

Use the side-car migration technique when tight service-level agreements (SLAs) preclude extended downtime. This process minimizes downtime for individual workloads while providing a straightforward, per-workload rollback mechanism.

Side-car upgrade

Forklift Upgrade

A forklift upgrade requires major changes to your existing IT infrastructure. It is typically needed when even relatively minor enhancements cannot be implemented piece by piece because of legacy systems. In such cases, the hardware and software must be updated at the same time, creating a job so large that it requires a metaphorical forklift to carry it out.

Forklift upgrade

The content here and henceforth is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract.