Configuring ODP with AWS S3 using S3A

Integrating Open Source Data Platform (ODP) with Amazon Simple Storage Service (Amazon S3) through the Hadoop S3A connector lets ODP services such as Hadoop and Hive read and write data stored in S3 buckets through `s3a://` paths. This gives cluster workloads direct access to the scalable storage of AWS S3.

Configuration Steps

To access data in your S3 bucket, make the following configuration changes, replacing the placeholder key values with your own AWS credentials.

core-site.xml

```xml
<!-- Amazon S3 credentials -->
<property>
  <name>fs.s3a.access.key</name>
  <value>AWS_ACCESS_KEY_ID</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>AWS_SECRET_ACCESS_KEY</value>
</property>
<!-- S3A file system implementation -->
<property>
  <name>fs.s3a.impl</name>
  <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
</property>
```

mapred-site.xml

```xml
<!-- S3A file system implementation -->
<property>
  <name>fs.s3a.impl</name>
  <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
</property>
```

hive-site.xml

```xml
<!-- Amazon S3 credentials -->
<property>
  <name>fs.s3a.access.key</name>
  <value>AWS_ACCESS_KEY_ID</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>AWS_SECRET_ACCESS_KEY</value>
</property>
```

After applying these configurations, restart all affected Hadoop and Hive services so that they load the new settings.
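A single malformed tag, such as an end tag missing its closing `>`, can prevent a service from loading its configuration, so it may be worth checking each edited file before restarting. The following is a minimal Python sketch using only the standard library's `xml.etree.ElementTree`. The names `read_properties`, `missing_s3a_keys`, and `REQUIRED_S3A_KEYS` are hypothetical helpers, not part of ODP or Hadoop, and the sketch assumes a complete file with a `<configuration>` root element.

```python
import xml.etree.ElementTree as ET

# Hypothetical checklist: the S3A keys this page sets in core-site.xml.
REQUIRED_S3A_KEYS = frozenset({"fs.s3a.access.key", "fs.s3a.secret.key", "fs.s3a.impl"})


def read_properties(xml_text: str) -> dict:
    """Parse Hadoop-style <property> entries into a {name: value} dict.

    Raises ET.ParseError if the XML is not well-formed.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        # findtext returns None if the child is missing, "" if it is empty.
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def missing_s3a_keys(xml_text: str, required=REQUIRED_S3A_KEYS) -> set:
    """Return the required keys that are absent or have an empty value."""
    props = read_properties(xml_text)
    return {key for key in required if not props.get(key)}


if __name__ == "__main__":
    sample = (
        "<configuration>"
        "<property><name>fs.s3a.access.key</name><value>AWS_ACCESS_KEY_ID</value></property>"
        "</configuration>"
    )
    print(sorted(missing_s3a_keys(sample)))  # ['fs.s3a.impl', 'fs.s3a.secret.key']
```

To check hive-site.xml, pass `required={"fs.s3a.access.key", "fs.s3a.secret.key"}`, since fs.s3a.impl is not set there. To check a real file, read its contents into a string first and pass that string in.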

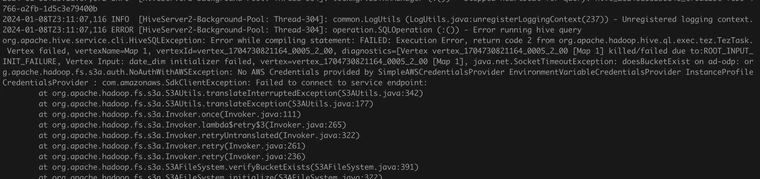

Troubleshooting

If you encounter issues when writing data to S3 through S3A from Hive, refer to this link for guidance on resolving them.

One fix is to define bucket-specific S3 credentials in your core-site.xml configuration file, as shown below.

```xml
<configuration>
  <!-- Bucket-specific S3 credentials for the bucket named ad-odp -->
  <property>
    <name>fs.s3a.bucket.ad-odp.access.key</name>
    <value>aws_access_key_id</value>
  </property>
  <property>
    <name>fs.s3a.bucket.ad-odp.secret.key</name>
    <value>aws_secret_access_key</value>
  </property>
</configuration>
```

In these property names, ad-odp is the bucket name; replace it with the name of your own bucket. For the changes to take effect, restart your Hadoop and Hive services after editing the configuration.
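Hadoop's S3A connector treats a property of the form `fs.s3a.bucket.<bucket>.<option>` as an override of the base `fs.s3a.<option>` property when it connects to that bucket, which is why bucket-specific credentials take precedence over the global ones. The Python sketch below is a simplified model of that lookup, not Hadoop's implementation; `options_for_bucket` and the `GLOBAL_*` values are hypothetical, and the model ignores details such as credential providers and `final` properties.

```python
from typing import Dict, Mapping

BASE_PREFIX = "fs.s3a."
BUCKET_PREFIX = "fs.s3a.bucket."


def options_for_bucket(conf: Mapping[str, str], bucket: str) -> Dict[str, str]:
    """Return the effective fs.s3a.* options for one bucket.

    Simplified model: start from the base fs.s3a.* options, then let every
    fs.s3a.bucket.<bucket>.<option> entry overwrite fs.s3a.<option>.
    Per-bucket entries for other buckets are ignored.
    """
    bucket_prefix = f"{BUCKET_PREFIX}{bucket}."
    # Base options only; per-bucket keys are handled separately below.
    effective = {
        key: value
        for key, value in conf.items()
        if key.startswith(BASE_PREFIX) and not key.startswith(BUCKET_PREFIX)
    }
    for key, value in conf.items():
        if key.startswith(bucket_prefix):
            option = key[len(bucket_prefix):]
            effective[BASE_PREFIX + option] = value
    return effective


if __name__ == "__main__":
    conf = {
        "fs.s3a.access.key": "GLOBAL_ACCESS_KEY",
        "fs.s3a.secret.key": "GLOBAL_SECRET_KEY",
        "fs.s3a.bucket.ad-odp.access.key": "aws_access_key_id",
        "fs.s3a.bucket.ad-odp.secret.key": "aws_secret_access_key",
    }
    print(options_for_bucket(conf, "ad-odp")["fs.s3a.access.key"])  # aws_access_key_id
    print(options_for_bucket(conf, "another-bucket")["fs.s3a.access.key"])  # GLOBAL_ACCESS_KEY
```

In this model, access to ad-odp uses the bucket-specific credentials, while any other bucket falls back to the global fs.s3a.access.key and fs.s3a.secret.key values set in the configuration steps.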