To install Oozie on Acceldata's Open Source Data Platform, perform the following steps:

- Create Oozie users and database. Refer to Using an Existing Database with Oozie.

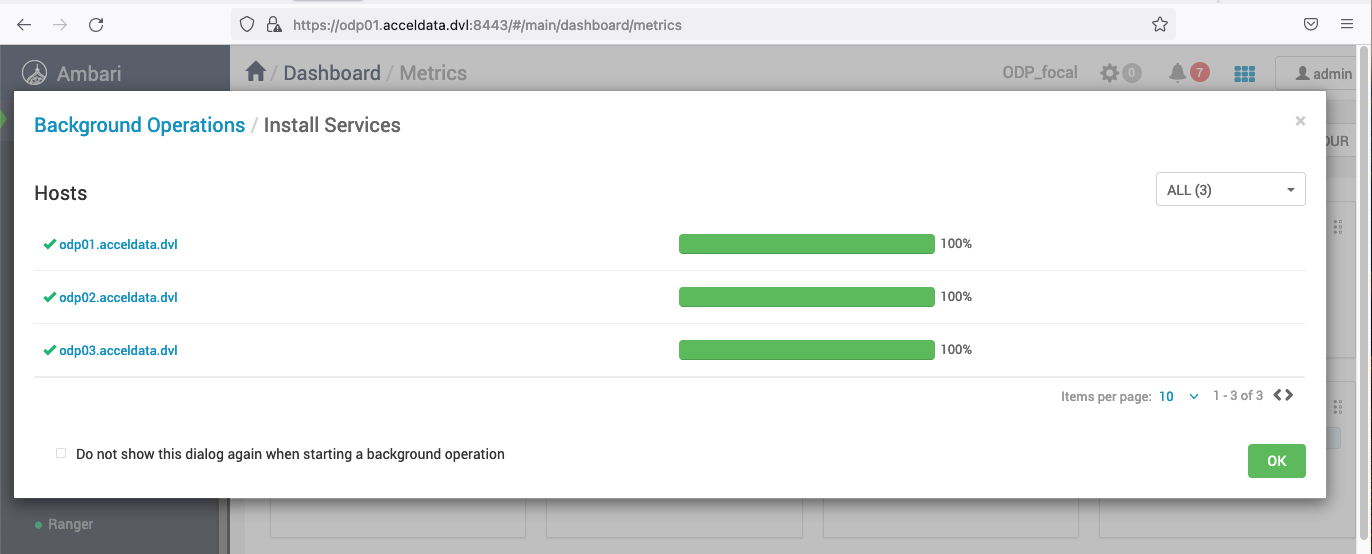

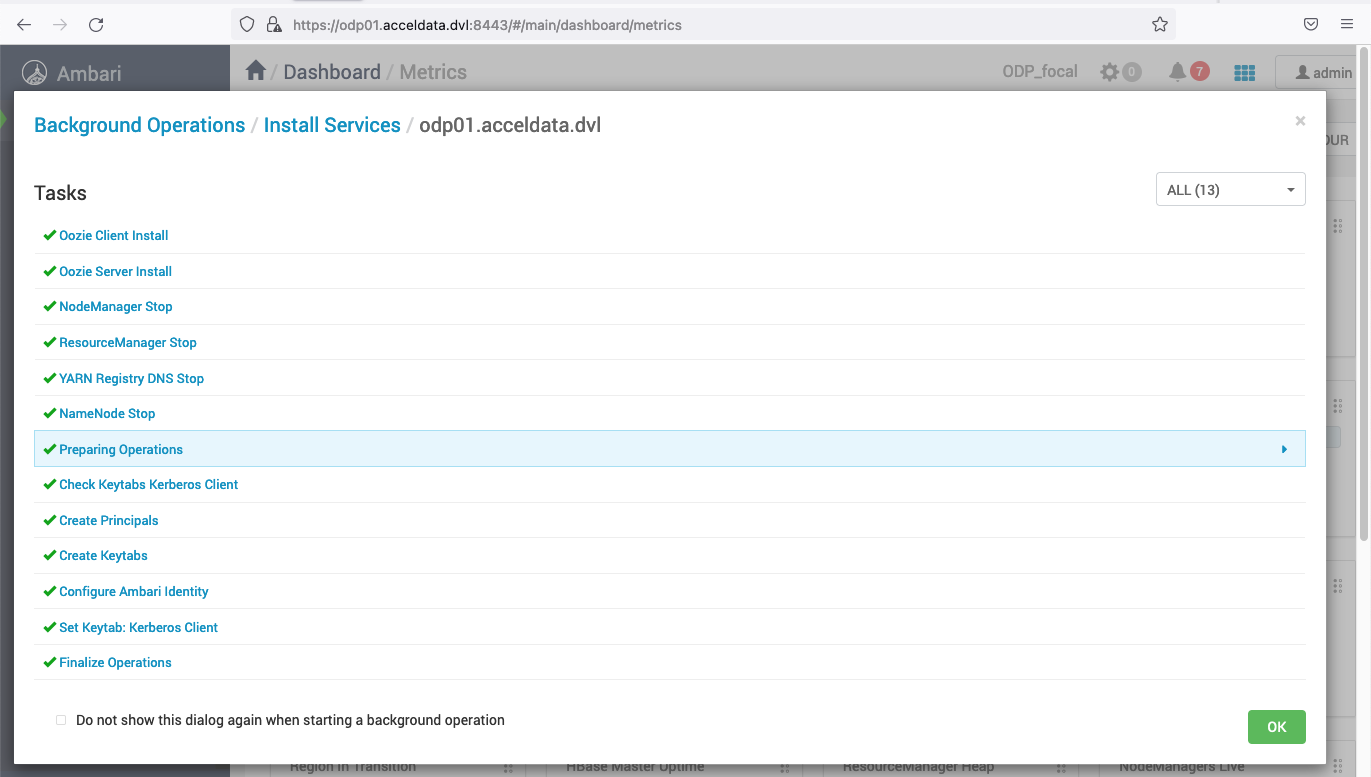

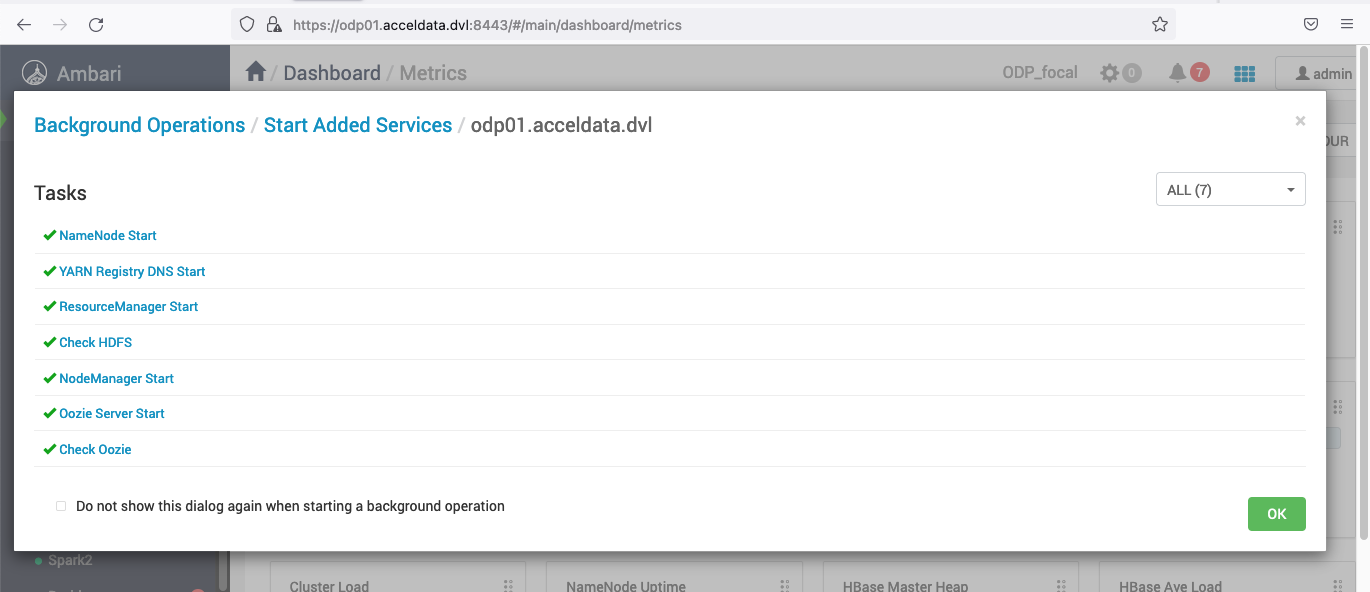

- Install Oozie from Ambari UI on the ODP cluster.

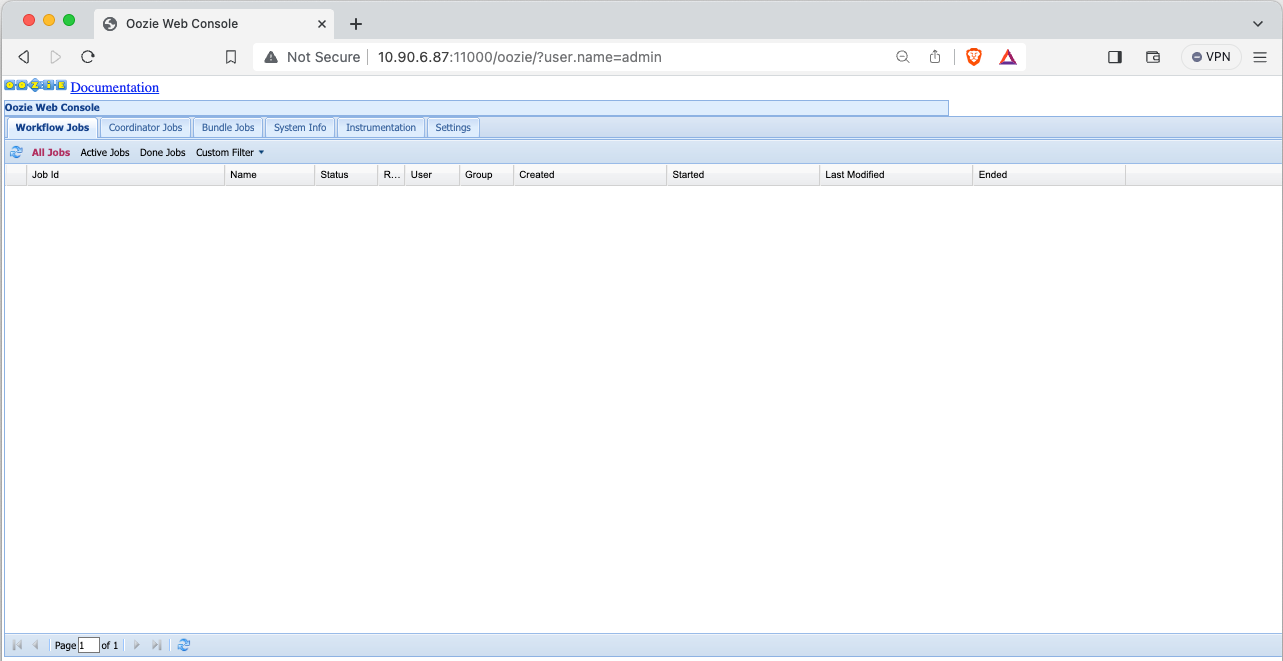

- Validate Oozie UI:

If the Oozie UI shows the following error in the Kerberized cluster:

{"errorMessage":"Authentication required","httpStatusCode":401}

Solution: In the Kerberized cluster, if HTTP authentication needs to be set as simple, follow these steps and restart the Oozie service:

- Navigate to Ambari UI.

- Go to Oozie > Configs > Security.

- Set oozie.authentication.type to simple.

- Restart the Oozie service.

Alternatively, if oozie.authentication.type is set to kerberos, configure your browser with the relevant keytabs to access the Oozie UI.
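As a command-line alternative to the browser check, the Oozie admin status endpoint can be queried with curl. The sketch below only prints the command to run. The helper name `oozie_status_cmd` and the example hostname are hypothetical, and it assumes a curl build with SPNEGO support plus a valid Kerberos ticket (obtained with kinit) when the authentication type is kerberos.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the curl command for checking Oozie status.
# $1 = Oozie base URL, e.g. http://<oozie-server-hostname>:11000/oozie
# $2 = value of oozie.authentication.type (simple or kerberos)
oozie_status_cmd() {
  local base_url="${1%/}" auth_type="$2"
  if [ "$auth_type" = "kerberos" ]; then
    # --negotiate -u : tells curl to use SPNEGO with the current Kerberos ticket
    echo "curl --negotiate -u : \"${base_url}/v1/admin/status\""
  else
    # Assumption: simple authentication reads the caller from user.name
    echo "curl \"${base_url}/v1/admin/status?user.name=oozie\""
  fi
}

# Example host name is a placeholder, not a real server.
oozie_status_cmd "http://oozie.example.com:11000/oozie" kerberos
```

If the printed command returns the same 401 body when run, the client has no usable Kerberos ticket or the authentication type does not match the server setting.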

Enabling SSL on Oozie

For this demonstration, the default directory for saving certificates is set to /opt/security/pki. Feel free to choose any path that suits your preferences.

- Generate the required keystore, truststore and cert.

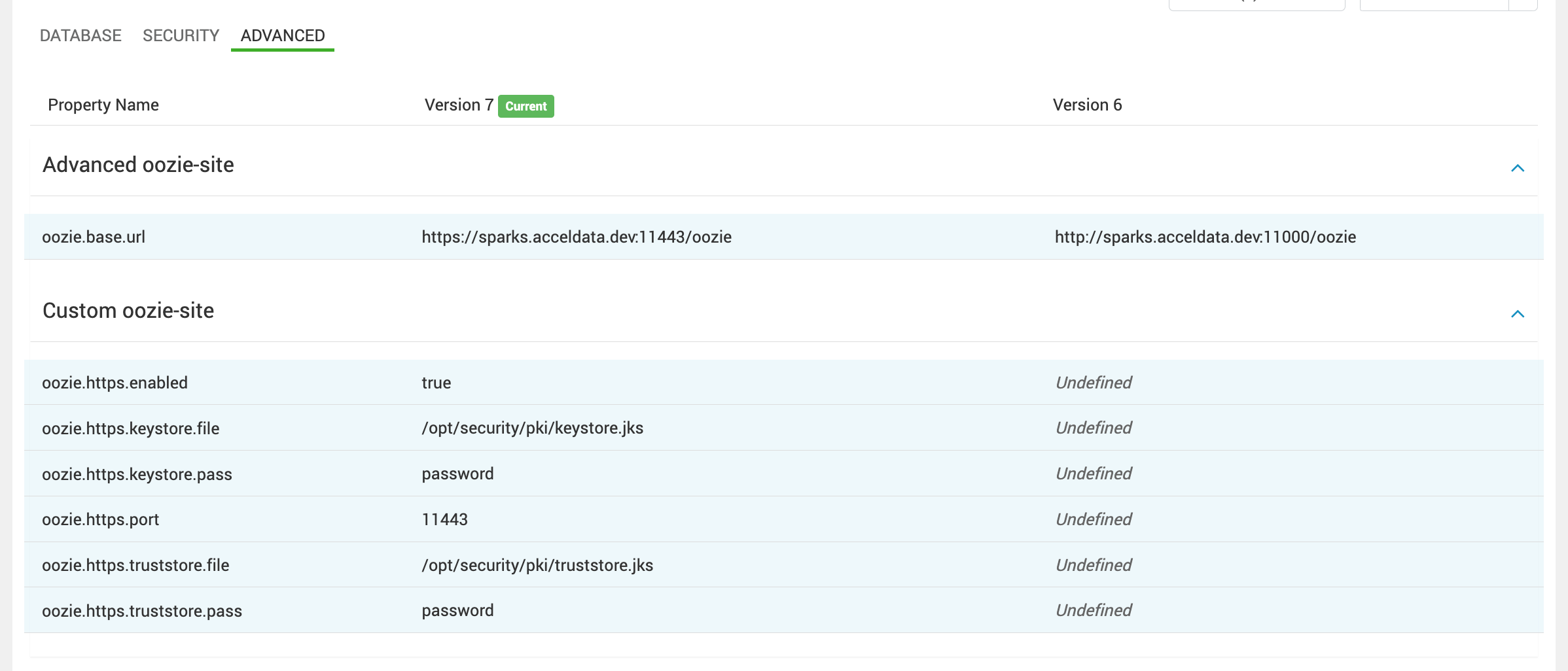

- Set up SSL using the existing JKS files by manually configuring the following properties on the Ambari UI > Oozie > Configs > Advanced page:

Advanced Oozie:

oozie.base.url=https://<oozie-server-hostname>:11443/oozie

Custom Oozie Site

oozie.https.enabled=true

oozie.https.keystore.file=/path/to/keystore.jks

oozie.https.keystore.pass=<password>

oozie.https.port=11443

oozie.https.truststore.file=/path/to/truststore.jks

oozie.https.truststore.pass=<password>

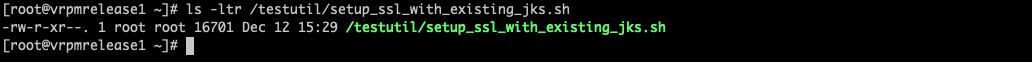

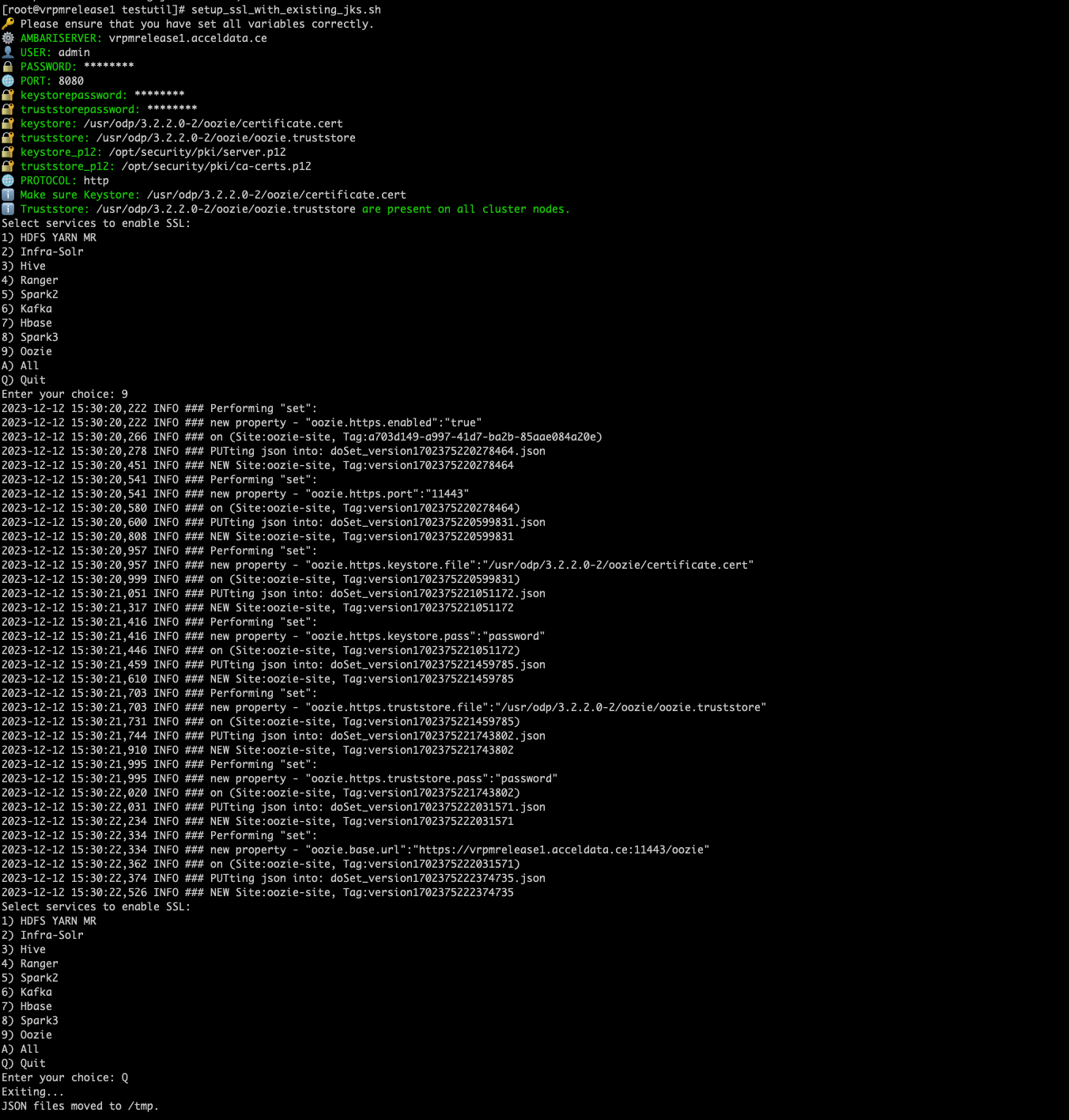

a. Download the shell script to your Oozie server and change the keystore and truststore settings as required: https://github.com/acceldata-io/ce-utils/blob/main/ODP/scripts/setup_ssl_with_existing_jks.sh

b. Grant execute permission on the script: chmod +x setup_ssl_with_existing_jks.sh

c. Run the script: ./setup_ssl_with_existing_jks.sh

- Restart Oozie server from the Ambari UI.

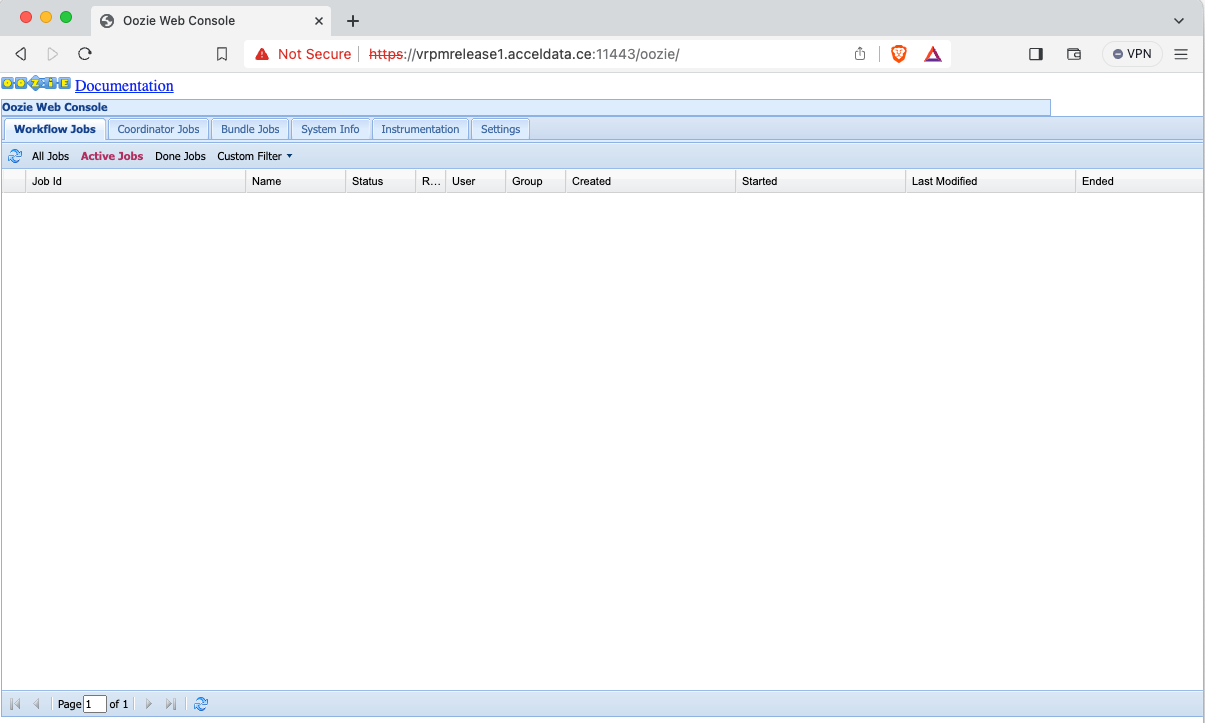

- Access the Oozie UI over HTTPS on port 11443.

- Add the certificate to the Java cacerts truststore before running Oozie jobs.

    # Import certificate to java cacerts
    echo -n | openssl s_client -connect <hostname>:11443 | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' > /tmp/oozie.crt
    keytool -import -noprompt -trustcacerts -alias oozie-cert -file "/tmp/oozie.crt" -keystore "$JAVA_HOME/jre/lib/security/cacerts" -storepass changeit

Running hive2 example Oozie Jobs

Prepare the environment to run the example jobs. If SSL is enabled, use https://<hostname>:11443/oozie/ in place of http://<hostname>:11000/oozie in the steps mentioned below:

- Set up the sharelib. (The sharelib is readily available at /usr/odp/current/oozie-server/share.)

    sudo su -l oozie -s /bin/bash
    cd /usr/odp/current/oozie-server
    hadoop fs -put share /user/oozie
    # update sharelib
    ./bin/oozie-setup.sh sharelib create -fs hdfs://<hostname>:8020
    oozie admin --oozie http://<oozie-server-hostname>:11000/oozie -sharelibupdate

Sample Output:

    [ShareLib update status]
    sharelibDirOld = hdfs://odp01.acceldata.dvl:8020/user/oozie/share/lib/lib_20231103071723
    host = http://0.0.0.0:11000/oozie
    sharelibDirNew = hdfs://odp01.acceldata.dvl:8020/user/oozie/share/lib/lib_20231122114629
    status = Successful

- Confirm hive2 example job related files:

    oozie@odp01:/usr/odp/current/oozie-server$ ls -lrth /usr/odp/current/oozie-server/doc/examples/apps/hive2/
    total 24K
    -rw-r--r-x 1 root root 2.5K Oct 24 20:27 workflow.xml.security
    -rw-r--r-x 1 root root 2.1K Oct 24 20:27 workflow.xml
    -rw-r--r-x 1 root root 966 Oct 24 20:27 script.q
    -rw-r--r-x 1 root root 681 Oct 24 20:27 README
    -rw-r--r-x 1 root root 1.1K Oct 24 20:27 job.properties.security
    -rw-r--r-x 1 root root 1.1K Oct 24 20:27 job.properties

- Create a text file for sample jobs:

This file is to be used by Oozie workflow for table inputs.
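One way to create this file is sketched below. It assumes the flattened listing for this step reads as three integer rows (10, 20 and 30), which matches the single INT column the example script creates. The helper name `write_sample_table` is hypothetical, and the demo writes to a scratch directory rather than the real examples path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the three sample rows into <dir>/t.txt.
write_sample_table() {
  local dir="$1"
  mkdir -p "$dir"
  # One integer per line, matching the single-column example table
  printf '%s\n' 10 20 30 > "$dir/t.txt"
}

# Demo against a scratch directory; on the cluster, pass
# /usr/odp/current/oozie-server/doc/examples/input-data/table instead.
demo_dir="$(mktemp -d)"
write_sample_table "$demo_dir"
cat "$demo_dir/t.txt"
```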

    root@odp01 ~ # cat /usr/odp/current/oozie-server/doc/examples/input-data/table/t.txt
    10
    20
    30

- Validate the HiveServer2 JDBC connection string with the show tables command.

    beeline -u "{{JDBC connection string}}"
    show tables;

Sample (non-kerberized cluster):

    root@odp01 ~# beeline -u "jdbc:hive2://odp02.ubuntu.ad.ce:10000/default;user=hive;password=hive;"
    SLF4J: Class path contains multiple SLF4J bindings
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/tez/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop-hdfs/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/tez/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop-hdfs/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Connecting to jdbc:hive2://odp02.ubuntu.ad.ce:10000/default;user=hive;password=hive;;
    Connected to: Apache Hive (version 3.1.4.3.2.3.3-3)
    Driver: Hive JDBC (version 3.1.4.3.2.3.3-3)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    Beeline version 3.1.4.3.2.3.3-2 by Apache Hive
    0: jdbc:hive2://odp02.ubuntu.ad.ce:10000/def> show tables;

Sample (kerberized cluster):

    root@odp01 ~ # beeline -u "jdbc:hive2://odp02.acceldata.dvl:10000/default;principal=hive/_HOST@ADSRE.COM;"
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/tez/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop-hdfs/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/tez/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/hadoop-hdfs/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Connecting to jdbc:hive2://odp02.acceldata.dvl:10000/default;principal=hive/_HOST@ADSRE.COM;
    Connected to: Apache Hive (version 3.1.4.3.2.3.3-2)
    Driver: Hive JDBC (version 3.1.4.3.2.3.3-2)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    Beeline version 3.1.4.3.2.3.3-2 by Apache Hive
    0: jdbc:hive2://odp02.acceldata.dvl:10000/def> show tables;
    INFO : Compiling command(queryId=hive_20231122122403_d3df1b9e-2596-4d40-b028-080886dff837): show tables
    INFO : Semantic Analysis Completed (retrial = false)
    INFO : Created Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)
    INFO : Completed compiling command(queryId=hive_20231122122403_d3df1b9e-2596-4d40-b028-080886dff837); Time taken: 0.032 seconds
    INFO : Executing command(queryId=hive_20231122122403_d3df1b9e-2596-4d40-b028-080886dff837): show tables
    INFO : Starting task [Stage-0:DDL] in serial mode
    INFO : Completed executing command(queryId=hive_20231122122403_d3df1b9e-2596-4d40-b028-080886dff837); Time taken: 0.026 seconds
    +------------------+
    |     tab_name     |
    +------------------+
    | druid_t          |
    | emp_tlz4_1       |
    | employee_trans1  |
    | merge_demo1      |
    | merge_demo2      |
    | test_lz4_orc     |
    | test_lz4_orc_2   |
    | test_mat         |
    | tm11             |
    +------------------+
    9 rows selected (0.143 seconds)

- Configure workflow.xml file as per your cluster related configurations:

Sample workflow.xml file (simple auth cluster):

    oozie@odp:~$ cat /usr/odp/current/oozie-server/doc/examples/apps/hive2/workflow.xml
    <?xml version="1.0" encoding="UTF-8"?>
    <!--
      Licensed to the Apache Software Foundation (ASF) under one
      or more contributor license agreements. See the NOTICE file
      distributed with this work for additional information
      regarding copyright ownership. The ASF licenses this file
      to you under the Apache License, Version 2.0 (the
      "License"); you may not use this file except in compliance
      with the License. You may obtain a copy of the License at

      http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License.
    -->
    <workflow-app xmlns="uri:oozie:workflow:1.0" name="hive2-wf">
        <start to="hive2-node"/>
        <action name="hive2-node">
            <hive2 xmlns="uri:oozie:hive2-action:1.0">
                <resource-manager>${resourceManager}</resource-manager>
                <name-node>${nameNode}</name-node>
                <prepare>
                    <delete path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data/hive2"/>
                    <mkdir path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data"/>
                </prepare>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                </configuration>
                <jdbc-url>${jdbcURL}</jdbc-url>
                <script>script.q</script>
                <param>INPUT=/user/${wf:user()}/${examplesRoot}/input-data/table</param>
                <param>OUTPUT=/user/${wf:user()}/${examplesRoot}/output-data/hive2</param>
            </hive2>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Hive2 (Beeline) action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>

Sample workflow.xml file (kerberized cluster):

    oozie@odp:~$ cat /usr/odp/current/oozie-server/doc/examples/apps/hive2/workflow.xml
    <?xml version="1.0" encoding="UTF-8"?>
    <!--
      Licensed to the Apache Software Foundation (ASF) under one
      or more contributor license agreements. See the NOTICE file
      distributed with this work for additional information
      regarding copyright ownership. The ASF licenses this file
      to you under the Apache License, Version 2.0 (the
      "License"); you may not use this file except in compliance
      with the License. You may obtain a copy of the License at

      http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License.
    -->
    <workflow-app xmlns="uri:oozie:workflow:1.0" name="hive2-wf">
        <credentials>
            <credential name="hs2-creds" type="hive2">
                <property>
                    <name>hive2.server.principal</name>
                    <value>${jdbcPrincipal}</value>
                </property>
                <property>
                    <name>hive2.jdbc.url</name>
                    <value>${jdbcURL}</value>
                </property>
            </credential>
        </credentials>
        <start to="hive2-node"/>
        <action name="hive2-node" cred="hs2-creds">
            <hive2 xmlns="uri:oozie:hive2-action:1.0">
                <resource-manager>${resourceManager}</resource-manager>
                <name-node>${nameNode}</name-node>
                <prepare>
                    <delete path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data/hive2"/>
                    <mkdir path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data"/>
                </prepare>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                </configuration>
                <jdbc-url>${jdbcURL}</jdbc-url>
                <script>script.q</script>
                <param>INPUT=/user/${wf:user()}/${examplesRoot}/input-data/table</param>
                <param>OUTPUT=/user/${wf:user()}/${examplesRoot}/output-data/hive2</param>
            </hive2>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Hive2 (Beeline) action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>

- Upload the examples to HDFS (Oozie takes workflow.xml from the HDFS examples path or oozie.wf.application.path) and provide the necessary permissions for smooth execution of jobs:

    root@odp ~ # sudo su -l hdfs -s /bin/bash
    # Make example job workflows available on hdfs
    hdfs@odp:~$ hdfs dfs -copyFromLocal /usr/odp/current/oozie-server/doc/examples /user/oozie/examples
    # Change permissions for examples directory
    hdfs@odp:~$ hdfs dfs -chown -R oozie:hadoop /user/oozie/examples
    # Confirm workflow.xml in hdfs is correct (workflow.xml for kerberized cluster is different)
    hdfs@odp:~$ hdfs dfs -cat /user/oozie/examples/apps/hive2/workflow.xml
    exit

- Run jobs:

    sudo su -l oozie -s /bin/bash
    cd /usr/odp/current/oozie-server/doc/
    oozie job --oozie http://<hostname>:11000/oozie/ -config examples/apps/hive2/job.properties -run -verbose

Troubleshooting

Issue with Oozie Job [options]

The following issues and solutions apply when executing the oozie job run/start/suspend/kill commands:

Error: IO_ERROR : java.io.IOException: Error while connecting Oozie server. No of retries = 4. Exception = Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions

    [oozie@vrpmrelease1 doc]$ oozie job --oozie https://vrpmrelease1.acceldata.ce:11443/oozie/ -config examples/apps/hive2/job.properties -run -debug
     Auth type : null
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/oozie/lib/slf4j-log4j12-1.6.6.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/oozie/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    Connection exception has occurred [ java.net.ConnectException Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions ]. Trying after 1 sec. Retry count = 1
    Connection exception has occurred [ java.net.ConnectException Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions ]. Trying after 2 sec. Retry count = 2
    Connection exception has occurred [ java.net.ConnectException Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions ]. Trying after 4 sec. Retry count = 3
    Connection exception has occurred [ java.net.ConnectException Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions ]. Trying after 8 sec. Retry count = 4
    java.net.ConnectException: Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.wrapExceptionWithMessage(KerberosAuthenticator.java:232)
        at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:216)
        at org.apache.oozie.client.AuthOozieClient.createConnection(AuthOozieClient.java:197)
        at org.apache.oozie.client.OozieClient$1.doExecute(OozieClient.java:515)
        at org.apache.oozie.client.retry.ConnectionRetriableClient.execute(ConnectionRetriableClient.java:44)
        at org.apache.oozie.client.OozieClient.createRetryableConnection(OozieClient.java:517)
        at org.apache.oozie.client.OozieClient.getSupportedProtocolVersions(OozieClient.java:397)
        at org.apache.oozie.client.OozieClient.validateWSVersion(OozieClient.java:357)
        at org.apache.oozie.client.OozieClient.createURL(OozieClient.java:468)
        at org.apache.oozie.client.OozieClient.access$000(OozieClient.java:88)
        at org.apache.oozie.client.OozieClient$ClientCallable.call(OozieClient.java:562)
        at org.apache.oozie.client.OozieClient.run(OozieClient.java:884)
        at org.apache.oozie.cli.OozieCLI.jobCommand(OozieCLI.java:1127)
        at org.apache.oozie.cli.OozieCLI.processCommand(OozieCLI.java:727)
        at org.apache.oozie.cli.OozieCLI.run(OozieCLI.java:682)
        at org.apache.oozie.cli.OozieCLI.main(OozieCLI.java:245)
    Caused by: java.net.ConnectException: Connection refused (Connection refused)
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
        at java.net.Socket.connect(Socket.java:607)
        at sun.security.ssl.SSLSocketImpl.connect(SSLSocketImpl.java:293)
        at sun.security.ssl.BaseSSLSocketImpl.connect(BaseSSLSocketImpl.java:173)
        at sun.net.NetworkClient.doConnect(NetworkClient.java:180)
        at sun.net.www.http.HttpClient.openServer(HttpClient.java:463)
        at sun.net.www.http.HttpClient.openServer(HttpClient.java:558)
        at sun.net.www.protocol.https.HttpsClient.<init>(HttpsClient.java:264)
        at sun.net.www.protocol.https.HttpsClient.New(HttpsClient.java:367)
        at sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.getNewHttpClient(AbstractDelegateHttpsURLConnection.java:203)
        at sun.net.www.protocol.http.HttpURLConnection.plainConnect0(HttpURLConnection.java:1162)
        at sun.net.www.protocol.http.HttpURLConnection.plainConnect(HttpURLConnection.java:1056)
        at sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(AbstractDelegateHttpsURLConnection.java:189)
        at sun.net.www.protocol.https.HttpsURLConnectionImpl.connect(HttpsURLConnectionImpl.java:167)
        at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:189)
        ... 14 more
    Error: IO_ERROR : java.io.IOException: Error while connecting Oozie server. No of retries = 4. Exception = Error while authenticating with endpoint: https://vrpmrelease1.acceldata.ce:11443/oozie/versions

Issue/Solution: In a secure or kerberized cluster, Oozie job execution requires authentication. Authenticate by using the kinit command: kinit -kt </path/to/oozie.service.keytab> <oozie.principal>, and then proceed to re-run the Oozie job command.

java.io.IOException: configuration is not specified at org.apache.oozie.cli.OozieCLI.getConfiguration(OozieCLI.java:857)

    [oozie@vrpmrelease1 ~]$ oozie job --oozie https://vrpmrelease1.acceldata.ce:11443/oozie/ -config examples/apps/hive2/job.properties -run -debug
     Auth type : null
    java.io.IOException: configuration is not specified
        at org.apache.oozie.cli.OozieCLI.getConfiguration(OozieCLI.java:857)
        at org.apache.oozie.cli.OozieCLI.jobCommand(OozieCLI.java:1127)
        at org.apache.oozie.cli.OozieCLI.processCommand(OozieCLI.java:727)
        at org.apache.oozie.cli.OozieCLI.run(OozieCLI.java:682)
        at org.apache.oozie.cli.OozieCLI.main(OozieCLI.java:245)

Issue/Solution: Oozie encounters difficulty locating or accessing configurations or the configuration file needed for job execution. Ensure that the path to the job.properties file is accurate. Additionally, validate and assign the required user permissions to the job.properties file.
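In the failing run above, the relative path examples/apps/hive2/job.properties is used from the home directory, whereas the earlier run step first changes to /usr/odp/current/oozie-server/doc/. A small pre-flight check along these lines can tell a wrong path apart from a permissions problem. The helper name `check_job_config` is hypothetical, and the demo uses a scratch file in place of a real job.properties.

```shell
#!/usr/bin/env bash
# Hypothetical helper: explain why a -config path might be rejected.
check_job_config() {
  local path="$1"
  if [ ! -e "$path" ]; then
    # Relative paths are resolved against the current working directory
    echo "missing: $path (relative paths resolve against $PWD)"
    return 1
  fi
  if [ ! -r "$path" ]; then
    echo "not readable by $(id -un): $path"
    return 1
  fi
  echo "ok: $path"
}

# Demo with a scratch file standing in for job.properties.
demo_cfg="$(mktemp)"
check_job_config "$demo_cfg"
check_job_config "/nonexistent/job.properties" || true
```

Running the check from the same directory and as the same user as the oozie job command gives the most relevant result.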

Error: E0508 : E0508: User [?] not authorized for WF job [0000001-231212184930968-oozie-oozi-W]

    [oozie@vrpmrelease1 ~]$ oozie job --oozie https://vrpmrelease1.acceldata.ce:11443/oozie/ -suspend 0000001-231212184930968-oozie-oozi-W
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/oozie/lib/slf4j-log4j12-1.6.6.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/odp/3.2.3.3-3/oozie/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    Error: E0508 : E0508: User [?] not authorized for WF job [0000001-231212184930968-oozie-oozi-W]

Issue/Solution: Oozie cannot renew an expired authentication token; therefore, it is necessary to delete the Oozie authentication token using the command rm -rf ~/.oozie-auth-token* and then rerun the command.

Issues Post Oozie Job Submission

If jobs are getting killed or are stuck in an endless running state, check the Oozie logs manually from a terminal:

    tail -fn 500 /var/log/oozie/oozie.log

Scan the logs for any exceptions.
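The scan can be narrowed with grep. The sketch below is only an illustration: the helper names `log_problems` and `log_app_ids` are hypothetical, the sample lines are abbreviated from the log excerpt in this guide, and the pattern assumes each entry starts with a timestamp followed by the log level.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print entries logged at WARN or ERROR level.
log_problems() {
  grep -E '^[0-9-]+ [0-9:,]+ +(WARN|ERROR) ' "$1" || true
}

# Hypothetical helper: print the distinct YARN application IDs found in the log.
log_app_ids() {
  grep -oE 'application_[0-9]+_[0-9]+' "$1" | sort -u
}

# Abbreviated sample entries written to a scratch file for the demo.
sample_log="$(mktemp)"
cat > "$sample_log" <<'EOF'
2023-11-27 11:08:26,113 INFO Hive2ActionExecutor:520 - action completed, external ID [application_1700820353491_0003]
2023-11-27 11:08:26,115 WARN Hive2ActionExecutor:523 - Launcher ERROR, reason: Main Class [org.apache.oozie.action.hadoop.Hive2Main], exit code [2]
EOF

log_problems "$sample_log"
log_app_ids "$sample_log"
```

On a cluster, the same functions can be pointed at /var/log/oozie/oozie.log; the application IDs they print are the ones to pass to yarn logs.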

Sample section from logs trace:

    2023-11-27 11:08:25,793 INFO CallbackServlet:520 - SERVER[odp.manish1.ce] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] callback for action [0000005-231124153738389-oozie-oozi-W@hive2-node]
    2023-11-27 11:08:26,113 INFO Hive2ActionExecutor:520 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] action completed, external ID [application_1700820353491_0003]
    2023-11-27 11:08:26,115 WARN Hive2ActionExecutor:523 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] Launcher ERROR, reason: Main Class [org.apache.oozie.action.hadoop.Hive2Main], exit code [2]
    2023-11-27 11:08:26,169 INFO Hive2ActionExecutor:520 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] Action ended with external status [FAILED/KILLED]
    2023-11-27 11:08:26,191 INFO ActionEndXCommand:520 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] ERROR is considered as FAILED for SLA
    2023-11-27 11:08:26,255 INFO JPAService:520 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@hive2-node] No results found
    2023-11-27 11:08:26,311 INFO ActionStartXCommand:520 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000005-231124153738389-oozie-oozi-W] ACTION[0000005-231124153738389-oozie-oozi-W@fail] Start action [0000005-231124153738389-oozie-oozi-W@fail] with user-retry state : userRetryCount [0], userRetryMax [0], userRetryInterval [10]

Part A

Search oozie.log for the external ID to identify the Hadoop application ID, then run the following command to check the error in detail.

    yarn logs -applicationId <application_ID>

Launcher ERROR, reason: Main Class [org.apache.oozie.action.hadoop.Hive2Main], exit code [2]

Here the error is as mentioned below:

    oozie@odp:~$ yarn logs -applicationId application_1700820353491_0003
    ...
    Connecting to jdbc:hive2://odp.manish2.ce:10000/default
    Connected to: Apache Hive (version 3.1.4.3.2.3.3-3)
    Driver: Hive JDBC (version 3.1.4.3.2.3.3-3)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    0: jdbc:hive2://odp.manish2.ce:10000/default> -- Foundation (ASF) under one.ce:10000/default> -- Licensed to the Apache Software
    ...
    INFO : Query ID = hive_20231127110808_d12176e6-7c44-430d-b882-f92bac7b3a6c
    INFO : Total jobs = 1
    INFO : Launching Job 1 out of 1
    INFO : Starting task [Stage-1:MAPRED] in serial mode
    INFO : Subscribed to counters: [] for queryId: hive_20231127110808_d12176e6-7c44-430d-b882-f92bac7b3a6c
    INFO : Tez session hasn't been created yet. Opening session
    ERROR : Failed to execute tez graph.
    org.apache.tez.dag.api.TezException: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request! Cannot allocate containers as requested resource is greater than maximum allowed allocation. Requested resource type=[memory-mb], Requested resource=<memory:5632, vCores:1>, maximum allowed allocation=<memory:5120, vCores:8>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:5120, vCores:8>
        at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.throwInvalidResourceException(SchedulerUtils.java:515)
        at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.checkResourceRequestAgainstAvailableResource(SchedulerUtils.java:411)
        at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:339)
        at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:294)
        at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.validateAndCreateResourceRequest(RMAppManager.java:577)
        at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.createAndPopulateNewRMApp(RMAppManager.java:424)
        at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.submitApplication(RMAppManager.java:359)
        at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.submitApplication(ClientRMService.java:696)
        at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.submitApplication(ApplicationClientProtocolPBServiceImpl.java:290)
        at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:611)
    ....

Issue/Solution: The job was terminated because it requested more memory (5632 MB) than the maximum YARN container allocation (5120 MB). To address this, navigate to Ambari UI > Yarn > Configs > Settings > Container and increase the Maximum Container Size (Memory). Then restart Yarn.
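To size the increase, the requested and maximum values can be read straight from the exception text. The following is a minimal sketch, assuming the message wording shown in the trace above; the helper name `yarn_memory_gap` is hypothetical and the sample message is shortened to the relevant part.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report requested vs. maximum container memory (MB)
# parsed from an InvalidResourceRequestException message.
yarn_memory_gap() {
  local msg="$1" requested maximum
  requested="$(printf '%s\n' "$msg" | sed -n 's/.*Requested resource=<memory:\([0-9]*\),.*/\1/p')"
  maximum="$(printf '%s\n' "$msg" | sed -n 's/.*maximum allowed allocation=<memory:\([0-9]*\),.*/\1/p')"
  echo "requested=${requested} maximum=${maximum} shortfall=$((requested - maximum))"
}

# Shortened copy of the message from the trace above.
msg='Requested resource type=[memory-mb], Requested resource=<memory:5632, vCores:1>, maximum allowed allocation=<memory:5120, vCores:8>'
yarn_memory_gap "$msg"
```

For the trace above, the shortfall is 512 MB, so the Maximum Container Size needs to be raised to at least 5632 MB.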

Launcher ERROR, reason: Main Class [org.apache.oozie.action.hadoop.Hive2Main], exit code [2]

Here the error is as mentioned below:

    oozie@odp:~$ yarn logs -applicationId application_1702456280585_0015
    ...
    INFO : Compiling command(queryId=hive_20231214153027_36e6c141-0b7a-482f-9951-a14dd866d345): CREATE EXTERNAL TABLE test (a INT) STORED AS TEXTFILE LOCATION '/user/oozie/examples/input-data/table'
    INFO : Semantic Analysis Completed (retrial = false)
    INFO : Created Hive schema: Schema(fieldSchemas:null, properties:null)
    INFO : Completed compiling command(queryId=hive_20231214153027_36e6c141-0b7a-482f-9951-a14dd866d345); Time taken: 0.308 seconds
    INFO : Operation CREATETABLE obtained 1 locks
    INFO : Executing command(queryId=hive_20231214153027_36e6c141-0b7a-482f-9951-a14dd866d345): CREATE EXTERNAL TABLE test (a INT) STORED AS TEXTFILE LOCATION '/user/oozie/examples/input-data/table'
    INFO : Starting task [Stage-0:DDL] in serial mode
    ERROR : Failed
    org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:java.security.AccessControlException: Permission denied: user=oozie, access=WRITE, inode="/warehouse/tablespace/managed/hive":hive:hadoop:drwx------
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:496)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:342)
    ...
    Caused by: org.apache.hadoop.hive.metastore.api.MetaException: java.security.AccessControlException: Permission denied: user=oozie, access=WRITE, inode="/warehouse/tablespace/managed/hive":hive:hadoop:drwx------
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:496)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:342)
        at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkDefaultEnforcer(RangerHdfsAuthorizer.java:634)
        at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkRangerPermission(RangerHdfsAuthorizer.java:421)
        at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:248)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:241)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1927)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1911)
        at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPathAccess(FSDirectory.java:1861)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAccess(FSNamesystem.java:8136)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.checkAccess(NameNodeRpcServer.java:2301)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.checkAccess(ClientNamenodeProtocolServerSideTranslatorPB.java:1693)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:549)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:518)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1086)
        at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1029)
        at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:957)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2957)

Issue/Solution: Oozie lacks authorization in Ranger policies for accessing the Hive warehouse in HDFS. Rectify this by establishing a policy in the Hadoop Ranger Service through the Ranger UI. Grant Oozie the necessary write permissions for the specified path. Allow time for policy synchronization and proceed to rerun the Oozie job.
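The user, access type and path that the Ranger policy has to cover can be pulled out of the AccessControlException line. This is a sketch under the assumption that the message keeps the format shown in the trace above; the helper name `denied_access_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise an HDFS "Permission denied" message as
# the user, access type and path that a policy would need to allow.
denied_access_summary() {
  printf '%s\n' "$1" |
    sed -n 's/.*Permission denied: user=\([^,]*\), access=\([^,]*\), inode="\([^"]*\)".*/user=\1 access=\2 path=\3/p'
}

# Message copied from the trace above.
msg='java.security.AccessControlException: Permission denied: user=oozie, access=WRITE, inode="/warehouse/tablespace/managed/hive":hive:hadoop:drwx------'
denied_access_summary "$msg"
```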

Yarn UI shows application in accepted state for too long.

    Application is added to the scheduler and is not yet activated. Skipping AM assignment as cluster resource is empty. Details : AM Partition = DEFAULT_PARTITION; AM Resource Request = memory:5120, vCores:1; Queue Resource Limit for AM = memory:0, vCores:0; User AM Resource Limit of the queue = memory:3532, vCores:8; Queue AM Resource Usage = memory:2574, vCores:1;

Issue/Solution: The scheduler cannot allocate an Application Master because the requested AM resources exceed the available AM resources. Resolve this either by adjusting job.properties to restrict resource allocation for Oozie jobs, following the guidance on limiting memory allocation for Oozie MapReduce jobs, or by raising the maximum resource limit for AM in YARN. In this instance, the maximum resource limit for AM was raised. Navigate to Ambari UI > Views (Drop-down menu) > YARN Queue Manager > Maximum AM Resource and increase the percentage.

Part B

If oozie.log lacks any logs indicating an external application ID or Hadoop application ID, it suggests that Oozie might be encountering difficulties connecting to the resource manager.

SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://vrpmrelease2.acceldata.ce:10000/default;;principal=hive/_HOST@ADSRE.COM: java.net.ConnectException: Connection refused (Connection refused)

2023-12-14 15:38:10,363 WARN ActionStartXCommand:523 - SERVER[vrpmrelease1.acceldata.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000002-231214151510721-oozie-oozi-W] ACTION[0000002-231214151510721-oozie-oozi-W@hive2-node] Error starting action [hive2-node]. ErrorType [ERROR], ErrorCode [SQLException], Message [SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://vrpmrelease2.acceldata.ce:10000/default;;principal=hive/_HOST@ADSRE.COM: java.net.ConnectException: Connection refused (Connection refused)]

org.apache.oozie.action.ActionExecutorException: SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://vrpmrelease2.acceldata.ce:10000/default;;principal=hive/_HOST@ADSRE.COM: java.net.ConnectException: Connection refused (Connection refused) at org.apache.oozie.action.ActionExecutor.convertException(ActionExecutor.java:452) at org.apache.oozie.action.hadoop.JavaActionExecutor.submitLauncher(JavaActionExecutor.java:1134) at org.apache.oozie.action.hadoop.JavaActionExecutor.start(JavaActionExecutor.java:1644) at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:243) at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:68) at org.apache.oozie.command.XCommand.call(XCommand.java:290) at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:363) at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:292) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at org.apache.oozie.service.CallableQueueService$CallableWrapper.run(CallableQueueService.java:210) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:750)

Caused by: java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://vrpmrelease2.acceldata.ce:10000/default;;principal=hive/_HOST@ADSRE.COM: java.net.ConnectException: Connection refused (Connection refused) at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:413) at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:282) at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:94) at java.sql.DriverManager.getConnection(DriverManager.java:664) at java.sql.DriverManager.getConnection(DriverManager.java:270) at org.apache.oozie.action.hadoop.Hive2Credentials.updateCredentials(Hive2Credentials.java:68) at org.apache.oozie.action.hadoop.JavaActionExecutor.setCredentialTokens(JavaActionExecutor.java:1546) at org.apache.oozie.action.hadoop.JavaActionExecutor.submitLauncher(JavaActionExecutor.java:1082) ... 11 more

Caused by: org.apache.thrift.transport.TTransportException: java.net.ConnectException: Connection refused (Connection refused) at org.apache.thrift.transport.TSocket.open(TSocket.java:243) at org.apache.thrift.transport.TSaslTransport.open(TSaslTransport.java:231) at org.apache.thrift.transport.TSaslClientTransport.open(TSaslClientTransport.java:39) at org.apache.hadoop.hive.metastore.security.TUGIAssumingTransport$1.run(TUGIAssumingTransport.java:51) at org.apache.hadoop.hive.metastore.security.TUGIAssumingTransport$1.run(TUGIAssumingTransport.java:48) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762) at org.apache.hadoop.hive.metastore.security.TUGIAssumingTransport.open(TUGIAssumingTransport.java:48) at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:505) at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:375) ... 18 more

Caused by: java.net.ConnectException: Connection refused (Connection refused) at java.net.PlainSocketImpl.socketConnect(Native Method) at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350) at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206) at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188) at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) at java.net.Socket.connect(Socket.java:607) at org.apache.thrift.transport.TSocket.open(TSocket.java:238) ... 28 more

2023-12-14 15:38:10,367 WARN ActionStartXCommand:523 - SERVER[vrpmrelease1.acceldata.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000002-231214151510721-oozie-oozi-W] ACTION[0000002-231214151510721-oozie-oozi-W@hive2-node] Setting Action Status to [DONE]

Issue/Solution: The connection to HiveServer2 was refused because the JDBC URI or principal passed in the job configuration is incorrect. Verify these settings and rerun the job.
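When verifying the JDBC URI, it helps to break it into host, port, database, and session parameters, and then check that something is listening on that host and port. The following is a minimal sketch under simple assumptions: it handles the `jdbc:hive2://host:port/db;key=value` form seen in the log above, ignores any `?` or `#` sections, and falls back to port 10000 when no port is given. The function names are illustrative.

```python
import socket


def parse_hive2_uri(uri, default_port=10000):
    """Split a hive2 JDBC URI into ([(host, port), ...], database, session params)."""
    prefix = "jdbc:hive2://"
    if not uri.startswith(prefix):
        raise ValueError("not a hive2 JDBC URI: " + uri)
    authority, _, rest = uri[len(prefix):].partition("/")
    endpoints = []
    for item in authority.split(","):
        host, _, port = item.partition(":")
        endpoints.append((host, int(port) if port else default_port))
    # Drop Hive conf (?...) and Hive variable (#...) sections; keep session variables.
    rest = rest.split("?", 1)[0].split("#", 1)[0]
    segments = rest.split(";")
    database = segments[0] or "default"
    # Empty segments (for example from ";;") are skipped.
    params = dict(s.split("=", 1) for s in segments[1:] if "=" in s)
    return endpoints, database, params


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


uri = "jdbc:hive2://vrpmrelease2.acceldata.ce:10000/default;;principal=hive/_HOST@ADSRE.COM"
endpoints, database, params = parse_hive2_uri(uri)
print(endpoints, database, params)
```

Calling `can_connect(host, port)` for each parsed endpoint from the Oozie server host shows whether HiveServer2 is reachable there; a `False` result corresponds to the "Connection refused" error in the log.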

Example 4 (i): org.apache.oozie.action.ActionExecutorException: JA006: Connection refused

2023-11-24 19:04:05,742 WARN ActionStartXCommand:523 - SERVER[odp.manish1.ce] USER[oozie] GROUP[-] TOKEN[] APP[hive2-wf] JOB[0000004-231124153738389-oozie-oozi-W] ACTION[0000004-231124153738389-oozie-oozi-W@hive2-node] Error starting action [hive2-node]. ErrorType [TRANSIENT], ErrorCode [ JA006], Message [ JA006: Connection refused]

org.apache.oozie.action.ActionExecutorException: JA006: Connection refused at org.apache.oozie.action.ActionExecutor.convertExceptionHelper(ActionExecutor.java:463) at org.apache.oozie.action.ActionExecutor.convertException(ActionExecutor.java:443) at org.apache.oozie.action.hadoop.JavaActionExecutor.submitLauncher(JavaActionExecutor.java:1134) at org.apache.oozie.action.hadoop.JavaActionExecutor.start(JavaActionExecutor.java:1644) at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:243)...

Issue/Solution: Validate the ResourceManager address in the job.properties file. In this case, the address contained a typo. After the typo was corrected, the example job ran successfully.
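A typo like this is easy to miss by eye, so a small check that compares the address in job.properties with the expected ResourceManager address can help. The sketch below is illustrative only: the `resourceManager` key, the host names, and port 8032 are assumptions, so use the key your workflow actually references and the address configured for your cluster.

```python
def load_properties(text):
    """Parse simple key=value lines, skipping blank lines and comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resource_manager_mismatch(props, expected, key="resourceManager"):
    """Return (actual, expected) if the configured address differs, else None."""
    actual = props.get(key)
    return None if actual == expected else (actual, expected)


# Hypothetical job.properties content with a transposed-letter typo in the host name.
job_properties = """
nameNode=hdfs://odp.manish1.ce:8020
resourceManager=odp.manihs1.ce:8032
queueName=default
"""

mismatch = resource_manager_mismatch(
    load_properties(job_properties),
    expected="odp.manish1.ce:8032",  # assumed ResourceManager address
)
print("mismatch:", mismatch)
```

A non-empty result shows the configured and expected addresses side by side, which makes a one-character difference obvious.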