Mongo Sharded Cluster

Objective

The goal is to integrate MongoDB Sharded Cluster with Pulse, a data observability solution by Acceldata. This integration is designed to provide users with enhanced scalability, performance, and fault tolerance for MongoDB database management.

Overview

Pulse v3.3.20 introduces support for a MongoDB sharded cluster. Handling large data volumes on a single machine runs into scalability and performance limits; sharding addresses these by distributing data across multiple machines. Combined with Pulse's observability features, this gives users insight into their Hadoop cluster's health, performance, and activity.

Prerequisites

- Understanding of MongoDB: Users need a comprehensive grasp of MongoDB basics, like collections, documents, indexes, and queries, along with an understanding of MongoDB architecture elements like replica sets and sharding for successful implementation.

- Capacity Planning: Perform detailed capacity planning to assess the resource needs of the sharded cluster. This should cover expected data volume, query patterns, and future growth to ensure the cluster is appropriately sized and resourced.

- Networking Configuration: Proper network setup is essential for MongoDB node communication within the cluster. This involves setting up firewall rules, network security groups, and DNS configurations to ensure smooth interaction among cluster components.

- Hardware/Infrastructure: Confirm that there are enough hardware and infrastructure resources to deploy the sharded cluster, including sufficient CPU, memory, and storage to handle anticipated workloads and data volume.

- Security Measures: Adopt strong security practices to safeguard your MongoDB deployment, incorporating authentication, authorization, data encryption in transit and at rest, and access control to limit unauthorized cluster access.
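
The capacity planning item above can be made concrete with a rough storage estimate. The sketch below is only an illustration: the function name, the growth model, the 3-member replica sets, and every input figure are assumptions, not Pulse or MongoDB sizing guidance.

```python
import math

def estimate_storage(data_gb, annual_growth, years, shards,
                     replicas_per_shard=3, headroom=0.3):
    """Return (total_gb, per_node_gb) for a sharded cluster.

    All parameters are illustrative assumptions:
    - data_gb: current logical data size, indexes included
    - annual_growth: fractional growth per year (0.5 means +50%)
    - headroom: extra free-space fraction kept on every node
    """
    projected = data_gb * (1 + annual_growth) ** years
    # Each shard holds roughly 1/shards of the data, assuming an even shard key.
    per_node = projected / shards * (1 + headroom)
    # Every replica set member stores a full copy of its shard's data.
    total = per_node * shards * replicas_per_shard
    return math.ceil(total), math.ceil(per_node)

# Hypothetical inputs: 500 GB today, +50% per year, 2-year horizon, 3 shards.
total_gb, per_node_gb = estimate_storage(
    data_gb=500, annual_growth=0.5, years=2, shards=3
)
print(total_gb, per_node_gb)
```

An estimate like this covers storage only; CPU and memory sizing depend on query patterns and working-set size and need to be measured separately.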

Design Architecture Overview

Components:

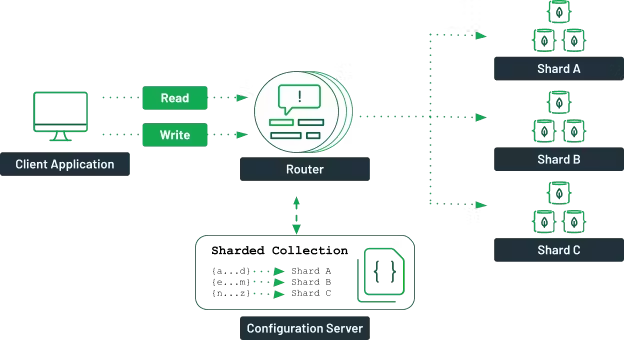

- Mongos Router: Serves as the gateway for database connections, directing operations to the appropriate shard. Deployment: Multiple Mongos routers can interface with the config server, facilitating operations within the sharded cluster without hosting any databases themselves.

- Config Server: Central to the sharded architecture, it stores the metadata needed for data routing and MongoDB read/write operations. Deployment: Config servers must be deployed as a replica set (a requirement in current MongoDB versions); three nodes is the recommended size for maintaining cluster metadata.

- Shards: Represent the actual data storage units in a MongoDB sharded cluster, hosting databases and collections. Deployment: Adhering to MongoDB recommendations, each shard should ideally be a 3-node replica set to ensure data redundancy and availability.
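
To illustrate how these components fit together, the following sketch builds the MongoDB admin commands (`addShard`, `enableSharding`, `shardCollection`) that register shards and shard one collection. It only constructs the command documents and does not connect to anything. The host names, the `pulse_db` database, the `metrics` collection, and the `host_id` shard key are hypothetical placeholders, not values used by Pulse.

```python
def shard_setup_commands(shards, database, collection, shard_key_field):
    """Build the admin commands that register shards and shard one collection.

    `shards` maps a replica set name to the list of its member host:port strings.
    """
    commands = []
    for rs_name, members in shards.items():
        # addShard takes "<replicaSetName>/<host1>,<host2>,..." as its value.
        commands.append({"addShard": f"{rs_name}/{','.join(members)}"})
    commands.append({"enableSharding": database})
    commands.append({
        "shardCollection": f"{database}.{collection}",
        "key": {shard_key_field: "hashed"},
    })
    return commands

# Hypothetical topology: two shards, each a 3-node replica set.
example_shards = {
    "shard0": ["s0-a.example:27018", "s0-b.example:27018", "s0-c.example:27018"],
    "shard1": ["s1-a.example:27018", "s1-b.example:27018", "s1-c.example:27018"],
}
commands = shard_setup_commands(example_shards, "pulse_db", "metrics", "host_id")
for command in commands:
    print(command)
```

With PyMongo, each of these documents could be sent through a client connected to a mongos router using `client.admin.command(command)`. That step is left out here so the sketch runs without a cluster.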

Workflow Overview

Ingestion: Data is collected and ingested via the Pulse "Streaming" service, which acts as a data ingestor. The router facilitates interaction between applications and the MongoDB cluster, presenting the entire cluster as if it were a single, standalone MongoDB instance.

Querying: Data retrieval is executed through the router, which identifies the appropriate shard locations from the config servers based on the executed query.
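
The routing decision described above can be sketched with a toy model. This is not MongoDB's implementation: the `ToyRouter` class, the split points, and the one-chunk-per-shard layout are simplifying assumptions meant only to show the difference between a query that includes the shard key (sent to one shard) and one that does not (broadcast to all shards).

```python
import bisect

class ToyRouter:
    """Toy model of range-based routing; not MongoDB's actual implementation."""

    def __init__(self, split_points, shard_names):
        # split_points [100, 200] define chunks (-inf,100), [100,200), [200,+inf),
        # with exactly one chunk per shard in this simplified model.
        assert len(shard_names) == len(split_points) + 1
        self.split_points = split_points
        self.shard_names = shard_names

    def shard_for(self, key):
        # bisect_right returns the index of the chunk whose range contains the key.
        return self.shard_names[bisect.bisect_right(self.split_points, key)]

    def route(self, query):
        # A query that includes the shard key targets a single shard;
        # otherwise the router has to broadcast to every shard.
        if "shard_key" in query:
            return [self.shard_for(query["shard_key"])]
        return list(self.shard_names)

router = ToyRouter([100, 200], ["shardA", "shardB", "shardC"])
print(router.route({"shard_key": 150}))  # targeted query
print(router.route({"status": "ok"}))    # broadcast query
```

In a real cluster the chunk-to-shard mapping lives on the config servers and is cached by the router; here it is a plain in-memory list.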

Microservices Impact

The integration affects every microservice that relies on MongoDB, primarily ad-streaming (Pulse data ingestor), ad-graphql (Pulse UI), and accelo (Pulse CLI).

Potential Risks and Solutions

- Complexity: A sharded environment is considerably more complex to manage than a standalone deployment. Solution: Mitigate the risk through thorough planning, comprehensive training, and robust documentation.

- Failure Recovery: Recovery from failures within a sharded setup may necessitate coordinated efforts. A well-defined disaster recovery plan is essential to minimize the risk of data loss.

- Shard Failure: Hardware malfunctions, network disruptions, or software issues can lead to shard failures, risking data unavailability and loss. Solution: Utilizing replica sets for each shard enhances availability and facilitates automatic failover.

- Query Routing Overhead: Accessing data across multiple shards can introduce latency, affecting performance. Solution: Optimize query performance by selecting efficient shard keys, creating appropriate indexes, and employing zone sharding to direct queries to specific shards, thus minimizing overhead.
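
Why shard key choice matters can be shown with a small simulation. The sketch below compares a ranged key against a hashed key for monotonically increasing values such as timestamps or counters. The three-shard layout, the split points, and the use of MD5 as a stand-in hash are assumptions for illustration and are not taken from the Pulse deployment.

```python
import hashlib
from collections import Counter

SHARDS = 3

def ranged_shard(key, split_points):
    """Ranged sharding: the key's position among the split points picks the shard."""
    return sum(key >= point for point in split_points)

def hashed_shard(key):
    """Hashed sharding stand-in: MD5 is used here for illustration only."""
    digest = hashlib.md5(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % SHARDS

# Monotonically increasing keys. The split points were chosen from older data,
# so every new key falls past the last split point.
new_keys = range(10_000, 13_000)
ranged = Counter(ranged_shard(k, [3_000, 6_000]) for k in new_keys)
hashed = Counter(hashed_shard(k) for k in new_keys)
print("ranged:", dict(ranged))
print("hashed:", dict(hashed))
```

With the ranged key, every new write lands on the last shard, while the hashed key spreads writes across all three. The trade-off is that range queries on a hashed key can no longer be targeted at a single shard.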

Benchmarking Results

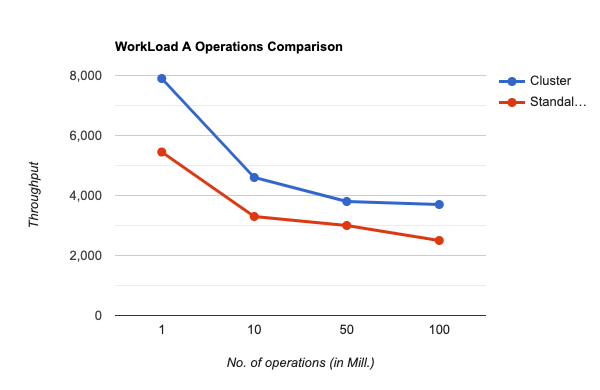

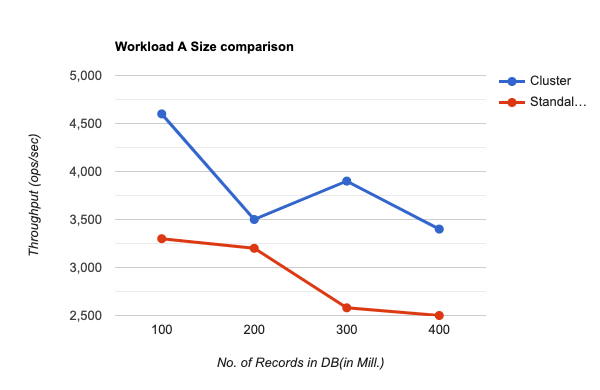

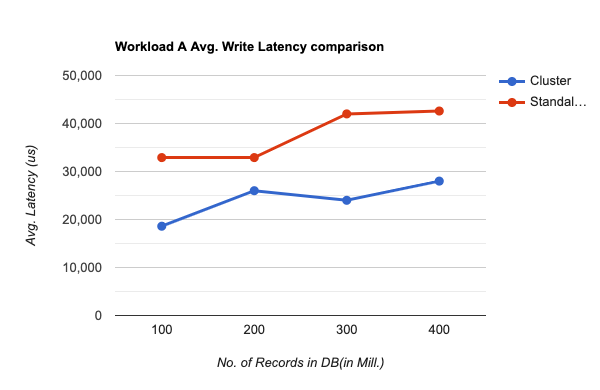

Tool Information: YCSB (Yahoo! Cloud Serving Benchmark) is a widely used open-source benchmarking tool for measuring and comparing the performance of data storage solutions, including NoSQL databases, key-value stores, and file systems.

Benchmarks: The evaluated workload is an equal mix of reads and writes, and the results are compared in terms of throughput and latency.
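
For readers unfamiliar with the two metrics, the sketch below shows how throughput and latency figures can be derived from per-operation timings. YCSB reports these values itself; this code is not part of YCSB, and the sample latencies are made up purely to show the arithmetic.

```python
import statistics

def summarize(latencies_ms, elapsed_s):
    """Summarize one benchmark run from per-operation latencies.

    latencies_ms: latency of each completed operation, in milliseconds
    elapsed_s: wall-clock duration of the run, in seconds
    """
    ordered = sorted(latencies_ms)
    # Nearest-rank 95th percentile, computed with integer arithmetic:
    # rank = ceil(0.95 * n), converted to a zero-based index.
    p95_index = max(0, -(-95 * len(ordered) // 100) - 1)
    return {
        "throughput_ops_per_s": len(ordered) / elapsed_s,
        "mean_latency_ms": statistics.mean(ordered),
        "p95_latency_ms": ordered[p95_index],
    }

# Hypothetical sample: 10 operations completed in 2 seconds.
sample = [1.0, 1.2, 0.9, 1.1, 1.0, 5.0, 1.3, 0.8, 1.0, 1.2]
print(summarize(sample, elapsed_s=2.0))
```

The single slow operation in the sample barely moves the mean but dominates the 95th percentile, which is why latency comparisons usually look at percentiles as well as averages.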

Throughput vs Operations

Throughput vs Size

Write Latency vs Size

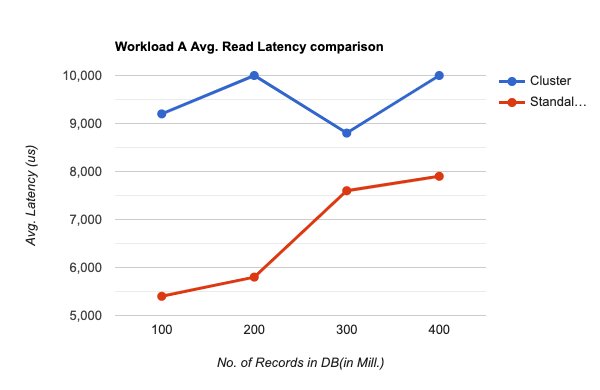

Read Latency vs Size

Response Time on Pulse Dataset

Insights

- With a large number of records, the sharded cluster delivers higher throughput than the standalone deployment, mainly because its write latency is lower.

- The sharded cluster also replicates data while maintaining performance equal to or better than the standalone deployment.

- With fewer records, the standalone deployment performs better than the sharded cluster.

Results

- The standalone deployment is better suited to smaller-scale applications or scenarios with lower data volumes.

- As workloads and data sizes grow, however, the standalone deployment's limits in scalability and throughput become apparent, making it inefficient for large workloads.

- The sharded deployment, by contrast, scales better: distributing data across multiple shards lets it keep up with growing datasets and user demand. MongoDB sharding scales horizontally to handle large workloads, offering higher throughput, lower latency, and improved fault tolerance.

- Looking ahead, adding new shards to the cluster could address performance bottlenecks by distributing data more evenly across shards, thereby enhancing performance (as indicated in trend 5 by a black circle).