ADOC provides data quality monitoring for your data lake and warehouse, so that you can verify that business decisions rest on high-quality data. Its tools for assessing data quality in a data catalog help ensure that critical data sources are not neglected. Analysts, data scientists, and developers alike can rely on ADOC to monitor data flow in the warehouse or data lake and to help prevent data loss.

The adoc-java-sdk is the Java client used to call the ADOC APIs.

Features

The following features are provided by adoc-java-sdk:

Prerequisites

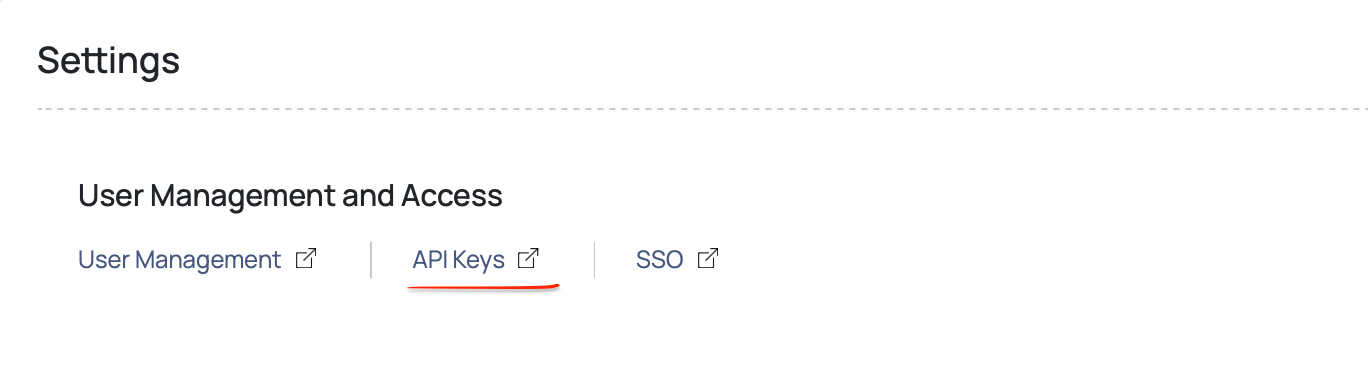

API keys are essential for authentication when making calls to ADOC.

Creating an API Key

You can create API keys in the API keys section of Admin Central in the ADOC UI.

Before making calls to the Java SDK, ensure you have the necessary API keys and the ADOC Server URL readily available. These credentials are essential for establishing the connection and accessing ADOC services.
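One way to keep these credentials out of source code is to read them from the environment and validate them before the client is built. The sketch below is only an illustration of that idea: the class `AdocConnectionSettings` and the variable names `ADOC_BASE_URL`, `ADOC_ACCESS_KEY`, and `ADOC_SECRET_KEY` are assumptions made for this example and are not part of adoc-java-sdk.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical holder for the three connection values; not part of adoc-java-sdk. */
final class AdocConnectionSettings {
    final String baseUrl;
    final String accessKey;
    final String secretKey;

    private AdocConnectionSettings(String baseUrl, String accessKey, String secretKey) {
        this.baseUrl = baseUrl;
        this.accessKey = accessKey;
        this.secretKey = secretKey;
    }

    /** Reads the settings from a map such as System.getenv(); the key names are assumptions. */
    static AdocConnectionSettings from(Map<String, String> env) {
        String baseUrl = require(env, "ADOC_BASE_URL");
        String accessKey = require(env, "ADOC_ACCESS_KEY");
        String secretKey = require(env, "ADOC_SECRET_KEY");
        URI uri = URI.create(baseUrl); // throws IllegalArgumentException on malformed input
        if (uri.getScheme() == null || uri.getHost() == null) {
            throw new IllegalArgumentException("ADOC_BASE_URL must be an absolute URL: " + baseUrl);
        }
        return new AdocConnectionSettings(baseUrl, accessKey, secretKey);
    }

    /** Fails fast when a setting is missing or blank. */
    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing required setting: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        // Sample values stand in for System.getenv() so the sketch runs anywhere.
        Map<String, String> env = new HashMap<>();
        env.put("ADOC_BASE_URL", "https://adoc.example.com");
        env.put("ADOC_ACCESS_KEY", "sample-access-key");
        env.put("ADOC_SECRET_KEY", "sample-secret-key");
        AdocConnectionSettings settings = from(env);
        System.out.println("Validated settings for " + settings.baseUrl);
    }
}
```

The validated values would then be passed to the client factory in place of hard-coded strings.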

baseUrl - URL of the ADOC Server

accessKey - API access key generated from Adoc

secretKey - API secret key generated from Adoc

    import acceldata.adoc.client.AdocClient;

    String baseUrl = "URL of the Adoc server";
    String accessKey = "API access key generated from Adoc";
    String secretKey = "API secret key generated from Adoc";
    AdocClient adocClient = AdocClient.create(baseUrl, accessKey, secretKey).build();

Installation

Maven

Add the following repository URLs to the <repositories> section of your project's pom.xml file.

    <repositories>
        <repository>
            <id>central</id>
            <url>https://repo.maven.apache.org/maven2</url>
        </repository>
        <repository>
            <id>adoc-central</id>
            <url>https://repo1.acceldata.dev/repository/adoc-central</url>
        </repository>
    </repositories>

Add the following dependency under the <dependencies> section of your project's pom.xml file.

    <dependency>
        <groupId>io.acceldata.adoc</groupId>
        <artifactId>java-sdk</artifactId>
        <version>0.0.1</version>
    </dependency>

Gradle

Add the following repository URLs to the repositories section of your project's build.gradle file.

    repositories {
        mavenCentral()
        maven {
            url = uri("https://repo1.acceldata.dev/repository/adoc-central")
        }
    }

Add the below dependency under the dependencies section of your project's build.gr

Asset APIs

The Acceldata Java SDK provides a variety of methods to fetch detailed asset information, ensuring the efficiency of your data management processes.

Obtaining Asset Details Using Asset ID or Asset UID

With the SDK, you can effortlessly obtain specific asset details by providing an asset identifier, be it the Asset ID or Asset UID.

    // Retrieve an Asset by its ID
    String assetId = "965138";
    Asset assetById = adocClient.getAsset(assetId);

    // Retrieve an Asset by its UID
    String assetUid = "java_sdk_snowflake_ds.CUSTOMERS_DATABASE.JAVA_SDK.DEPT_INFO";
    Asset assetByUid = adocClient.getAsset(assetUid);

Discovering all Asset Types within ADOC

One of the key functionalities of ADOC (Acceldata Data Observability Cloud) is the ability to discover all asset types within your data environment.

    List<AssetType> assetTypes = adocClient.getAllAssetTypes();

Exploring Asset Attributes: Tags, Labels, Metadata, and Sample Data

You can retrieve an asset's metadata, tags, labels, and sample data to get a fuller picture of your data assets.

    // Retrieve the asset object using its unique identifier (Asset UID)
    String assetUid = "java_sdk_snowflake_ds.CUSTOMERS_DATABASE.JAVA_SDK.DEPT_INFO";
    Asset asset = adocClient.getAsset(assetUid);

    // Get metadata of the asset
    Metadata metadata = asset.getMetadata();

    // Retrieve all tags associated with the asset
    List<AssetTag> assetTags = asset.getTags();

    // Retrieve asset labels
    List<AssetLabel> assetLabels = asset.getLabels();

    // Retrieve sample data for the asset
    Map<String, Object> sampleData = asset.sampleData();

You can also add tags, labels, and custom metadata using the SDK, which makes it easier to filter and organize assets.

    // Add a new tag to the asset
    AssetTag assetTag = asset.addTag("sdk-tag-3");

    // Add asset labels
    List<AssetLabel> assetLabels = new ArrayList<>();
    // Define and add the first asset label
    assetLabels.add(AssetLabel.builder().key("test12").value("shubh12").build());
    // Define and add the second asset label
    assetLabels.add(AssetLabel.builder().key("test22").value("shubh32").build());
    // Add the asset labels and capture the response
    List<AssetLabel> addAssetLabelsResponse = asset.addLabels(assetLabels);

    // Add custom metadata
    List<CustomAssetMetadata> customAssetMetadataList = new ArrayList<>();
    // Define the first custom metadata entry
    CustomAssetMetadata customAssetMetadata1 = CustomAssetMetadata.builder().key("testcm1").value("shubhcm1").build();
    // Define the second custom metadata entry
    CustomAssetMetadata customAssetMetadata2 = CustomAssetMetadata.builder().key("testcm2").value("shubhcm2").build();
    // Add both custom metadata entries to the list
    customAssetMetadataList.add(customAssetMetadata1);
    customAssetMetadataList.add(customAssetMetadata2);
    // Add the custom metadata and capture the response in the 'metadata' variable
    Metadata metadata = asset.addCustomMetadata(customAssetMetadataList);

Initiating Asset Profiling and Sampling

Spark jobs executed on Livy enable the profiling and sampling of crawled assets.

    // Profile an asset, get the profile request details, and cancel the profile.
    // assetProfile is the object returned by the startProfile call
    Profile assetProfile = asset.startProfile(ProfilingType.FULL);

    // Get the asset profiling status
    assetProfile.getStatus();

    // Cancel profiling
    ProfileCancellationResult cancelResponse = assetProfile.cancel();

    // Latest profile status
    ProfileRequest latestProfileStatus = asset.getLatestProfileStatus();

    // Latest profile status using the AdocClient
    adocClient.getProfileStatus(assetIdentifier, profileId);

In summary, the Acceldata Java SDK equips you with a diverse range of capabilities to streamline and enhance your data asset management. Whether you're retrieving asset details via IDs or UIDs, exploring different asset types, examining attributes such as tags, labels, and metadata, or initiating profiling and sampling tasks, the SDK simplifies your data management journey.

With the Acceldata Java SDK, you can seamlessly fetch specific asset information, add tags, labels, and custom metadata, and delve into asset profiling and sampling. This versatile toolkit enables you to unlock the full potential of your data assets, ensuring that your data management tasks are optimized, and enhancing your overall data-driven operations.
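To illustrate how labels support filtering, the following sketch selects assets by a label key and value in memory. It is a minimal illustration under stated assumptions: `LabeledAsset` and `uidsWithLabel` are hypothetical names invented for this example, and the sketch does not call adoc-java-sdk or represent how ADOC filters assets on the server.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class LabelFilterSketch {

    /** Hypothetical stand-in for an asset: a UID plus key/value labels. */
    static final class LabeledAsset {
        final String uid;
        final Map<String, String> labels = new LinkedHashMap<>();

        LabeledAsset(String uid) {
            this.uid = uid;
        }

        /** Adds or overwrites a label and returns this asset for chaining. */
        LabeledAsset label(String key, String value) {
            labels.put(key, value);
            return this;
        }
    }

    /** Returns the UIDs of assets whose label `key` equals `value`, in input order. */
    static List<String> uidsWithLabel(List<LabeledAsset> assets, String key, String value) {
        List<String> matches = new ArrayList<>();
        for (LabeledAsset asset : assets) {
            if (value.equals(asset.labels.get(key))) {
                matches.add(asset.uid);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        List<LabeledAsset> assets = Arrays.asList(
                new LabeledAsset("ds.db.schema.dept_info").label("team", "finance"),
                new LabeledAsset("ds.db.schema.orders").label("team", "sales"));
        System.out.println(uidsWithLabel(assets, "team", "finance"));
    }
}
```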

Data Sources APIs

This section outlines how to manage and crawl data sources within Acceldata. ADOC supports crawling more than fifteen different data sources. The data source APIs below cover acquiring a data source by name or ID, retrieving data sources of a specific type, starting crawlers for a given data source or assembly name, checking the crawling status, and deleting a data source using the Java SDK. The accompanying code snippets illustrate these operations through both DataSource objects and the ADOC client.

Obtaining a DataSource by its ID or Name

To get data sources by their name or IDs use the following code examples for each method:

    // Either assemblyId or assemblyName is needed as an assemblyIdentifier.
    // Pass properties as true to get the assembly properties
    String dataSourceName = "java_sdk_snowflake_ds";
    boolean fetchAssemblyPropertiesFlag = true;

    // 1. Get data source by name
    DataSource dataSourceByName = adocClient.getDataSource(dataSourceName, Optional.of(fetchAssemblyPropertiesFlag));
    System.out.println(dataSourceByName);

    // 2. Get data source by ID
    Long dataSourceId = dataSourceByName.getId();
    DataSource dataSourceById = adocClient.getDataSource(String.valueOf(dataSourceId), Optional.of(fetchAssemblyPropertiesFlag));
    System.out.println(dataSourceById);

Retrieve All Data Sources

This section explains how to retrieve the available data sources. The snippet below passes the optional AssetSourceType argument, which restricts the returned list to data sources of that type (AWS_S3 in this example).

    AssetSourceType assetSourceType = AssetSourceType.AWS_S3;
    List<DataSource> dataSources = adocClient.getDataSources(Optional.of(assetSourceType));

Initiate Crawler for a Specified DataSource or Assembly Name

To start a crawler for a given data source or assembly name, use either the data source object or the ADOC client, as shown in the following snippet:

    // Using the DataSource object
    dataSource.startCrawler();

    // or, using the AdocClient
    adocClient.startCrawler(assemblyName);

Retrieve Crawler Status for a Specified DataSource or Assembly Name

To check the status of the crawling process for a specific data source or assembly name, use either the data source object or the ADOC client, as shown in the following snippet:

    // Using the DataSource object
    CrawlerStatus crawlerStatus = dataSource.getCrawlerStatus();

    // or, using the AdocClient
    adocClient.getCrawlerStatus(assemblyName);

Deleting a DataSource

Use the following code snippet to delete a particular data source within the Acceldata environment:

    adocClient.deleteDataSource(dataSourceId);

By following this guide, you can efficiently manage data sources and optimize your data operations in the Acceldata platform.

Pipeline APIs

The Acceldata SDK provides robust support for a diverse array of pipeline APIs, covering everything from pipeline creation to job and span addition, as well as the initiation of pipeline runs and more. With capabilities extending throughout the entire span lifecycle, the SDK exercises complete control over the entire pipeline infrastructure, generating various events as needed.

Each pipeline encapsulates the ETL (Extract, Transform, Load) process, constituting Asset nodes and associated Jobs. The collective definition of the pipeline shapes the lineage graph, providing a comprehensive view of data assets across the system.

Within this framework, a Job Node, or Process Node, acts as a pivotal component, executing specific tasks within the ETL workflow. These jobs receive inputs from various assets or other jobs and produce outputs that seamlessly integrate into additional assets or jobs. ADOC harnesses this network of job definitions within the workflow to construct lineage and meticulously monitors version changes for the pipeline, ensuring a dynamic and efficient data processing environment.
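The way job inputs and outputs add up to a lineage graph can be sketched with a small adjacency map. This is an illustration only: `LineageSketch`, `addJob`, and `reachableFrom` are hypothetical names chosen for this example, and the code does not reflect how ADOC stores or computes lineage internally.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical in-memory lineage graph built from job definitions. */
final class LineageSketch {
    // node UID (asset or job) -> UIDs of its direct downstream nodes
    private final Map<String, Set<String>> downstream = new LinkedHashMap<>();

    /** Registers a job: every input feeds the job, and the job feeds every output. */
    void addJob(String jobUid, List<String> inputs, List<String> outputs) {
        for (String input : inputs) {
            edge(input, jobUid);
        }
        for (String output : outputs) {
            edge(jobUid, output);
        }
    }

    private void edge(String from, String to) {
        downstream.computeIfAbsent(from, k -> new LinkedHashSet<>()).add(to);
        downstream.computeIfAbsent(to, k -> new LinkedHashSet<>());
    }

    /** Breadth-first list of every node reachable downstream of `start` (excluding `start`). */
    List<String> reachableFrom(String start) {
        List<String> order = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        seen.add(start);
        queue.add(start);
        while (!queue.isEmpty()) {
            String node = queue.remove();
            for (String next : downstream.getOrDefault(node, Collections.emptySet())) {
                if (seen.add(next)) {
                    order.add(next);
                    queue.add(next);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        LineageSketch lineage = new LineageSketch();
        lineage.addJob("source_data",
                Arrays.asList("ds.db.schema.table_1"), Arrays.asList("transform_data"));
        lineage.addJob("transform_data",
                Arrays.asList("source_data"), Arrays.asList("ds.db.schema.table_2"));
        System.out.println(lineage.reachableFrom("ds.db.schema.table_1"));
    }
}
```

Each job contributes edges from its inputs to itself and from itself to its outputs, so chaining jobs by UID yields an end-to-end path from source asset to target asset.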

Retrieve All Pipelines

All pipelines can be accessed through the Acceldata SDK.

    List<PipelineInfo> pipelineInfos = adocClient.getPipelines();

Creating a Pipeline

Creating a pipeline is a fundamental task in managing data workflows with the Acceldata SDK. To do so, construct a CreatePipeline object that holds the information required for pipeline creation. Two parameters are mandatory: the Uid (Unique Identifier) and the Name.

By specifying the Uid and Name, you provide unique identification and a recognizable label to the new pipeline. Once the CreatePipeline object is properly configured, it can be used as input to initiate the pipeline creation process, allowing you to efficiently set up and manage your data workflows within Acceldata.

    // Create a pipeline
    CreatePipeline createPipeline = CreatePipeline.builder()
            .uid("adoc_etl_pipeline")
            .name("ADOC ETL Pipeline")
            .description("ADOC ETL Pipeline")
            .build();
    Pipeline pipeline = adocClient.createPipeline(createPipeline);

Retrieve a Pipeline

To access a specific pipeline, you can provide either the pipeline UID (Unique Identifier) or the pipeline ID as a parameter for retrieval. This flexibility allows you to easily obtain the details of a desired pipeline.

    // Get a pipeline
    Pipeline pipeline = adocClient.getPipeline("adoc_etl_pipeline");

Deleting a Pipeline

You can initiate the deletion process by using the code snippet below:

    String identity = "Pass pipeline id or pipeline uid here";
    Pipeline pipeline = adocClient.getPipeline(identity);
    adocClient.deletePipeline(pipeline.getId());

Retrieve Pipeline Runs for a Pipeline

You can access all the pipeline runs associated with a specific pipeline. There are two ways to accomplish this:

- Using the pipeline object: pipeline.getRuns()
- Utilizing the AdocClient: adocClient.getPipelineRuns(pipeline.getId())

Both methods allow you to retrieve information about pipeline runs.

    // Using the pipeline object
    pipeline.getRuns();

    // Using the AdocClient
    List<PipelineRun> runs = adocClient.getPipelineRuns(pipeline.getId());

Initiating a Pipeline Run

A pipeline run signifies the execution of a pipeline. It's important to note that a single pipeline can be executed multiple times, with each execution, referred to as a "run," resulting in a new snapshot version. This process captures the evolving state of the data within the pipeline and allows for effective monitoring and tracking of changes over time.

To initiate a pipeline run, you can use the Pipeline object. When creating a run, there are two optional parameters that you can include:

- optionalContextData: Additional context data that can be passed.
- optionalContinuationId: A continuation identifier, which is also an optional parameter. It is an identifier used to link multiple ETL pipelines together as a single pipeline in the ADOC UI.

Here's an example of how to create a pipeline run using the Acceldata SDK:

    Optional<Map<String, Object>> optionalContextData = Optional.empty();
    Optional<String> optionalContinuationId = Optional.empty();
    PipelineRun pipelineRun = pipeline.createPipelineRun(optionalContextData, optionalContinuationId);

Getting the Latest Run of the Pipeline

You can obtain the latest run of a pipeline using either the Pipeline object or the AdocClient from the adoc-java-sdk. Here are two ways to achieve this:

    // Using the pipeline object
    PipelineRun latestRun = pipeline.getLatestPipelineRun();

    // Using the AdocClient
    PipelineRun latestRunViaClient = adocClient.getLatestPipelineRun(pipelineId);

Getting a Particular Run of the Pipeline

You can retrieve a particular run of a pipeline by passing the unique pipeline run ID as a parameter.

    // Using the Pipeline object
    PipelineRun pipelineRun = pipeline.getRun(25231L);
    adocClient.getRun(25231L);

    // Using the AdocClient
    PipelineRun pipelineRunViaClient = adocClient.getPipelineRun(25231L);

Updating a Pipeline Run

The updatePipelineRun method is responsible for updating the attributes of a pipeline run, such as its result, status, and optional context data. It accepts the following parameters:

- result: A PipelineRunResult indicating the updated result of the pipeline run.
- status: A PipelineRunStatus indicating the updated status of the pipeline run.
- optionalContextData: An optional map that can be used to pass additional context data for the update.

    PipelineRunResult result = PipelineRunResult.SUCCESS;
    PipelineRunStatus status = PipelineRunStatus.COMPLETED;
    Optional<Map<String, Object>> optionalContextData = Optional.empty();
    pipelineRun.updatePipelineRun(result, status, optionalContextData);

Create Job Using Pipeline

When creating a job using a Pipeline object, there are mandatory parameters that must be provided, including uid, name, and pipelineRunId. The adoc-java-sdk library offers the CreateJob class, which serves as the parameter for job creation. The required parameters are as follows:

- uid: A unique identifier for the job.
- name: The name of the job.
- pipelineRunId: The ID of the associated pipeline run.

Several other parameters are available to define and configure the job. Below is a description of these parameters:

| Parameter | Description |
|---|---|
| uid | Unique ID of the job. It is a mandatory parameter. |
| name | Name of the job. It is a mandatory parameter. |
| pipelineRunId | The pipeline run's ID is required when you want to add a job. This is mandatory if a job is made using a pipeline object. However, it's optional if the job is created using a pipeline run. |
| description | Description of the job. It is an optional parameter. |
| inputs | Job input can take various forms. It might be the uid of an asset, specified using the asset_uid and source parameters in the Node object. Alternatively, it could be the uid of another job, specified using the jobUid parameter in the Node object. |
| outputs | Job output comes in different forms. It may be the uid of an asset, specified through the asset_uid and source parameters in the Node object. Alternatively, it could be the uid of another job, specified through the jobUid parameter in the Node object. |
| meta | It is the metadata of the job and is an optional parameter. |
| context | It is the context of the job and is an optional parameter. |
| boundedBySpan | This parameter is of boolean value. If the job needs to be bound by a span, this must be set to true. The default value for this parameter is false. It is an optional parameter. |
| spanUid | This is the new span's uid, which is a string. If the value of bounded by span i.e. boundedBySpan is set to true, this parameter is mandatory. |
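The mandatory and conditional rules in the table can be expressed as a small validating builder. The sketch below is hypothetical: `JobSpecSketch` is not the SDK's CreateJob class, and whether the SDK performs such checks on the client side is not stated in this guide. It only shows the rules that uid and name are required and that spanUid becomes required once boundedBySpan is true.

```java
/** Hypothetical job definition that enforces the rules from the parameter table. */
final class JobSpecSketch {
    final String uid;
    final String name;
    final boolean boundedBySpan;
    final String spanUid;

    private JobSpecSketch(Builder b) {
        this.uid = b.uid;
        this.name = b.name;
        this.boundedBySpan = b.boundedBySpan;
        this.spanUid = b.spanUid;
    }

    static Builder builder() {
        return new Builder();
    }

    static final class Builder {
        private String uid;
        private String name;
        private boolean boundedBySpan; // defaults to false, as in the table
        private String spanUid;

        Builder uid(String value) { this.uid = value; return this; }
        Builder name(String value) { this.name = value; return this; }
        Builder boundedBySpan(boolean value) { this.boundedBySpan = value; return this; }
        Builder spanUid(String value) { this.spanUid = value; return this; }

        /** Validates the mandatory fields before creating the immutable spec. */
        JobSpecSketch build() {
            if (isBlank(uid)) {
                throw new IllegalStateException("uid is mandatory");
            }
            if (isBlank(name)) {
                throw new IllegalStateException("name is mandatory");
            }
            if (boundedBySpan && isBlank(spanUid)) {
                throw new IllegalStateException("spanUid is mandatory when boundedBySpan is true");
            }
            return new JobSpecSketch(this);
        }

        private static boolean isBlank(String value) {
            return value == null || value.trim().isEmpty();
        }
    }

    public static void main(String[] args) {
        JobSpecSketch job = builder()
                .uid("source_data")
                .name("Source Data")
                .boundedBySpan(true)
                .spanUid("source_data.span")
                .build();
        System.out.println(job.uid + " bound by span " + job.spanUid);
    }
}
```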

    import acceldata.adoc.client.models.job.Node;

    // Fetch the pipeline created with the UID "adoc_etl_pipeline"
    Pipeline pipeline = adocClient.getPipeline("adoc_etl_pipeline");

    // Create a run for the pipeline, to be consumed during job creation
    PipelineRun pipelineRun = pipeline.createPipelineRun(Optional.empty(), Optional.empty());

    // Creating a job
    // 1. Construct the job inputs
    List<Node> inputs = Arrays.asList(
            Node.builder().asset_uid("datasource-name.database.schema.table_1").source("MySql").build());

    // 2. Construct the job outputs
    List<Node> outputs = Arrays.asList(Node.builder().jobUid("transform_data").build());

    // 3. Construct the job metadata
    JobMetadata jobMetadata = JobMetadata.builder()
            .owner("Jake")
            .team("Backend")
            .codeLocation("https://github.com/")
            .build();

    // 4. Construct a context map
    Map<String, Object> contextMap = new HashMap<>();
    contextMap.put("key21", "value21");

    // 5. Get the latest run of the pipeline, to be passed to the CreateJob object
    PipelineRun latestRun = pipeline.getLatestPipelineRun();

    // 6. Set up the CreateJob parameters
    CreateJob createJob = CreateJob.builder()
            .uid("source_data")
            .name("Source Data")
            .description("Source Data Job")
            .inputs(inputs)
            .outputs(outputs)
            .meta(jobMetadata)
            .context(contextMap)
            .boundedBySpan(true)
            .spanUid("source_data.span")
            .pipelineRunId(latestRun.getId())
            .build();

    // 7. Create the job
    acceldata.adoc.client.models.node.Node jobNode = pipeline.createJob(createJob);

Spans

Hierarchical spans are a way to organize and track tasks within pipeline runs for observability. Each pipeline run has its own set of spans. Spans are like containers for measuring how tasks are performed. They can be very detailed, allowing us to keep a close eye on specific jobs and processes in the pipeline.

Spans can also be connected to jobs, which help us monitor when jobs start, finish, or encounter issues. Spans keep track of when a task begins and ends, giving us insight into how long each task takes.

The Acceldata SDK makes it easy to create new pipeline runs and add spans. It also helps us generate custom events and metrics for observing the performance of pipeline runs. In simple terms, hierarchical spans and the Acceldata SDK give us the tools to closely monitor and analyze the execution of tasks in our pipelines.
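The idea of hierarchical spans that record start and end times can be sketched with a small tree structure. This is a simplified model under stated assumptions: `SpanSketch` and its methods are hypothetical names for this example, timestamps are passed in explicitly to keep the example deterministic, and nothing here calls the SDK's SpanContext.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical span node: a UID, a parent link, children, and start/end timestamps. */
final class SpanSketch {
    final String uid;
    final SpanSketch parent;
    final List<SpanSketch> children = new ArrayList<>();
    private final Instant startedAt;
    private Instant endedAt;

    private SpanSketch(String uid, SpanSketch parent, Instant startedAt) {
        this.uid = uid;
        this.parent = parent;
        this.startedAt = startedAt;
    }

    /** Creates the top-level span of a run. */
    static SpanSketch root(String uid, Instant now) {
        return new SpanSketch(uid, null, now);
    }

    /** Creates a span nested under this one and records it as a child. */
    SpanSketch createChild(String childUid, Instant now) {
        SpanSketch child = new SpanSketch(childUid, this, now);
        children.add(child);
        return child;
    }

    /** A span without a parent is the root of its hierarchy. */
    boolean isRoot() {
        return parent == null;
    }

    /** Marks the span as finished; a span can be ended only once. */
    void end(Instant now) {
        if (endedAt != null) {
            throw new IllegalStateException("span already ended: " + uid);
        }
        endedAt = now;
    }

    /** Elapsed time between start and end; only defined for ended spans. */
    Duration duration() {
        if (endedAt == null) {
            throw new IllegalStateException("span still open: " + uid);
        }
        return Duration.between(startedAt, endedAt);
    }

    public static void main(String[] args) {
        Instant t0 = Instant.parse("2024-01-01T00:00:00Z");
        SpanSketch root = SpanSketch.root("adoc_etl_pipeline_root_span", t0);
        SpanSketch child = root.createChild("source_data.span", t0.plusSeconds(1));
        child.end(t0.plusSeconds(4));
        root.end(t0.plusSeconds(5));
        System.out.println(child.uid + " took " + child.duration().getSeconds() + "s");
        System.out.println(root.uid + " took " + root.duration().getSeconds() + "s");
    }
}
```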

Creating Span for a Pipeline Run

The createSpan function, used within the context of a pipeline run, offers the following parameters:

| Parameter | Description |
|---|---|
| uid | This parameter represents the unique identifier (UID) for the newly created span. It is a mandatory field, and each span should have a distinct UID. |
| associatedJobUids | This parameter accepts a list of job UIDs that should be associated with the span. In the case of creating a span for a pipeline run, an empty list can be provided since spans are typically associated with jobs. |
| optionalContextData | This parameter is a dictionary that allows you to provide custom key-value pairs containing context information relevant to the span. It can be used to add additional details or metadata associated with the span. |

    // Define associated job UIDs
    List<String> associatedJobUids = Arrays.asList("jobUid1");

    // Create a context map
    Map<String, Object> contextMap = new HashMap<>();
    contextMap.put("key21", "value21");

    // Wrap the context map in an Optional
    Optional<Map<String, Object>> optionalContextData = Optional.of(contextMap);

    // Create a new span context within the pipeline run
    SpanContext spanContext = pipelineRun.createSpan("adoc_etl_pipeline_root_span", optionalContextData, associatedJobUids);

Obtaining the Root Span for a Pipeline Run

In this code snippet, the getRootSpan method is used to retrieve the root span associated with a specific pipeline run. The root span represents the top-level span within the execution hierarchy of the pipeline run. It provides essential context and observability data for the entire run. The resulting SpanContext object, stored in the rootSpanContext variable, represents the root span and can be used for further analysis and observability purposes. This code simplifies the process of accessing critical information about the pipeline run's top-level span.

    SpanContext rootSpanContext = pipelineRun.getRootSpan();

Checking if the Current Span is a Root Span

A root span is the top-level span that encompasses the entire task or process hierarchy.

The isRoot() method is used to make this determination. If the span is a root span, the isRootSpan variable is set to true, indicating its top-level status within the span hierarchy. This check provides insights into the context of the current span, which can be valuable for decision-making or monitoring in complex workflows.

    boolean isRootSpan = spanContext.isRoot();

Creating Child Span

The createChildSpan function within SpanContext is used to create a hierarchical structure of spans by placing a span under another span. It offers the following parameters:

| Parameter | Description |
|---|---|
| uid | This parameter represents the unique identifier (UID) for the newly created child span. It is a mandatory field, and each child span should have a distinct UID. |
| optionalContextData | This parameter is a dictionary that allows you to provide custom key-value pairs containing context information relevant to the child span. It can be used to add additional details or metadata associated with the span. |
| associatedJobUids | This parameter accepts a list of job UIDs that should be associated with the child span. This association allows you to link the child span with specific jobs. |

    SpanContext spanContext = pipelineRun.getRootSpan();
    Optional<Map<String, Object>> optionalContextData = Optional.empty();
    List<String> associatedJobUids = Arrays.asList("source_data");
    spanContext.createChildSpan("source_data.span", optionalContextData, associatedJobUids);

Retrieving Spans for a Given Pipeline Run

This code snippet demonstrates how to retrieve all the spans associated with a specific pipeline run. It begins by obtaining a reference to a PipelineRun object using the getRun method on a Pipeline object and providing the pipeline run's ID as a parameter. Subsequently, it calls the getSpans method on the pipelineRun object, which returns a list of Span objects related to the specified pipeline run. This functionality is valuable for accessing and analyzing span data to monitor the execution of the pipeline run.

    // Get all the spans for a given pipeline run
    PipelineRun pipelineRun = pipeline.getRun(25232L);
    List<Span> spans = pipelineRun.getSpans();

Sending Span Events

The SDK has the capability to send both custom and standard span events at different stages of a span's lifecycle. These events play a crucial role in gathering metrics related to pipeline run observability.

    // Step 1: Prepare context data for the event
    Map<String, Object> contextData = new HashMap<>();
    contextData.put("client_time", DateTime.now()); // Capture client time
    contextData.put("row_count", 100);              // Include the row count

    // Step 2: Send a generic event for the span
    // Create a generic event named "source_data.span_start" with the context data
    GenericEvent genericEvent = GenericEvent.createEvent("source_data.span_start", contextData);
    // Send the event to the child span (assumed to be represented by childSpanContext)
    childSpanContext.sendEvent(genericEvent);

The following code snippet demonstrates the abort and failed methods of a SpanContext, which send events for aborted or failed spans. It begins by preparing context data such as the client time and row count. Including context data in these events helps with tracking and diagnosing span failures and aborts.

    // Step 1: Prepare context data for the event
    Map<String, Object> contextData = new HashMap<>();
    contextData.put("client_time", DateTime.now()); // Capture client time
    contextData.put("row_count", 100);              // Include the row count

    // Step 2: Fail the span
    // Send a failed event for the span and include the context data
    spanContext.failed(Optional.of(contextData));

    // or

    // Step 2: Abort the span
    // Send an abort event for the span and include the context data
    spanContext.abort(Optional.of(contextData));

Ending the Span

You can end a span by calling the end method on the SpanContext object and passing the optionalContextData.

    // Context data
    Map<String, Object> contextData = new HashMap<>();
    contextData.put("client_time", DateTime.now());
    contextData.put("row_count", 100);
    spanContext.end(Optional.of(contextData));

In conclusion, the Acceldata SDK equips users with powerful tools to manage and monitor data pipelines effectively. It enables seamless pipeline creation, job management, and hierarchical span organization, enhancing observability and control over data workflows. With the ability to generate custom events, the SDK offers a comprehensive solution for optimizing pipeline operations and ensuring data integrity.

Policy APIs

This section provides comprehensive information on the APIs and methods available for managing policies in your data management workflow. From a data observability perspective, the Policy APIs serve as a foundation for maintaining data integrity, ensuring data quality, and meeting compliance requirements. By harnessing these APIs, organizations can proactively detect and resolve data issues, ensuring the reliable and accurate flow of data through their systems, ultimately contributing to improved data observability and trust in data-driven decision-making.

Retrieving a Policy Based on Its Identifier

This API allows you to find a specific policy using its identifier, which can be either the policy ID or policy name. Whether you have the ID or name, you can efficiently retrieve the desired policy. This simplicity streamlines policy access within your application.

    // Retrieve a policy by its ID
    RuleResource policyById = adocClient.getPolicy("153477");

    // Retrieve a policy by its name
    RuleResource policyByName = adocClient.getPolicy("redshift_automation_views_dq_null_check_01");

Retrieving a Policy Based on Type and Identifier

This API allows you to find a specific policy based on its type and identifier. The identifier can be either the policy ID or policy name, offering flexibility in searching for policies.

    // Retrieve a data quality policy by its ID
    RuleResource policyById = adocClient.getPolicy(PolicyType.DATA_QUALITY, "153477");

    // Retrieve a data quality policy by its name
    RuleResource policyByName = adocClient.getPolicy(PolicyType.DATA_QUALITY, "redshift_automation_views_dq_null_check_01");

Executing a Policy

The Acceldata SDK provides a utility function called executePolicy that allows you to execute policies both synchronously and asynchronously. When you use this function, it returns an object on which you can call getResult and getStatus to obtain the execution's result and status.

The required parameters for using the executePolicy function typically include the following:

| Parameter | Description |
|---|---|
| policyType | This parameter is specified using an enum and is a required parameter. It accepts constant values as PolicyType.DATA_QUALITY or PolicyType.RECONCILIATION. It specifies the type of policy to be executed. |
| policyId | This is a required string parameter. It specifies the unique identifier (ID) of the policy that needs to be executed. |
| optionalSync | This is an optional Boolean parameter. It determines whether the policy should be executed synchronously or asynchronously. The default value is false. If set to true, the execution will return only after it has completed. If set to false, it will return immediately after the execution begins. |
| optionalIncremental | This is an optional Boolean parameter. In the example below, false requests a full rather than an incremental execution. |
| optionalFailureStrategy | This is an optional enum parameter used to determine the behavior in the event of a failure. The default value is |
| pipelineRunId | This is an optional parameter specified as a long. It represents the run ID of the pipeline where the policy is being executed. This parameter can be used to link the execution of the policy with a specific pipeline run, providing context for the execution. |
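The difference between the two values of optionalSync can be illustrated with the standard CompletableFuture API. The sketch below is a generic model, not the SDK's implementation: `SyncAsyncSketch.execute` is a hypothetical helper that starts work in the background and, when the sync flag is true, waits for it to finish before returning the handle.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.function.Supplier;

final class SyncAsyncSketch {

    /** Starts the work on a background thread; when sync is true, blocks until it completes. */
    static <T> CompletableFuture<T> execute(Supplier<T> work, boolean sync) {
        CompletableFuture<T> handle = CompletableFuture.supplyAsync(work);
        if (sync) {
            handle.join(); // returns only after the work has finished
        }
        return handle;
    }

    /** Waits on the latch, converting interruption into an unchecked exception. */
    private static void await(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // The latch keeps the background work unfinished until we release it.
        CountDownLatch release = new CountDownLatch(1);
        CompletableFuture<String> async = execute(() -> {
            await(release);
            return "done";
        }, false);
        System.out.println("async finished on return: " + async.isDone());
        release.countDown();
        System.out.println("async result: " + async.join());

        CompletableFuture<String> sync = execute(() -> "done", true);
        System.out.println("sync finished on return: " + sync.isDone());
    }
}
```

In the asynchronous case the caller gets a handle immediately and asks for the result later, which mirrors calling getStatus or getResult on the returned executor object.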

    // Specify the policy type
    PolicyType policyType = PolicyType.RECONCILIATION;

    // Specify the unique identifier (ID) of the policy to be executed
    long policyId = 154636L;

    // Set the execution mode to synchronous (wait for completion)
    boolean optionalSync = true;

    // Specify that the policy execution should be full, not incremental
    boolean optionalIncremental = false;

    // Set the failure strategy to FailOnError (throw exception on error)
    FailureStrategy optionalFailureStrategy = FailureStrategy.FailOnError;

    // You can optionally specify a pipeline run ID if needed
    Optional<Long> pipelineRunId = Optional.empty();

    // Execute the policy with the specified parameters
    Executor executor = adocClient.executePolicy(
            policyType,
            policyId,
            Optional.of(optionalSync),
            Optional.of(optionalIncremental),
            Optional.of(optionalFailureStrategy),
            pipelineRunId);

Getting Policy Execution Result

To access the outcome of a policy execution, you have two options. You can call the getPolicyExecutionResult method directly on the adocClient, or you can use the getResult method provided by the executor object returned by the policy execution. If the policy execution is in a non-terminal state, the call is retried until the execution reaches a terminal state. The getPolicyExecutionResult parameters include:

| Parameter | Description |
|---|---|
| policyType | This parameter is mandatory and is specified using an enum, which is like a set of predefined options. It tells the system what kind of policy to apply. It can take two values: PolicyType.DATA_QUALITY or PolicyType.RECONCILIATION. |
| executionId | This is a required parameter, and it's a string value. It represents the unique identifier of the execution you want to query for results. You need to specify this identifier to retrieve specific results. |
| optionalFailureStrategy | This parameter is optional, and it's also an enum parameter. It helps decide what to do in case something goes wrong during the execution. The default behavior, if you don't specify this, is to not fail (FailureStrategy.DoNotFail). This means that if there is a problem, it will be logged, but the execution continues. |
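The retry behavior and the two failure strategies can be sketched as a bounded polling loop. This is an illustration under stated assumptions, not the SDK's code: `PollingSketch`, the `Status` and `OnFailure` enums, and the use of milliseconds for the sleep interval are all hypothetical choices made for this example.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.function.Supplier;

final class PollingSketch {

    /** Hypothetical execution states; only RUNNING is non-terminal. */
    enum Status {
        RUNNING, SUCCEEDED, ERRORED;

        boolean isTerminal() {
            return this != RUNNING;
        }
    }

    /** Hypothetical counterpart of a failure strategy. */
    enum OnFailure { DO_NOT_FAIL, FAIL_ON_ERROR }

    /**
     * Fetches the status once, then re-fetches up to retryCount times, sleeping between
     * attempts, until a terminal status is seen. With FAIL_ON_ERROR any outcome other
     * than SUCCEEDED raises an exception; with DO_NOT_FAIL the last status is returned.
     */
    static Status awaitTerminal(Supplier<Status> fetchStatus, long sleepMillis,
                                int retryCount, OnFailure onFailure) {
        Status status = fetchStatus.get();
        for (int attempt = 0; attempt < retryCount && !status.isTerminal(); attempt++) {
            try {
                Thread.sleep(sleepMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for a result", e);
            }
            status = fetchStatus.get();
        }
        if (status != Status.SUCCEEDED && onFailure == OnFailure.FAIL_ON_ERROR) {
            throw new IllegalStateException("Execution did not succeed: " + status);
        }
        return status;
    }

    public static void main(String[] args) {
        // Simulated status sequence standing in for calls to a remote service.
        Iterator<Status> simulated =
                Arrays.asList(Status.RUNNING, Status.RUNNING, Status.SUCCEEDED).iterator();
        Status result = awaitTerminal(simulated::next, 10, 5, OnFailure.FAIL_ON_ERROR);
        System.out.println("Final status: " + result);
    }
}
```

Bounding the loop with a retry count keeps a stuck execution from blocking the caller indefinitely, and the failure strategy decides whether an unsuccessful outcome surfaces as an exception or as a returned status.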

    // Specify the failure strategy as "FailOnError"
    Optional<FailureStrategy> optionalFailureStrategy = Optional.of(FailureStrategy.FailOnError);

    // Set the sleep interval to 15 (optional)
    Optional<Integer> sleepInterval = Optional.of(15);

    // Set the retry count to 5 (optional)
    Optional<Integer> retryCount = Optional.of(5);

    // Call getResult on the executor object with the optional parameters
    RuleResult ruleResult = executor.getResult(sleepInterval, retryCount, optionalFailureStrategy);

    // Alternatively, call getPolicyExecutionResult on the adocClient
    // to retrieve the policy execution result
    RuleResult ruleResultViaClient = adocClient.getPolicyExecutionResult(
            PolicyType.DATA_QUALITY, executor.getId(), optionalFailureStrategy);

Retrieving Current Policy Execution Status

To find out the current status of a policy execution, you have two options: call the getPolicyStatus method on the adocClient, or call the getStatus method on the executor object. Both return the current resultStatus of the execution, which indicates where the execution stands at that moment.

The required parameters for getPolicyStatus include:

| Parameter | Description |
|---|---|
| policyType | Required. An enum specifying the policy type. Accepted values are PolicyType.DATA_QUALITY and PolicyType.RECONCILIATION. |
| executionId | Required. A string holding the ID of the execution whose status is queried. |

```java
// Using the Executor object returned by the executePolicy call
PolicyExecutionStatus policyExecutionStatus = executor.getStatus();

// Or querying the status through the adocClient
PolicyExecutionStatus policyExecutionStatusFromClient =
        adocClient.getPolicyExecutionStatus(PolicyType.DATA_QUALITY, executor.getId());
```

Executing Policies Asynchronously

Here's an example that demonstrates how to initiate policy execution in an asynchronous manner.

```java
import java.util.Optional;

// Define connection details
String baseUrl = "baseUrl";
String accessKey = "accessKey";
String secretKey = "secretKey";

// Create an AdocClient with the specified connection details
AdocClient adocClient = AdocClient.create(baseUrl, accessKey, secretKey).build();
Long policyId = 88888L;

// Set optional parameters for asynchronous policy execution
Optional<Boolean> optionalSync = Optional.of(false); // Sync flag set to false for async execution
Optional<Boolean> optionalIncremental = Optional.of(false); // Optional: non-incremental execution mode
Optional<FailureStrategy> optionalFailureStrategy = Optional.of(FailureStrategy.FailOnError); // Optional: failure strategy
Optional<Long> optionalPipelineRunId = Optional.empty(); // Optional: pipeline run ID
Optional<Integer> optionalSleepInterval = Optional.of(15); // Optional: sleep interval between retries
Optional<Integer> optionalRetryCount = Optional.of(5); // Optional: retry count

// Get an asynchronous policy executor
Executor asyncExecutor = adocClient.executePolicy(
        PolicyType.DATA_QUALITY,
        policyId,
        optionalSync,
        optionalIncremental,
        optionalFailureStrategy,
        optionalPipelineRunId);

// Wait for the execution to complete and get the final result
RuleResult ruleResult = asyncExecutor.getResult(
        optionalSleepInterval,
        optionalRetryCount,
        optionalFailureStrategy);

// Get the current status of the asynchronous policy execution
PolicyExecutionStatus policyExecutionStatus = asyncExecutor.getStatus();
```

Executing Policies Synchronously

Here's an example that demonstrates how to initiate a synchronous policy execution, where the execution waits for completion before proceeding:

```java
import java.util.Optional;

// Define connection details
String baseUrl = "baseUrl";
String accessKey = "accessKey";
String secretKey = "secretKey";

// Create an AdocClient with the specified connection details
AdocClient adocClient = AdocClient.create(baseUrl, accessKey, secretKey).build();
Long policyId = 88888L;

// Set optional parameters for synchronous policy execution
Optional<Boolean> optionalSync = Optional.of(true); // Sync flag set to true for synchronous execution
Optional<Boolean> optionalIncremental = Optional.of(false); // Optional: non-incremental execution mode
Optional<FailureStrategy> optionalFailureStrategy = Optional.of(FailureStrategy.FailOnError); // Optional: failure strategy
Optional<Long> optionalPipelineRunId = Optional.empty(); // Optional: pipeline run ID
Optional<Integer> optionalSleepInterval = Optional.of(15); // Optional: sleep interval between retries
Optional<Integer> optionalRetryCount = Optional.of(5); // Optional: retry count

// Get a synchronous policy executor
Executor syncExecutor = adocClient.executePolicy(
        PolicyType.DATA_QUALITY,
        policyId,
        optionalSync,
        optionalIncremental,
        optionalFailureStrategy,
        optionalPipelineRunId);

// Get the final result of the execution
RuleResult ruleResult = syncExecutor.getResult(
        optionalSleepInterval,
        optionalRetryCount,
        optionalFailureStrategy);

// Get the current status of the synchronous policy execution
PolicyExecutionStatus policyExecutionStatus = syncExecutor.getStatus();
```

Enabling a Policy

The following code demonstrates how to enable a rule or policy.

```java
// Look up the policy by name to obtain its ID
String dqPolicyNameToEnableDisable = "sdk_dq_policy_to_enable_disable";
RuleResource dqRule = adocClient.getDataQualityRule(dqPolicyNameToEnableDisable);
Long policyId = dqRule.getRule().getId();

// Enable the policy
GenericRule rule = adocClient.enableRule(policyId);
```

Disabling a Policy

The following code demonstrates how to disable a rule or policy.

```java
// Look up the policy by name to obtain its ID
String dqPolicyNameToEnableDisable = "sdk_dq_policy_to_enable_disable";
RuleResource dqRule = adocClient.getDataQualityRule(dqPolicyNameToEnableDisable);
Long policyId = dqRule.getRule().getId();

// Disable the policy
GenericRule rule = adocClient.disableRule(policyId);
```

Canceling Policy Execution

The provided code demonstrates how to cancel a policy execution using both the executor object and the adocClient.

Using the executor object:

```java
// Execute a data quality policy asynchronously
Executor asyncExecutor = adocClient.executePolicy(
        PolicyType.DATA_QUALITY,
        153477L,
        Optional.of(false),
        Optional.of(false),
        Optional.of(FailureStrategy.FailOnError),
        Optional.empty());

// Cancel the execution using the executor object
CancelRuleResponse cancelRuleResponse = asyncExecutor.cancel();
```

Using adocClient:

```java
// Cancel a specific rule execution using the adocClient
adocClient.cancelRuleExecution(153477L);
```

Listing All Policies

You can retrieve a list of all policies from your catalog server, narrowed by the filters you specify. The parameters include:

| Parameter | Description |
|---|---|
| policyFilter | Filter criteria for the query, built with PolicyFilter.builder(). The example below sets policyType, active, and enable. |
| optionalPage | The page number of the query output, used to navigate through paginated results. |
| optionalSize | The size of each page, that is, how many policies each page of the query output contains. |
| optionalWithLatestExecution | When enabled, the query output includes details of the most recent execution of each policy. |
| optionalSortBy | The sorting order of the policies in the query output, for example by name or execution date. |
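To make the interplay of the page number and page size concrete, here is a stand-alone sketch that walks a paged source until a short page signals the end. It does not call the SDK: fetchAll, slice, and the in-memory list are hypothetical stand-ins, and the zero-based page numbering is an assumption, since the numbering used by the server is not stated here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

final class PagingSketch {

    // Collects every page from a paged source; a page shorter than pageSize ends the walk.
    static <T> List<T> fetchAll(BiFunction<Integer, Integer, List<T>> pageFetcher, int pageSize) {
        if (pageSize <= 0) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        List<T> all = new ArrayList<>();
        int page = 0; // assumption: pages are numbered from zero
        while (true) {
            List<T> batch = pageFetcher.apply(page, pageSize);
            all.addAll(batch);
            if (batch.size() < pageSize) {
                break;
            }
            page++;
        }
        return all;
    }

    // In-memory stand-in for a paged query: returns the requested slice of the list.
    static List<String> slice(List<String> source, int page, int size) {
        int from = Math.min(page * size, source.size());
        int to = Math.min(from + size, source.size());
        return source.subList(from, to);
    }

    public static void main(String[] args) {
        List<String> policies = List.of("p1", "p2", "p3", "p4", "p5");
        List<String> all = fetchAll((page, size) -> slice(policies, page, size), 2);
        System.out.println(all); // prints [p1, p2, p3, p4, p5]
    }
}
```

In real use, the lambda passed to fetchAll would wrap the paged listing call, passing the page number and size as the optional page and size arguments.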

```java
import java.util.List;
import java.util.Optional;

// Define filter criteria using variables
PolicyType policyTypeFilter = PolicyType.DATA_QUALITY; // Filter by policy type (e.g., DATA_QUALITY)
boolean activeFilter = true; // Include only active policies
boolean enableFilter = true; // Include only enabled policies
Optional<Integer> pageNumber = Optional.empty(); // Optional: page number of the query output
Optional<Integer> pageSize = Optional.empty(); // Optional: size of each page
boolean fetchLatestExecution = false; // Optional: whether to fetch the latest execution of each policy
Optional<String> sortingOrder = Optional.empty(); // Optional: sorting order

// Create a PolicyFilter using the defined variables
PolicyFilter policyFilter = PolicyFilter.builder()
        .policyType(policyTypeFilter)
        .active(activeFilter)
        .enable(enableFilter)
        .build();

// List all policies based on the provided filter and optional parameters
List<? extends Rule> allPolicies = adocClient.listAllPolicies(
        policyFilter,
        pageNumber,
        pageSize,
        Optional.of(fetchLatestExecution),
        sortingOrder);
```