Copying the Encrypted Data Between Two ODP Clusters Using Ranger KMS

ODP Hadoop supports at-rest encryption by designating HDFS directories as encryption zones. To support this, Hadoop integrates with a Key Management Service (KMS) that stores and manages encryption keys securely. Ranger KMS is an open-source, scalable key management solution that integrates with Hadoop. These capabilities simplify security for critical workloads running on Hadoop, but they also complicate fault tolerance and disaster recovery (DR) planning.

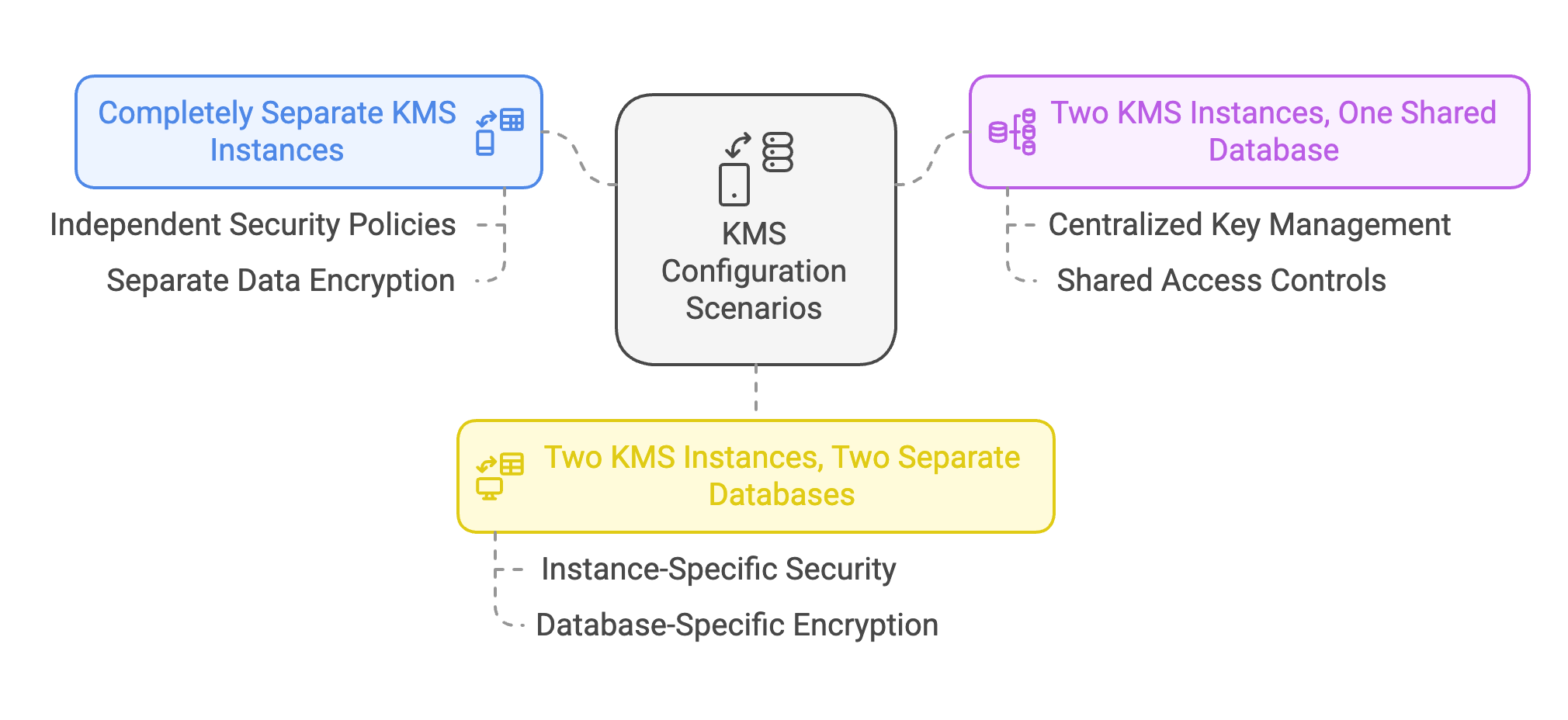

Organizations typically replicate data to an off-site DR cluster to ensure business continuity. However, this replication process becomes more involved when the data is encrypted. To retain data confidentiality and avoid unnecessary overhead, organizations must decide how Ranger KMS keys are shared or managed between clusters. The following scenarios illustrate different approaches for copying encrypted data between two ODP clusters using Ranger KMS.

Scenario 1: Separate the KMS Instances Completely

In this scenario, Cluster A (Production) and Cluster B (DR) each run independent Ranger KMS instances, with no key exchange. This approach offers enhanced security isolation:

- If Cluster A’s KMS is compromised, Cluster B’s KMS remains unaffected.

- Each cluster generates unique encryption keys, even for identical HDFS paths.

However, this method introduces a performance overhead when replicating data because the data must be decrypted on Cluster A and re-encrypted on Cluster B.

Data Copy Procedure

Use DistCp as you would in a non-encrypted environment. DistCp automatically handles decryption on the source and encryption on the target:

    # On ClusterA (Production):
    hadoop distcp -update \
      hdfs://ClusterA:8020/data/encrypted/fileA1.txt \
      hdfs://ClusterB:8020/data/encrypted/

Scenario 2: Two KMS Instances, One Shared Database

Here, Cluster A and Cluster B each run a separate Ranger KMS server but share the same database. Both KMS servers access identical keys, enabling transparent encryption and decryption across clusters.

Configure Ranger KMS on ClusterA (Production)

Follow the standard ODP Ranger KMS setup process, including configuring the database.

Configure ClusterB (DR) to Use the Same Database

- Replicate the KMS database configuration from ClusterA to ClusterB.

- Disable any “Setup Database and Database User” option to prevent re-initializing or overwriting the shared database.

Create Encryption Keys and Zones

- On ClusterA (Production):

      hadoop key create ProdKey1
      hdfs crypto -createZone -keyName ProdKey1 -path /data/encrypted

- On ClusterB (DR):

      hdfs crypto -createZone -keyName ProdKey1 -path /data/encrypted

- Copy Data Without Re-Encryption: Since both clusters share the same keys, data can remain encrypted during transit by using the hidden `/.reserved/raw` path. Include the `-px` flag to preserve extended attributes (which store the EDEK, the Encrypted Data Encryption Key):

      hadoop distcp -px \
        hdfs://ClusterA:8020/.reserved/raw/data/encrypted/fileA1.txt \
        hdfs://ClusterB:8020/.reserved/raw/data/encrypted/

Scenario 3: Two KMS Instances, Two Separate Databases

In this scenario, each cluster operates its own Ranger KMS server and maintains independent databases. Keys must be exported from ClusterA (Production) and imported into ClusterB (DR) to allow ClusterB to decrypt the data.

- Set Up Each KMS Independently: Install and configure both KMS servers according to the ODP documentation, including separate databases.

- Create Keys on ClusterA (Production)

- Export Keys from ClusterA (Production) on the node where the ClusterA KMS runs:

      cd /usr/odp/current/ranger-kms
      ./exportKeysToJCEKS.sh ClusterAProd.keystore JCEKS

  You will be prompted for passwords for the keystore and for the individual keys.

- Securely Transfer the Keystore File

      scp ClusterAProd.keystore ClusterBNode:/usr/odp/current/ranger-kms/

- Import Keys into ClusterB (DR) on the node where the Cluster B KMS runs:

      cd /usr/odp/current/ranger-kms
      ./importJCEKSKeys.sh ClusterAProd.keystore JCEKS

  Use the same passwords entered during export.

- Create the Corresponding Encryption Zone on ClusterB

      hdfs crypto -createZone -keyName ProdKey1 -path /data/encrypted

- Copy Encrypted Data: Now that Cluster B’s KMS has the corresponding keys, the data can be replicated via `/.reserved/raw` to avoid unnecessary decryption and re-encryption:

      hadoop distcp -px \
        hdfs://ClusterA:8020/.reserved/raw/data/encrypted/fileA1.txt \
        hdfs://ClusterB:8020/.reserved/raw/data/encrypted/

Each time new keys are created or existing keys are rotated on Cluster A (Production), you must repeat the export and import procedure to keep Cluster B’s KMS in sync.
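Keeping the two KMS instances in sync starts with knowing which keys Cluster B lacks. The following is a minimal sketch and not part of the ODP tooling: `missing_keys` is a hypothetical helper, and it assumes the key names of each cluster have already been saved, one per line, to two files (for example, derived from `hadoop key list` output). It compares names only, so it will not detect a key that was rotated on Cluster A.

```shell
# Sketch: print key names that exist on the source cluster but not on the DR cluster.
# Assumption: $1 and $2 are plain-text files with one key name per line
# (hypothetical inputs; how they are produced is up to the operator).
missing_keys() {
  # -F: treat names as fixed strings, -x: match whole lines only,
  # -v: invert, so only names absent from the DR list ($2) are kept.
  grep -Fxv -f "$2" "$1" | sort -u
}
```

For example, `missing_keys clusterA.keys clusterB.keys` (placeholder file names) prints each key name that still needs to be exported; empty output means no key names are missing on Cluster B.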

Known Issues and Resolutions

Below are some common issues you might encounter when setting up or using Ranger KMS in ODP Hadoop, along with recommended solutions.

KMS Key Export Script Fails

- Symptom: Running the `exportKeysToJCEKS.sh` script ends with an error or does not proceed.

- Cause: JAVA_HOME is not set or not set correctly.

- Solution:

- Verify that Java is installed on the system.

- Export the correct JAVA_HOME path:

      export JAVA_HOME=/path/to/java

- Rerun the export script.
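A quick pre-flight check can catch this condition before the export script runs. The sketch below uses a hypothetical helper, `check_java_home`, and assumes only that a usable installation has an executable at `$JAVA_HOME/bin/java`.

```shell
# Sketch: verify that JAVA_HOME is set and points at a Java installation.
# check_java_home is a hypothetical helper name, not part of Ranger KMS.
check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "No executable found at $JAVA_HOME/bin/java" >&2
    return 1
  fi
  echo "JAVA_HOME looks usable: $JAVA_HOME"
}
```

It could be chained in front of the export, for example `check_java_home && ./exportKeysToJCEKS.sh ClusterAProd.keystore JCEKS`.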

Export to JCEKS Fails with a “Rejected by the jceks.key.serialFilter” Exception

Symptom: The following exception appears:

    Caused by: java.security.UnrecoverableKeyException: Rejected by the jceks.key.serialFilter or jdk.serialFilter property

Cause: The JCEKS key serialization filter is rejecting the serialized object.

Solution:

- Edit the `java.security` file (location may vary depending on your Java installation).

- Add or modify the filter to allow the `org.apache.hadoop.crypto.key` package. Whitespace is treated as part of a pattern, so keep the entries free of spaces:

      jceks.key.serialFilter = java.lang.Enum;java.security.KeyRep;java.security.KeyRep$Type;javax.crypto.spec.SecretKeySpec;org.apache.hadoop.crypto.key.**;!*

- Save the changes and restart the KMS or relevant services.
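Because the location of `java.security` varies, the sketch below probes the usual JDK layouts (`conf/security` on Java 9 and later, `jre/lib/security` or `lib/security` on Java 8) and reports whether the Hadoop key package already appears in the file. Both function names are hypothetical. The second check is a plain text search over non-comment lines, so it does not confirm that the entry sits inside the `jceks.key.serialFilter` property.

```shell
# Sketch: locate java.security under a given Java home directory.
# Assumption: the file is in one of the standard JDK locations listed below.
find_java_security() {
  # $1: Java home directory (for example, the value of JAVA_HOME)
  for candidate in "$1/conf/security/java.security" \
                   "$1/jre/lib/security/java.security" \
                   "$1/lib/security/java.security"; do
    if [ -f "$candidate" ]; then
      echo "$candidate"
      return 0
    fi
  done
  return 1
}

# Sketch: succeed if any non-comment line mentions the Hadoop key package.
mentions_hadoop_key_package() {
  # $1: path to a java.security file
  grep -v '^[[:space:]]*#' "$1" | grep -q 'org\.apache\.hadoop\.crypto\.key'
}
```

A typical call would be `f=$(find_java_security "$JAVA_HOME") && mentions_hadoop_key_package "$f"`, which exits non-zero when the file is missing or the package is not yet listed.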

DistCp Job Fails with Ranger KMS HA Enabled: “Unable to Get Kerberos TGT”

- Symptom: A DistCp job aborts or fails to authenticate when using a high-availability (HA) Ranger KMS setup.

- Cause: Ranger KMS is not properly handling Kerberos tickets, and the Hadoop DistCp job cannot acquire valid credentials.

- Solution:

- Change the Ranger KMS authentication type from Kerberos to simple:

      # In Ranger KMS configurations
      hadoop.authentication.type = simple

- Restart the Ranger KMS service.

- Rerun the DistCp job, which should now complete successfully.
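Independently of the KMS setting, a missing or expired ticket on the client can produce similar authentication failures, so it may be worth ruling that out. The sketch below assumes an MIT Kerberos `klist` on the PATH, whose `-s` option runs silently and signals through its exit status whether the credential cache is readable and unexpired. `have_valid_tgt` is a hypothetical helper name.

```shell
# Sketch: report whether the current user holds usable Kerberos credentials.
# Assumption: klist is the MIT Kerberos client tool and supports -s.
have_valid_tgt() {
  if ! command -v klist >/dev/null 2>&1; then
    echo "klist not found; cannot check Kerberos credentials" >&2
    return 2
  fi
  # -s prints nothing; exit status 0 means the cache is readable and not expired.
  klist -s
}
```

For example, `have_valid_tgt || echo "No valid ticket; run kinit before DistCp"` gives a quick signal before the job is submitted.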