High Availability for GraphQL

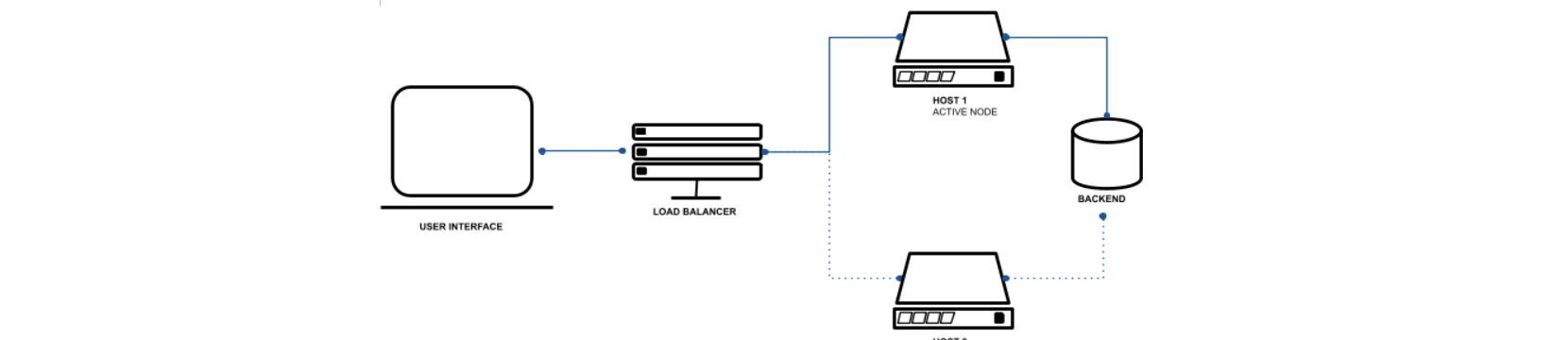

The objective of High Availability (HA) is to run the Pulse User Interface (UI) on a parallel node so that if one node goes down, the other node remains available and all traffic can be diverted to it. This feature is available from Pulse version 1.7.0 onwards.

You are responsible for setting up and managing any additional load balancers that automatically distribute the workload across the nodes.
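The failover behavior described above can be illustrated with a minimal sketch. This is not part of Pulse or of any load balancer product: the function names `is_up` and `pick_node` and the node names in the usage note are hypothetical, and the health check assumes that a node is healthy when its UI port (4000 in the sample configuration on this page) accepts a TCP connection.

```python
import socket


def is_up(host, port=4000, timeout=2.0):
    """Assumption: a node counts as healthy if its UI port accepts a TCP connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def pick_node(nodes, health_check=is_up):
    """Return the first healthy node in priority order, or None if all are down."""
    for node in nodes:
        if health_check(node):
            return node
    return None
```

For example, `pick_node(["active-node", "ha-node"])` would return the HA node only when the active node does not respond. A real load balancer performs this kind of check continuously and may also spread traffic across all healthy nodes.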

Prerequisites: The Accelo Utility must already be installed by the client on an active node. To install the Accelo Utility, see the Prerequisites. For a first-time installation of the Accelo Utility, contact support@acceldata.io.

Illustration: Load Balancer

Steps to Deploy High Availability

Follow the steps to deploy the HA for GraphQL.

Step 1: Amend the Active Node

Step 2: Amend the HA Node Post Deployment

First Host - Amend the Active Node

Execute the following steps on the Active node.

- In the <ACCELO_HOME>/config/acceldata_<cluster_name>.conf file, replace the host name and port values in the influx, elastic, and vm sections as shown in the Before and After configuration below.

Replace the Docker container names with the host name on which influx-db, elastic, vm-select, and vm-insert are deployed.

You must change the ports from the internal Docker ports to the ports exposed on the active host. host6 is used here as an example; use the correct host name for your environment.

Before:

    influx = [
      {
        name = "default"
        host = "ad-tsdb"
        port = 8086
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "kafka"
        host = "ad-tsdb"
        port = 8086
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "graphite"
        host = "ad-tsdb"
        port = 8086
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "nifi"
        host = "ad-tsdb"
        port = 8086
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "dsConnection",
        host = "ad-tsdb",
        port = 8086,
        username = "<username>"
        password = "<password>"
        encrypted = false,
        dbName = "<db_name>"
      }
    ],
    elastic = [
      {
        name = "default"
        host = "ad-elastic"
        port = 9200
      },
      {
        name = "fsanalytics"
        host = "ad-elastic"
        port = 9200
      },
      {
        name = "nifi"
        host = "ad-elastic"
        port = 9200
      }
    ]
    vm = [
      {
        name = "default",
        writeURL = "http://ad-vminsert:8480/insert/3609009724/influx",
        readURL = "http://ad-vmselect:8481/select/3609009724/prometheus",
        dbName = "ad_cdp710_demo",
        clusterNameHash = "3609009724"
      }
    ]

After:

    influx = [
      {
        name = "default"
        host = "host6"
        port = 19009
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "kafka"
        host = "host6"
        port = 19009
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "graphite"
        host = "host6"
        port = 19009
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "nifi"
        host = "host6"
        port = 19009
        username = "<username>"
        password = "<password>"
        dbName = "<db_name>"
      },
      {
        name = "dsConnection",
        host = "host6",
        port = 19009,
        username = "<username>"
        password = "<password>"
        encrypted = false,
        dbName = "<db_name>"
      }
    ],
    elastic = [
      {
        name = "default"
        host = "host6"
        port = 19013
      },
      {
        name = "fsanalytics"
        host = "host6"
        port = 19013
      },
      {
        name = "nifi"
        host = "host6"
        port = 19013
      }
    ]
    vm = [
      {
        name = "default",
        writeURL = "http://host6:19043/insert/3609009724/influx",
        readURL = "http://host6:19042/select/3609009724/prometheus",
        dbName = "ad_cdp710_demo",
        clusterNameHash = "3609009724"
      }
    ]
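Before deploying, it can help to confirm that the exposed ports on the active host actually accept connections. The following is a minimal sketch and not part of the Accelo tooling: the names `ENDPOINTS`, `port_open`, and `check_endpoints` are hypothetical, and the host and ports are the example values from the configuration above, so replace them with the values for your environment.

```python
import socket

# Example endpoints taken from the sample configuration above (assumed values).
ENDPOINTS = {
    "influx": ("host6", 19009),
    "elastic": ("host6", 19013),
    "vm-insert": ("host6", 19043),
    "vm-select": ("host6", 19042),
}


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connection, timeout, or host name that cannot be resolved.
        return False


def check_endpoints(endpoints, timeout=3.0):
    """Map each service name to True or False depending on reachability."""
    return {
        name: port_open(host, port, timeout)
        for name, (host, port) in endpoints.items()
    }
```

Calling `check_endpoints(ENDPOINTS)` from the HA node returns a dictionary keyed by service name. Any entry that is `False` suggests a wrong host name, a wrong port, or a firewall between the two nodes. A successful TCP connection only shows that the port is open, not that the service behind it is healthy.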

- Deploy the core, and type 'Y' at all prompts:

      accelo deploy core

- Now, restart all core components:

      accelo restart all

The changes are now saved on the active node.

Second Host - Amend the HA Node Post Deployment

- Install Docker.

- Download and initialize the Pulse CLI.

- Create the license file under the work directory and copy into it the contents of work/license from the first host.

- In the CLI, populate the configuration YAML file by running the following command:

      accelo admin makeconfig ad-ha-graphql

  This displays a prompt asking whether to overwrite the config file.

- Type 'Y' to confirm.

- After the file is generated, make the changes shown in the After version below:

Add lines 17 and 18 (CAP_SCHEDULER_ENABLED_YARN and PLATFORM) if you are a Cloudera platform user.

Add the host and port properties for Alerts and Actions (ALERTS_IP, ACTIONS_IP, ALERTS_PORT, and ACTIONS_PORT) in the environment section.

Before:

    version: "2"
    services:
      ad-ha-graphql:
        image: ad-graphql
        container_name: ad-ha-graphql
        environment:
          - MONGO_URI=ZN4v8cuUTXYvdnDJIDp+R8Z+ZsVXXjv8zDOvh8UwQXrj0RTboRglzSSmtH8Gu0LC
          - MONGO_ENCRYPTED=true
          - MONGO_SECRET=Ah+MqxeIjflxE8u+/wcqWA==
          - UI_PORT=4000
          - LDAP_HOST=ad-ldap
          - LDAP_PORT=19020
          - DS_HOST=ad-query-estimation
          - DS_PORT=8181
          - 'FEATURE_FLAGS={
              "ui_regex": { "regex": "ip-([^.]+)", "index": 1 },
              "rename_nav_labels": {},
              "timezone": "",
              "experimental": true,
              "themes": false,
              "hive_const": { "HIVE_QUERY_COST_ENABLED": false, "HIVE_MEMORY_GBHOUR_COST": 0, "HIVE_VCORE_HOUR_COST": 0 },
              "spark_const": { "SPARK_QUERY_COST_ENABLED": false, "SPARK_MEMORY_GBHOUR_COST": 0, "SPARK_VCORE_HOUR_COST": 0 },
              "queryRecommendations": false,
              "hostIsTrialORLocalhost": false,
              "data_temp_string": ""
            }'
        volumes:
          - /etc/localtime:/etc/localtime:ro
          - /data01/acceldata/work/license:/etc/acceldata/license:ro
        ulimits: {}
        ports:
          - 4000:4000
        depends_on: []
        opts: {}
        restart: ""
        extra_hosts: []
        network_alias: []
        label: HA GraphQL

After:

    version: "2"
    services:
      ad-ha-graphql:
        image: ad-graphql
        container_name: ad-ha-graphql
        environment:
          - MONGO_URI=ZN4v8cuUTXYvdnDJIDp+R8Z+ZsVXXjv8zDOvh8UwQXrj0RTboRglzSSmtH8Gu0LC
          - MONGO_ENCRYPTED=true
          - MONGO_SECRET=Ah+MqxeIjflxE8u+/wcqWA==
          - UI_PORT=4000
          - LDAP_HOST=ad-ldap
          - LDAP_PORT=19020
          - DS_HOST=ad-query-estimation
          - DS_PORT=8181
          - DB_HOST=<DB_HOST_IP>
          - DB_PORT=19030
          - CAP_SCHEDULER_ENABLED_YARN=false
          - PLATFORM=CLDR #(HWX for HDP clusters)
          - DASHPLOT_IP=<DASHPLOT_IP>
          - DASHPLOT_PORT=18080
          - ALERTS_IP=<ALERTS_IP>
          - ACTIONS_IP=<ACTIONS_IP>
          - ALERTS_PORT=19015
          - ACTIONS_PORT=19016
          - 'FEATURE_FLAGS={
              "ui_regex": { "regex": "ip-([^.]+)", "index": 1 },
              "rename_nav_labels": {},
              "timezone": "",
              "experimental": true,
              "themes": false,
              "hive_const": { "HIVE_QUERY_COST_ENABLED": false, "HIVE_MEMORY_GBHOUR_COST": 0, "HIVE_VCORE_HOUR_COST": 0 },
              "spark_const": { "SPARK_QUERY_COST_ENABLED": false, "SPARK_MEMORY_GBHOUR_COST": 0, "SPARK_VCORE_HOUR_COST": 0 },
              "queryRecommendations": false,
              "hostIsTrialORLocalhost": false,
              "data_temp_string": ""
            }'
        volumes:
          - /etc/localtime:/etc/localtime:ro
          - /data01/acceldata/config/hosts:/etc/hosts:ro
          - /data01/acceldata/work/license:/etc/acceldata/license:ro
        ulimits: {}
        ports:
          - 4000:4000
        depends_on: []
        opts: {}
        restart: ""
        extra_hosts: []
        network_alias: []
        label: HA GraphQL

If LDAP and Proxy are enabled on the Active node, the same configuration must be mirrored on the HA node.

- For the optional ad-ldap service, follow the same LDAP integration steps as on the first host and update config/ldap/ldap.conf.

- For the optional ad-proxy service, follow the same steps as for the Pulse UI TLS integration (placing the certificate files and removing port 4000 from the GraphQL YAML), plus one additional step for ad-ha-graphql:

  - In the file config/proxy/config.toml, update the URL that mentions ad-graphql to ad-ha-graphql.

Update either

/etc/hostsentries or create a separate host file at locationconfig/hostto add following host entry:

<FIRST_NODE_IP> <FIRST_NODE_HOST_NAME> ad-db- Optional: In case of using

config/hostfile, update thevolumesfile mount inconfig/docker/addons/ad-ha-graphql.yml:

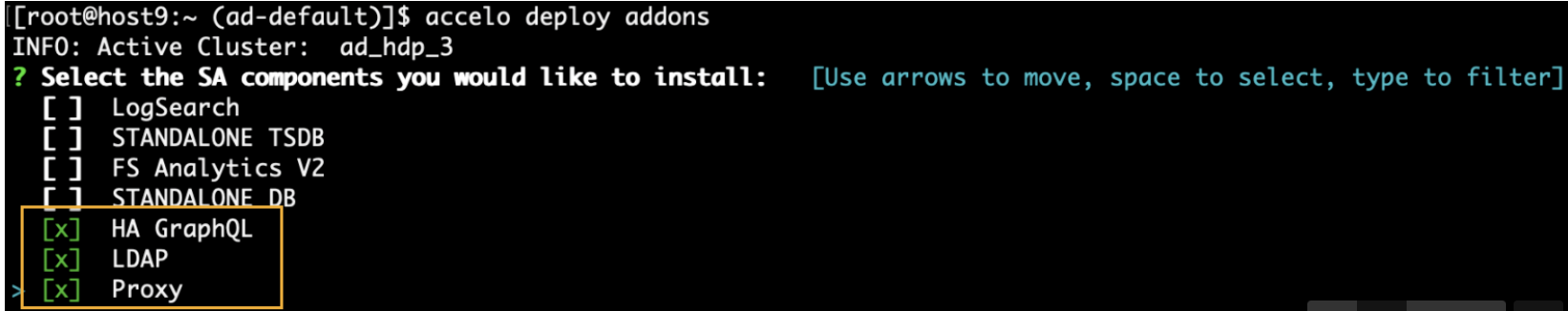

#Before - /etc/hosts:/etc/hosts:ro #After - /data01/acceldata/config/host:/etc/hosts:ro- Deploy respective addons to start UI service on second host, by selecting the ad-graphql, ad-ldap(optional), and ad-proxy(optional) services. ad-ha-graphql is now deployed:
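If the UI on the second host cannot reach the database, one thing worth checking is whether the ad-db alias is really present in the hosts file that the container mounts. The sketch below is only an illustration: `resolve_alias` is a hypothetical helper, not an Accelo command, and the IP address and host name in the usage note are made-up sample values.

```python
def resolve_alias(hosts_text, alias):
    """Return the IP address mapped to alias in hosts-file text, or None if absent."""
    for line in hosts_text.splitlines():
        # Drop trailing comments, then split the entry on whitespace.
        entry = line.split("#", 1)[0].split()
        # A valid entry is an IP address followed by one or more names.
        if len(entry) >= 2 and alias in entry[1:]:
            return entry[0]
    return None
```

For example, `resolve_alias("10.0.0.5 node1.example.com ad-db", "ad-db")` returns `"10.0.0.5"`. To check a real file, read its contents first, for instance with `open("/etc/hosts").read()`, or read the separate host file if you created one.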

The deployment of High availability for GraphQL is now complete.