This section describes how to install and configure Apache Airflow using RPM packages and a Management Pack (Mpack). Apache Airflow is a platform for authoring, scheduling, and monitoring workflows and data processing pipelines. The RPM packages and Mpack simplify installation and integration, making deployment more straightforward. Follow these instructions to set up and manage workflows with Apache Airflow on your system.

Here’s how to set up Airflow across two nodes:

Node 1:

- Airflow Scheduler

- Airflow Webserver

- Airflow Celery Worker

Node 2:

- Airflow Celery Worker

Configuration Details

Node 1

- Install Airflow.

- Configure Airflow to run the Scheduler, Webserver, and Worker components.

- Adjust `airflow.cfg` to set the correct hostname and port for the Webserver.

Node 2

- Install Airflow.

- Configure Airflow to run only the Worker component.

- Ensure that the Worker’s configuration references the correct broker URL for the message queue (for example, RabbitMQ), so that the Worker can receive the tasks the Scheduler queues through the broker.
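If you want to spot-check the resulting `airflow.cfg` on each node, the Python sketch below reads a file's contents and reports missing or unexpected settings for this Celery-based layout. It assumes Airflow 2.8 section names (`[core] executor`, `[database] sql_alchemy_conn`, `[celery] broker_url`); the function name `check_airflow_cfg` and the sample IP addresses are hypothetical.

```python
import configparser

# Settings this two-node Celery layout depends on (Airflow 2.8 section names).
REQUIRED_KEYS = {
    "core": ["executor"],
    "database": ["sql_alchemy_conn"],
    "celery": ["broker_url"],
}


def check_airflow_cfg(text: str) -> list[str]:
    """Return a list of problems found in the contents of an airflow.cfg file."""
    # interpolation=None reads values verbatim; airflow.cfg values can contain '%'.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)

    problems = []
    for section, keys in REQUIRED_KEYS.items():
        for key in keys:
            if not parser.has_option(section, key):
                problems.append(f"missing [{section}] {key}")

    executor = parser.get("core", "executor", fallback=None)
    if executor is not None and executor != "CeleryExecutor":
        problems.append(f"[core] executor is {executor}; this layout needs CeleryExecutor")

    broker = parser.get("celery", "broker_url", fallback=None)
    if broker is not None and not broker.startswith(("amqp://", "pyamqp://")):
        problems.append("[celery] broker_url is not an AMQP (RabbitMQ) URL")

    return problems


if __name__ == "__main__":
    sample = """
[core]
executor = CeleryExecutor

[database]
sql_alchemy_conn = postgresql+psycopg2://airflow:airflow@10.0.0.11:5432/airflow

[celery]
broker_url = amqp://airflow:airflow@10.0.0.11:5672/airflow_vhost
"""
    print(check_airflow_cfg(sample))  # prints []
```

To use it, pass the contents of the node's `airflow.cfg` (its location depends on your `AIRFLOW_HOME`); an empty list means none of these checks failed.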

General Configuration

- Install all necessary dependencies on both nodes, including Python packages, database connectors, and other required libraries.

- If you use an external database backend (PostgreSQL or MySQL), set up the database instance and configure the connection settings in `airflow.cfg`.

- Confirm that network settings allow communication between Node 1 and Node 2 on the required Airflow ports and related services (for example, RabbitMQ).

- Test the configuration thoroughly to ensure all Airflow components communicate effectively across both nodes.
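To confirm that Node 1 and Node 2 can reach each other, you can probe the relevant TCP ports from each node. The Python sketch below uses only the standard library. The port numbers are common defaults (Airflow webserver 8080, Celery worker log server 8793, RabbitMQ 5672, PostgreSQL 5432, MySQL 3306) and are assumptions to adjust to your configuration; the function names are hypothetical.

```python
import socket
from typing import Optional

# Common default ports for this layout (assumptions; match them to your configuration).
DEFAULT_PORTS = {
    "Airflow webserver": 8080,
    "Airflow worker log server": 8793,
    "RabbitMQ (AMQP)": 5672,
    "PostgreSQL": 5432,
    "MySQL": 3306,
}


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


def check_ports(host: str, ports: Optional[dict] = None) -> dict:
    """Probe each named port on `host` and report whether it is reachable."""
    ports = DEFAULT_PORTS if ports is None else ports
    return {name: port_open(host, port) for name, port in ports.items()}
```

For example, run `check_ports("node1.example.com")` from Node 2 (the hostname is a placeholder) and look for `False` entries; only the services you actually run need to be reachable.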

This setup distributes Airflow components across two nodes so that task execution is shared between the Celery workers on Node 1 and Node 2. Adjust the layout to match your infrastructure and workload.

RHEL8 Setup for Node 1

Prerequisites

Before starting, install the necessary development tools and Python packages. This guide uses Python 3.11 (`python3.11` and `pip3.11`); Airflow 2.8.1 supports Python 3.8 through 3.11.

```shell
sudo yum install python3.11-devel -y
sudo yum -y groupinstall "Development Tools"
```

Database Setup

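For reliable testing and operation, configure a PostgreSQL or MySQL database backend. Airflow uses SQLite by default, but SQLite is intended for development only and works only with the SequentialExecutor, so it cannot be used with the CeleryExecutor in this two-node setup. Airflow supports the following databases: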

- PostgreSQL: Versions 12 through 16

- MySQL: Version 8.0, Innovation

- MSSQL (experimental, discontinuing in version 2.9.0): Versions 2017 and 2019

- SQLite: Version 3.15.0 and above

For this setup, use one of the following database versions:

- MySQL: Version 8.0 or higher

- PostgreSQL: Version 12 or higher
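The version requirements above can be expressed as a small lookup. This Python sketch checks a plain dotted version string (such as `15.4` or `8.0.36`) against the ranges listed in this section; the table and function names are illustrative, and it does not model MSSQL or the specifics of MySQL Innovation releases.

```python
# Supported versions listed above, as (lowest, highest) tuples; None means no upper bound.
SUPPORTED_VERSIONS = {
    "postgresql": ((12,), (16,)),
    "mysql": ((8, 0), None),
    "sqlite": ((3, 15, 0), None),
}


def parse_version(text: str) -> tuple:
    """Turn a dotted version string such as '15.4' into (15, 4)."""
    return tuple(int(part) for part in text.strip().split("."))


def is_supported(backend: str, version: str) -> bool:
    low, high = SUPPORTED_VERSIONS[backend.lower()]
    v = parse_version(version)
    if v < low:
        return False
    # Compare only as many components as the upper bound specifies (16 covers 16.x).
    if high is not None and v[: len(high)] > high:
        return False
    return True


if __name__ == "__main__":
    print(is_supported("postgresql", "12.18"))  # True
    print(is_supported("postgresql", "11.22"))  # False
    print(is_supported("mysql", "5.7.44"))      # False
```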

Follow the respective instructions below for your chosen database system to initialize and configure it for use with Apache Airflow.

PostgreSQL Database Setup

To use PostgreSQL with Apache Airflow, perform the following steps to install and configure it:

- Install the `psycopg2-binary` Python package:

```shell
pip3.11 install psycopg2-binary
```

- Install PostgreSQL:

```shell
# Install the repository RPM:
sudo dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm
# Disable the built-in PostgreSQL module:
sudo dnf -qy module disable postgresql
# Install PostgreSQL:
sudo dnf install -y postgresql12-server
```

- Initialize and Start PostgreSQL:

```shell
# Initialize the database cluster, then enable automatic start and start the service:
sudo /usr/pgsql-12/bin/postgresql-12-setup initdb
sudo systemctl enable postgresql-12
sudo systemctl start postgresql-12
```

- Create a PostgreSQL Database and User for Airflow:

To set up the database and user for Apache Airflow in PostgreSQL, perform the following steps:

- Access the PostgreSQL Shell:

```shell
sudo -u postgres psql
```

- Inside the PostgreSQL shell, execute the following commands:

```sql
-- Create the Airflow database:
CREATE DATABASE airflow;
-- Create the Airflow user with a password:
CREATE USER airflow WITH PASSWORD 'airflow';
-- Set client encoding, default transaction isolation, and timezone for the Airflow user:
ALTER ROLE airflow SET client_encoding TO 'utf8';
ALTER ROLE airflow SET default_transaction_isolation TO 'read committed';
ALTER ROLE airflow SET timezone TO 'UTC';
-- Grant all privileges on the Airflow database to the Airflow user:
GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow;
-- Exit the PostgreSQL shell:
\q
```

If you are using PostgreSQL 15 or later, run the following commands instead. PostgreSQL 15 no longer grants CREATE on the `public` schema to all users by default, so the Airflow user needs an explicit grant on that schema:

```sql
-- Create the Airflow database:
CREATE DATABASE airflow;
-- Create the Airflow user with a password:
CREATE USER airflow WITH PASSWORD 'airflow';
-- Set client encoding, default transaction isolation, and timezone for the Airflow user:
ALTER ROLE airflow SET client_encoding TO 'utf8';
ALTER ROLE airflow SET default_transaction_isolation TO 'read committed';
ALTER ROLE airflow SET timezone TO 'UTC';
-- Grant all privileges on the Airflow database to the Airflow user:
GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow;
-- Connect to the airflow database
-- (psql confirms: You are now connected to database "airflow" as user "postgres".):
\c airflow
-- Allow the Airflow user to create objects in the public schema:
GRANT ALL ON SCHEMA public TO airflow;
-- Exit the PostgreSQL shell:
\q
```

The PostgreSQL database `airflow` and the user `airflow`, with the settings and privileges above, are now set up. Continue with the following steps to configure PostgreSQL for use with Apache Airflow.

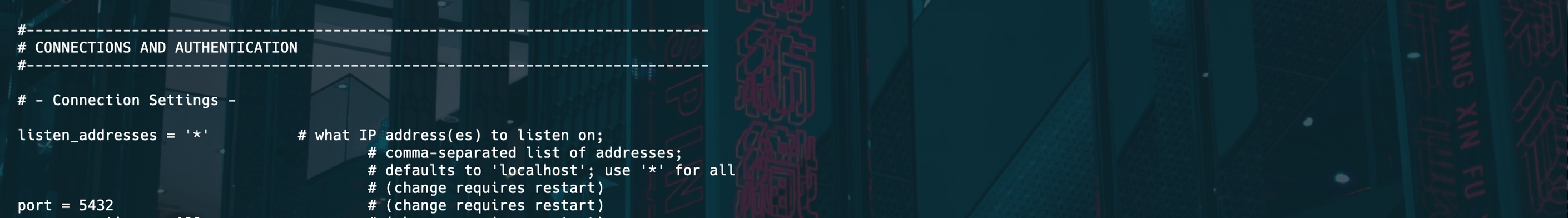

- Configure PostgreSQL Settings for Airflow:

Once the Airflow database and user have been set up in PostgreSQL, adjust the PostgreSQL configuration to permit connections from the Airflow nodes. Proceed with the following steps:

- Open the PostgreSQL Configuration File:

```shell
vi /var/lib/pgsql/12/data/postgresql.conf
```

- Inside the file, modify the following settings:

```
# Set listen_addresses to '*' (uncomment the line if needed) so PostgreSQL accepts remote connections:
listen_addresses = '*'
# Uncomment the port setting (remove the leading '#'):
port = 5432
```

- Save and close the file.

- Open the pg_hba.conf file:

```shell
vi /var/lib/pgsql/12/data/pg_hba.conf
```

- At the end of the file, add one line for each node that runs Apache Airflow, replacing `{node1_IP}` and `{node2_IP}` with the actual IP addresses of Node 1 and Node 2:

```
host airflow airflow {node1_IP}/32 md5
host airflow airflow {node2_IP}/32 md5
```

- Save and close the file.
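With more nodes, writing the `pg_hba.conf` entries by hand becomes error-prone. This Python sketch generates one `host` line per node IP in the same format as the entry above, validating each address with the standard `ipaddress` module; the function name and example IPs are hypothetical.

```python
import ipaddress


def pg_hba_entries(node_ips, database="airflow", user="airflow", method="md5"):
    """Return one pg_hba.conf 'host' line (single-host /32 mask) per Airflow node IP."""
    lines = []
    for ip in node_ips:
        # IPv4Address raises ValueError for malformed input, catching typos early.
        addr = ipaddress.IPv4Address(ip.strip())
        lines.append(f"host {database} {user} {addr}/32 {method}")
    return lines


if __name__ == "__main__":
    # Hypothetical IPs for Node 1 and Node 2.
    for line in pg_hba_entries(["10.0.0.11", "10.0.0.12"]):
        print(line)
```

Append the printed lines to `pg_hba.conf`, then restart PostgreSQL as described next.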

- Restart PostgreSQL to apply changes:

```shell
sudo systemctl restart postgresql-12
```

MySQL Database Setup for Airflow

To set up MySQL as the database backend for Apache Airflow, perform the following steps:

- Install MySQL Server:

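```shell
sudo yum install -y mysql-server
# Install the MySQL client development headers (needed to build mysqlclient):
sudo yum install -y mysql-devel    # or: sudo yum install -y mariadb-devel
```

- Install the `mysqlclient` Python package:

```shell
pip3.11 install mysqlclient
```

- Start the MySQL service: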

```shell
sudo systemctl start mysqld
```

- Install the PyMySQL driver for Python:

```shell
pip3.11 install pymysql
```

- Secure the MySQL installation (optional but recommended):

```shell
sudo mysql_secure_installation
```

Follow the prompts to secure the MySQL installation, including setting a root password.

- Create Database and User for Airflow:

```shell
sudo mysql -u root -p
```

Enter the root password when prompted, then run the following inside the MySQL shell:

```sql
CREATE DATABASE airflow CHARACTER SET utf8 COLLATE utf8_unicode_ci;
CREATE USER 'airflow'@'%' IDENTIFIED BY 'airflow';
GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'%';
FLUSH PRIVILEGES;
EXIT;
```

If you are using a multinode setup, add users for the other nodes as well, replacing `IP_other_node` with the IP address of each additional node:

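```shell
# Use the same database name and password as the 'airflow'@'%' account created above.
sudo mysql -u root -p -e "CREATE USER 'airflow'@'IP_other_node' IDENTIFIED BY 'airflow'; GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'IP_other_node';"
```

- Restart MySQL to apply changes: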

```shell
sudo systemctl restart mysqld
```

The MySQL database `airflow` and the user `airflow` are now configured with the required privileges. Next, configure Apache Airflow to use this MySQL database as its backend.
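If you have several additional nodes, the per-node account statements can be generated rather than typed. This Python sketch produces `CREATE USER IF NOT EXISTS` and `GRANT` statements for a list of node IPs against the `airflow` database and user created above, for pasting into the MySQL shell. The function name and IPs are hypothetical, and to keep quoting simple it rejects passwords containing quotes or backslashes.

```python
import ipaddress


def mysql_node_grants(node_ips, password, database="airflow", user="airflow"):
    """Return SQL statements creating a host-specific Airflow account per node IP."""
    # Keep quoting trivial: refuse characters that would need escaping in a SQL literal.
    if "'" in password or "\\" in password:
        raise ValueError("password must not contain quotes or backslashes in this sketch")
    statements = []
    for ip in node_ips:
        addr = ipaddress.ip_address(ip.strip())  # raises ValueError on a bad IP
        account = f"'{user}'@'{addr}'"
        statements.append(f"CREATE USER IF NOT EXISTS {account} IDENTIFIED BY '{password}';")
        statements.append(f"GRANT ALL PRIVILEGES ON {database}.* TO {account};")
    statements.append("FLUSH PRIVILEGES;")
    return statements


if __name__ == "__main__":
    for stmt in mysql_node_grants(["10.0.0.12"], password="airflow"):
        print(stmt)
```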

Apache Airflow Installation using Mpack

Create symbolic links so that `python3` and `pip3` point to Python 3.11:

```shell
sudo ln -sf /usr/bin/python3.11 /usr/bin/python3
sudo ln -sf /usr/bin/pip3.11 /usr/bin/pip3
```

The following steps outline the installation and setup process for Apache Airflow using a Management Pack (Mpack) on an Ambari-managed cluster.

If a previous version of the Airflow Mpack is installed, uninstall it first:

```shell
ambari-server uninstall-mpack --mpack-name=airflow-ambari-mpack
```

Install Mpack:

```shell
ambari-server install-mpack --mpack=ambari-mpacks-airflow-2.8.1.tar.gz --verbose
```

Change Symlinks:

```shell
# Point the AIRFLOW service of each ODP stack version at the 2.8.1 Mpack.
cd /var/lib/ambari-server/resources/stacks/ODP/3.0/services
unlink AIRFLOW
ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

cd /var/lib/ambari-server/resources/stacks/ODP/3.1/services
unlink AIRFLOW
ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

cd /var/lib/ambari-server/resources/stacks/ODP/3.2/services
unlink AIRFLOW
ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

cd /var/lib/ambari-server/resources/stacks/ODP/3.3/services
unlink AIRFLOW
ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW
```

Restart Ambari Server:

```shell
ambari-server restart
```

The Airflow Mpack is now installed on the Ambari server, and the Airflow service can be added to your Ambari-managed cluster.
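Before adding the service in the Ambari UI, you can verify that each ODP stack version's `AIRFLOW` entry is a symlink to the 2.8.1 Mpack directory. This Python sketch uses the paths and stack versions (3.0 through 3.3) from the symlink step above; the function name is hypothetical, and it is meant to run on the Ambari server host.

```python
from pathlib import Path

# Paths taken from the symlink step above.
STACKS_ROOT = Path("/var/lib/ambari-server/resources/stacks/ODP")
MPACK_TARGET = Path(
    "/var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1"
    "/common-services/AIRFLOW/2.8.1"
)
STACK_VERSIONS = ("3.0", "3.1", "3.2", "3.3")


def check_airflow_links(stacks_root=STACKS_ROOT, target=MPACK_TARGET, versions=STACK_VERSIONS):
    """Map each stack version to True if services/AIRFLOW is a symlink resolving to `target`."""
    expected = Path(target).resolve()
    results = {}
    for version in versions:
        link = Path(stacks_root) / version / "services" / "AIRFLOW"
        results[version] = link.is_symlink() and link.resolve() == expected
    return results


if __name__ == "__main__":
    for version, ok in check_airflow_links().items():
        print(f"ODP {version}: {'OK' if ok else 'AIRFLOW link missing or points elsewhere'}")
```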

Before starting the installation or setting up the Airflow service through the Ambari UI, make sure that the RabbitMQ configuration on the master node is complete.

RHEL8 Setup for Node 2

Prerequisites

```shell
sudo yum install python3.11-devel -y
sudo yum -y groupinstall "Development Tools"
```

Install the necessary database connectors for the database you are using.

```shell
# PostgreSQL
pip3.11 install psycopg2-binary
# MySQL (mysqlclient also needs mysql-devel or mariadb-devel, as on Node 1)
pip3.11 install mysqlclient
pip3.11 install pymysql
```

Before proceeding with the Airflow installation from Ambari, ensure that you have set up the Apache Airflow repository on both nodes.
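Before moving to the Ambari UI, a quick check on each node can confirm that the interpreter and database drivers are in place. This Python sketch, meant to run under `python3.11`, compares the interpreter version with the 3.11 used in this guide and checks whether the driver modules can be found (`psycopg2` from psycopg2-binary, `MySQLdb` from mysqlclient, `pymysql` from PyMySQL). The function names are hypothetical.

```python
import importlib.util
import sys
from typing import Optional

EXPECTED_PYTHON = (3, 11)  # the interpreter version this guide installs

# Import names of the driver packages installed above.
DRIVER_MODULES = {
    "postgresql": ["psycopg2"],       # from psycopg2-binary
    "mysql": ["MySQLdb", "pymysql"],  # from mysqlclient and PyMySQL
}


def missing_modules(names):
    """Return the module names the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def check_environment(backend: str, version: Optional[tuple] = None) -> list:
    """Report Python-version and driver problems for the chosen database backend."""
    major, minor = version or sys.version_info[:2]
    problems = []
    if (major, minor) != EXPECTED_PYTHON:
        problems.append(f"Python {major}.{minor} found; this guide uses 3.11")
    for name in missing_modules(DRIVER_MODULES[backend.lower()]):
        problems.append(f"module {name!r} not importable; install its pip package")
    return problems
```

For example, `check_environment("mysql")` returns an empty list when the interpreter is 3.11 and both MySQL drivers are installed.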

Install Apache Airflow using the Ambari UI

- Add Service:

- Select Airflow:

- Select Scheduler and Webserver nodes:

The webserver can be hosted on multiple nodes, but the scheduler must be located on the master node.

- Select the nodes for the Celery worker. In this two-node setup, select both Node 1 and Node 2.

- Enter the database and RabbitMQ details:

Database Options:

- Select either MySQL or PostgreSQL for your backend database:

- Set up the Airflow backend database connection string and Celery configuration. Enter the database name, username, password, database type (MySQL or PostgreSQL), and host IP; the provided script then generates the database connection string and Celery settings from these values.

Input Database Information in the Ambari UI:

- Database Name

- Password

- Username

- Database Type: Select MySQL or PostgreSQL

- Host IP
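The Mpack script builds the connection settings from these fields, so you normally do not write them yourself. For reference, this Python sketch shows the general shape of the resulting SQLAlchemy URL (as used for `[database] sql_alchemy_conn`) and of a Celery database result backend, which takes the same URL with a `db+` prefix. It assumes the psycopg2 and mysqlclient drivers installed earlier and default ports 5432 and 3306; it is not the Mpack's actual script, and the function names and IP are hypothetical.

```python
from urllib.parse import quote_plus

# SQLAlchemy dialect+driver prefixes for the drivers installed earlier (assumption).
DIALECTS = {"postgresql": "postgresql+psycopg2", "mysql": "mysql+mysqldb"}
DB_DEFAULT_PORTS = {"postgresql": 5432, "mysql": 3306}


def sql_alchemy_conn(db_type, host, user, password, database, port=None):
    """Build a SQLAlchemy URL of the kind used for [database] sql_alchemy_conn."""
    db_type = db_type.lower()
    port = port or DB_DEFAULT_PORTS[db_type]
    # Percent-encode credentials so characters like '@' or ':' don't break the URL.
    return (
        f"{DIALECTS[db_type]}://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


def celery_result_backend(conn_url):
    """Celery's database result backend takes the same URL with a 'db+' prefix."""
    return "db+" + conn_url


if __name__ == "__main__":
    url = sql_alchemy_conn("PostgreSQL", "10.0.0.11", "airflow", "airflow", "airflow")
    print(url)                         # postgresql+psycopg2://airflow:airflow@10.0.0.11:5432/airflow
    print(celery_result_backend(url))  # db+postgresql+psycopg2://airflow:airflow@10.0.0.11:5432/airflow
```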

If you are using RabbitMQ, configure and add the following RabbitMQ settings:

- RabbitMQ Username

- RabbitMQ Password

- RabbitMQ Virtual Host

- Celery Broker
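The Celery Broker setting for RabbitMQ is an AMQP URL built from the username, password, host, and virtual host. This Python sketch assembles one in the `amqp://user:password@host:port/vhost` form, percent-encoding each part so special characters in the password do not break the URL. The default port 5672, the function name, and the sample values are assumptions; note that RabbitMQ's default virtual host `/` is encoded as `%2F`.

```python
from urllib.parse import quote


def celery_broker_url(user, password, host, vhost="/", port=5672):
    """Build an AMQP broker URL: amqp://user:password@host:port/vhost."""
    def enc(part):
        # safe="" also encodes '/', so the default vhost "/" becomes "%2F".
        return quote(part, safe="")

    return f"amqp://{enc(user)}:{enc(password)}@{host}:{port}/{enc(vhost)}"


if __name__ == "__main__":
    # Hypothetical values for the RabbitMQ fields in the Ambari UI.
    print(celery_broker_url("airflow", "airflow", "10.0.0.11", "airflow_vhost"))
    # amqp://airflow:airflow@10.0.0.11:5672/airflow_vhost
```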