Spark

Spark Dashboard

Apache Spark is an open-source data processing engine that processes data in real time across clusters of computers. Spark in Pulse provides an overview of Spark jobs, their status, and other metrics such as the memory usage of applications. Pulse also provides a separate window for analyzing metrics on Spark Streaming data.

To view the Spark Dashboard, click Spark --> Dashboard. The dashboard consists of summary panels, a Sankey Diagram with various metrics, and charts that display information about jobs based on other criteria such as memory and core utilization. The default time range is Last 24 hrs. To change the time range, click the down arrow in the time selection menu.

Summary Panels

The following table provides a description of the metrics displayed in the summary panel of the dashboard:

| Metric | Description |
|---|---|
| Users | The total number of users. |
| # of Jobs | The total number of jobs. |
| Avg CPU Allocated | The average CPU time allocated. |
| Avg Mem Allocated | The average memory allocated. |
| Succeeded | The total number of jobs that were successfully completed. |
| Running | The total number of jobs that are currently active. |
| Failed | The total number of failed jobs. |
| Finishing | The total number of jobs that are in the process of finishing. |
| Killed | The total number of jobs that were killed. |
| App Exception | The total number of application-level exceptions. |
| Yarn Exception | The total number of YARN exceptions. |
| Dynamic Executors | The number of queries with Dynamic Executors set to True. |

Click a number to view the details of the jobs in that category. The Spark Job Details page is displayed with the applicable filter.

Metric Distributions

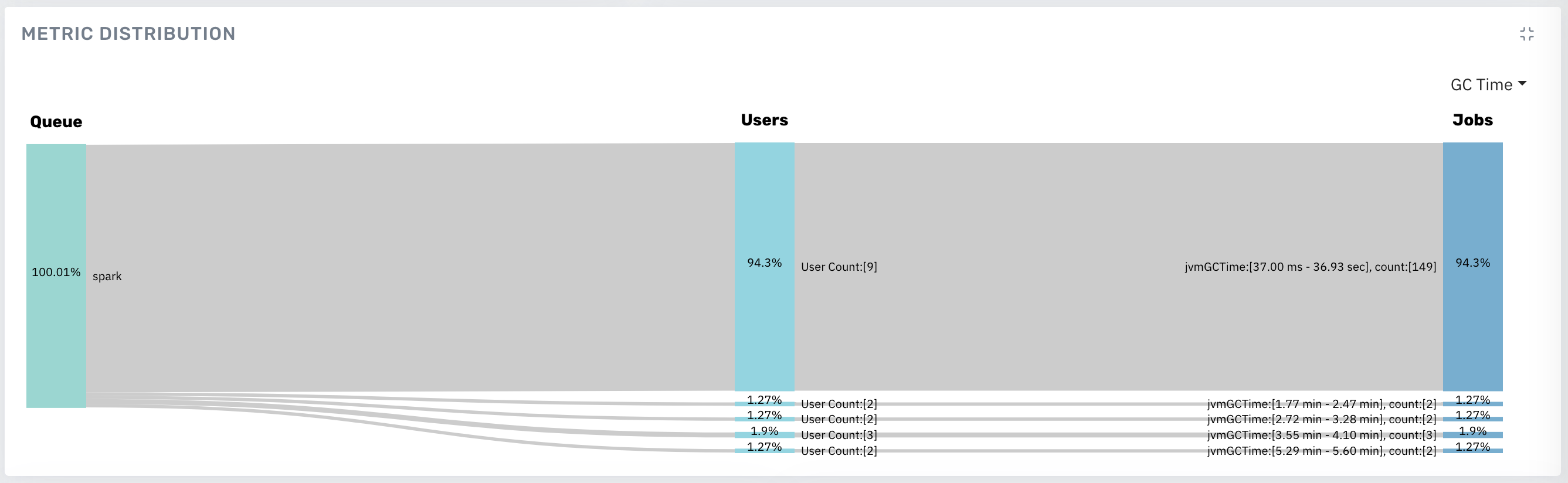

The Metric Distributions panel displays a summary of jobs as a Sankey diagram. By default, the chart displays the distribution by Duration. You can also display the distribution by VCore, VCore Time, Memory, Memory Time, Used Containers, or GC Time.

Sample of a Sankey Diagram and how to read it

Sample Sankey Diagram

You can gather the following information from this diagram:

- The distribution is displayed by GC Time.

- All jobs are running on the queue named spark.

- The GC Time for 151 jobs (94.38% of the jobs) is between 37 ms and 50.26 seconds.

- 94.38% of the jobs are being run by 9 users.

- A very small percentage of the jobs are time-consuming. (0.63% of the jobs took about 6.37 mins of GC time.)
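
The percentages in a reading like this are each band's share of the total job count. As a minimal sketch, assuming the sample diagram covers 160 jobs in total (a figure inferred from 151 jobs being 94.38%, not stated in the diagram itself), the shares can be reproduced as follows. The `share` helper is hypothetical and is not part of Pulse.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumed total job count, inferred from "151 jobs (94.38%)".
TOTAL_JOBS = 160


def share(count: int, total: int) -> Decimal:
    """Return count as a percentage of total, rounded half-up to two decimals."""
    pct = Decimal(100 * count) / Decimal(total)
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(share(151, TOTAL_JOBS))  # 94.38
print(share(1, TOTAL_JOBS))    # 0.63
```

Under that assumption, the 0.63% band corresponds to a single job.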

Other Spark Charts

The following table provides a description of the charts displayed on the Spark Dashboard:

| Chart Name | Description |
|---|---|
| VCore Usage | The number of virtual cores (VCores) used by a queue in the cluster. |
| Memory Usage | The amount of memory used by a queue in the cluster in a particular timeframe. |
| Error Categories | The number of YARN exceptions and App exceptions within a timeframe. |
| Average Job Time | The average and total time taken to execute a job within a timeframe. The chart displays the Average Execution Time and Total Execution Time of the Spark job. |
| Top 20 Users (By Query) | The top 20 users that ran the highest number of queries within the selected timeframe. By default, you can see the top 20 users from the last 24 hours. |
| Job Execution Count | The total number of Spark jobs executed within a timeframe. |
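
To make the Average Job Time and Top 20 Users definitions concrete, the following is a rough sketch of the aggregation they describe. It does not show how Pulse computes these charts internally. The job records, user names, and function names are all hypothetical.

```python
from collections import Counter

# Hypothetical job records: (user, execution time in seconds).
jobs = [
    ("alice", 120.0),
    ("bob", 30.0),
    ("alice", 60.0),
    ("carol", 90.0),
]


def top_users(records, limit=20):
    """Return up to `limit` (user, job count) pairs, highest count first."""
    counts = Counter(user for user, _ in records)
    return counts.most_common(limit)


def execution_time_summary(records):
    """Return (average, total) execution time in seconds."""
    durations = [seconds for _, seconds in records]
    total = sum(durations)
    average = total / len(durations) if durations else 0.0
    return average, total


print(top_users(jobs))
print(execution_time_summary(jobs))
```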

The metric charts may not populate, or may not have enough data points to be meaningful, for short applications whose lifetime is shorter than or close to the metric polling interval.

- These metrics are conditional on a configuration parameter: spark.metrics.executorMetricsSource.enabled (default value is true).

- ExecutorMetrics are updated as part of the heartbeat processes scheduled for the executors and for the driver at regular intervals: spark.executor.heartbeatInterval (default value is 10 seconds).

- An optional faster polling mechanism is available for executor memory metrics. It can be activated by setting a polling interval (in milliseconds) using the configuration parameter spark.executor.metrics.pollingInterval.
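
As an illustration of how these three parameters might be passed to an application, the sketch below builds `--conf` arguments for `spark-submit`. The helper name `metrics_conf_args`, the 500 ms polling interval, and the `app.py` script name are assumptions made for this example. Only the three configuration keys and their defaults come from the text above.

```python
def metrics_conf_args(polling_interval_ms=None,
                      heartbeat_interval="10s",
                      source_enabled=True):
    """Build spark-submit --conf arguments for the executor metrics settings.

    polling_interval_ms is optional; when omitted, the faster polling
    parameter is not set and Spark keeps its own default.
    """
    conf = {
        "spark.metrics.executorMetricsSource.enabled": str(source_enabled).lower(),
        "spark.executor.heartbeatInterval": heartbeat_interval,
    }
    if polling_interval_ms is not None:
        # The polling interval is expressed in milliseconds.
        conf["spark.executor.metrics.pollingInterval"] = str(polling_interval_ms)

    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


# Example: poll executor memory metrics every 500 ms (an arbitrary value).
# "app.py" is a placeholder application name.
print(" ".join(["spark-submit", *metrics_conf_args(polling_interval_ms=500), "app.py"]))
```

A shorter polling interval gives short-lived applications a better chance of producing data points, at the cost of more frequent metric collection on the executors.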

Queues

In the Queues panel, you can see the root queue, the default queue, and any custom queue(s) defined by the cluster administrator.

Root: This is a predefined queue that is a parent of all the available queues in your cluster. This queue uses 100% of the resources.

Default: A designated queue defined by the administrator. This queue contains jobs that do not have a queue allocated.

To view data on the dashboard for a particular queue, perform the following:

- Click the expand icon to view the list of queues. Click the collapse icon to hide the queues.

- Click the name of the queue. The data corresponding to the selected queue is displayed in the dashboard.

- (Optional) You can search for the name of the queue by using the search box.