Single Node Installation

RHEL 8 Setup

Prerequisites

Install the development tools and Python 3.8 or later. This documentation uses Python 3.8, but Apache Airflow is compatible with Python 3.8 and later versions.
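If a host already has a Python interpreter, it can help to check its version against the 3.8 minimum before installing anything. The following is a minimal sketch, not part of Airflow; `python_version_ok` is a hypothetical helper name, and the script assumes the usual `Python X.Y.Z` output of `python3 --version`.

```shell
# Succeeds when the given "Python X.Y.Z" version string is 3.8 or newer.
# python_version_ok is a hypothetical helper, not part of Airflow.
python_version_ok() {
    version=${1#Python }          # strip the leading "Python " if present
    major=${version%%.*}          # text before the first dot
    rest=${version#*.}            # text after the first dot
    minor=${rest%%.*}             # text before the next dot
    [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 8 ]; }
}

# Usage: check the interpreter that is currently on the PATH, if any.
if command -v python3 >/dev/null 2>&1; then
    if python_version_ok "$(python3 --version 2>&1)"; then
        echo "python3 is new enough for Airflow"
    else
        echo "python3 is older than 3.8"
    fi
fi
```

The comparison uses integer arithmetic on the major and minor parts, so 3.10 and later compare correctly against 3.8.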

    sudo dnf module enable python3.8 -y
    sudo dnf install -y python3.8
    sudo yum install python38-devel -y
    sudo yum -y groupinstall "Development Tools"

Check if the Python 3.8 executables are present in both locations, as shown, before proceeding with the Airflow installation.

    ll /usr/local/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Mar 10 11:20 /usr/local/bin/python3.8*
    ll /usr/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Feb 4 16:02 /usr/bin/python3.8*

Database Setup

For a realistic evaluation of Airflow, choose a robust database backend such as PostgreSQL or MySQL. By default, Airflow uses SQLite, which is intended mainly for development.

Airflow supports only specific versions of database engines. Verify that your database version is compatible as older versions might not support certain SQL features:

- PostgreSQL: Versions 12, 13, 14, 15, 16

- MySQL: Version 8.0, Innovation

- MSSQL (experimental, support ending in version 2.9.0): 2017, 2019

- SQLite: Version 3.15.0 or later
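The version list above can be turned into a quick pre-flight check. This is a sketch under stated assumptions: `backend_supported` is a hypothetical helper, its version lists simply mirror this page rather than querying Airflow, and MySQL Innovation releases and MSSQL are left out for brevity. It relies on `sort -V` from GNU coreutils.

```shell
# backend_supported ENGINE VERSION
# Succeeds when VERSION of ENGINE appears in the support list on this page.
# Hypothetical helper; MySQL Innovation releases and MSSQL are not covered.
backend_supported() {
    engine=$1
    version=$2
    case "$engine" in
        postgresql)
            # Only the major version matters for PostgreSQL.
            case "${version%%.*}" in
                12|13|14|15|16) return 0 ;;
            esac
            ;;
        mysql)
            case "$version" in
                8.0|8.0.*) return 0 ;;
            esac
            ;;
        sqlite)
            # sort -V orders version strings; the 3.15.0 minimum must sort first.
            lowest=$(printf '%s\n' "3.15.0" "$version" | sort -V | head -n 1)
            [ "$lowest" = "3.15.0" ] && return 0
            ;;
    esac
    return 1
}

# Usage: pass the engine name and the version reported by the server.
if backend_supported postgresql 12.17; then
    echo "PostgreSQL 12.17 is in the supported list"
fi
```

Unknown engines, including MariaDB, fall through to the final `return 1`.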

MariaDB is not a supported backend. Despite similarities with MySQL, there are known incompatibilities (for example, index handling), and our migration scripts and application workflows are not tested against MariaDB.

Deployments using MariaDB have historically resulted in significant operational issues. As a result, its use is strongly discouraged, and no official support or compatibility guarantees should be expected.

For reference, see Choosing Database Backend for Airflow.

Before installing Apache Airflow, set up a compatible database. Choose PostgreSQL or MySQL based on your needs and ensure that your database version meets the following minimum requirements:

- MySQL: Version 8.0 or higher

- PostgreSQL: Version 12 or higher

Refer to: Set up a Database Backend (Apache Airflow).

Follow the respective instructions below for your chosen database system to initialize and configure it for use with Apache Airflow.

PostgreSQL Database Setup

To integrate PostgreSQL with Apache Airflow, complete the following steps to install and configure it:

- Install PostgreSQL:

    # Install the repository RPM:
    sudo dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm
    # Disable the built-in PostgreSQL module:
    sudo dnf -qy module disable postgresql
    # Install PostgreSQL:
    sudo dnf install -y postgresql12-server

- Initialize and Start PostgreSQL:

    # Optionally initialize the database and enable automatic start:
    sudo /usr/pgsql-12/bin/postgresql-12-setup initdb
    sudo systemctl enable postgresql-12
    sudo systemctl start postgresql-12

- Create PostgreSQL Database and User for Airflow:

To set up the database and user for Apache Airflow in PostgreSQL, follow these steps:

Access the PostgreSQL Shell:

    sudo -u postgres psql

Inside the PostgreSQL Shell, execute the following commands:

    -- Create the Airflow database:
    CREATE DATABASE airflow;
    -- Create the Airflow user with a password:
    CREATE USER airflow WITH PASSWORD 'airflow';
    -- Set client encoding, default transaction isolation, and timezone for the Airflow user:
    ALTER ROLE airflow SET client_encoding TO 'utf8';
    ALTER ROLE airflow SET default_transaction_isolation TO 'read committed';
    ALTER ROLE airflow SET timezone TO 'UTC';
    -- Grant all privileges on the Airflow database to the Airflow user:
    GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow;
    -- Exit the PostgreSQL shell:
    \q

Now, the PostgreSQL database named airflow and the user airflow with the specified settings and privileges have been created. Proceed with the next steps to configure Apache Airflow with this PostgreSQL database.

- Configure PostgreSQL Settings for Airflow: After creating the Airflow database and user in PostgreSQL, modify the PostgreSQL configuration to allow connections from the Apache Airflow server. Follow these steps:

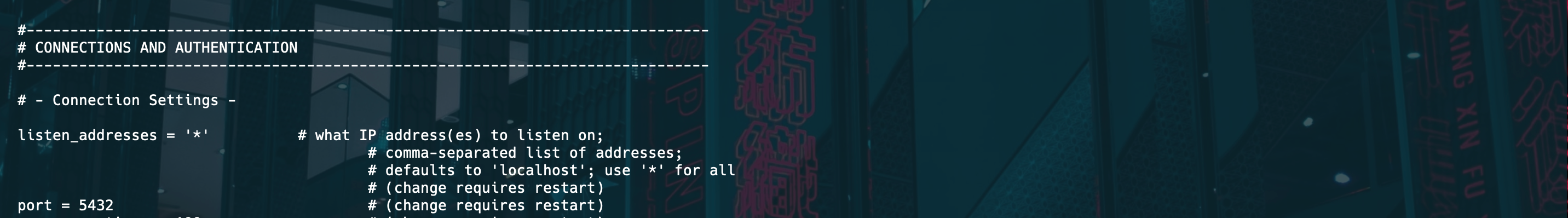

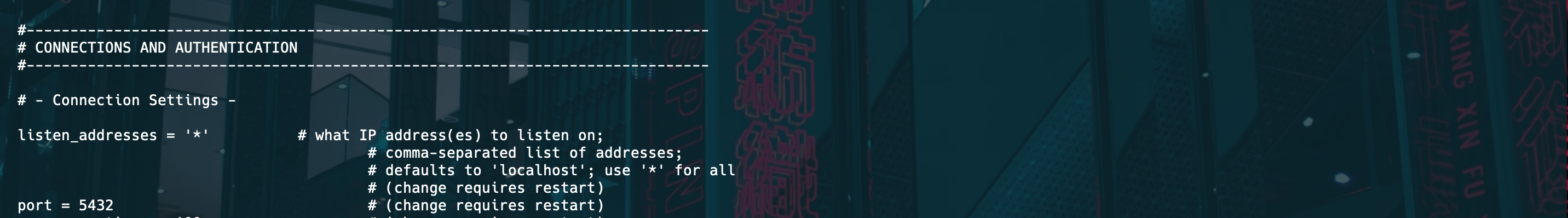

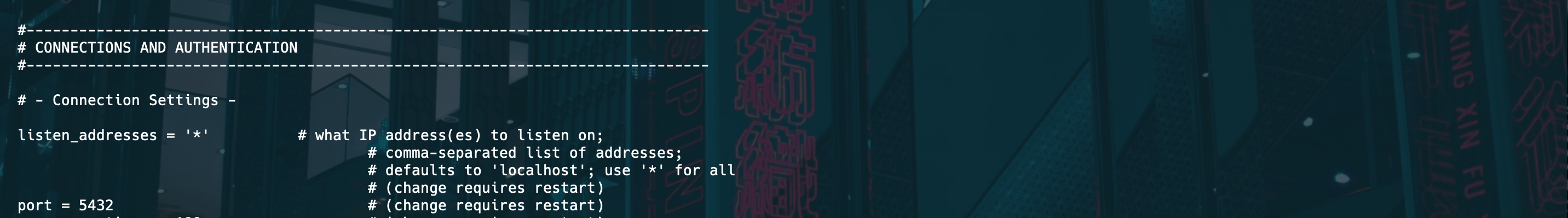

Open the PostgreSQL Configuration File:

    vi /var/lib/pgsql/12/data/postgresql.conf

Inside the File, Modify the Following Settings:

    # Change and uncomment the listen_addresses to '*':
    listen_addresses = '*'
    # Uncomment the following line (remove the '#' at the beginning):
    # port = 5432

Save and close the file.

Open the pg_hba.conf File:

    vi /var/lib/pgsql/12/data/pg_hba.conf

    # Add this line at the end of the file:
    host airflow airflow {host_IP}/32 md5

Replace {host_IP} with the actual IP address of the machine running Apache Airflow.

Save and close the file.
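When several Airflow hosts need access, the pg_hba.conf line above can be generated instead of typed by hand. A minimal sketch follows; `pg_hba_entry` is a hypothetical helper name, and `192.0.2.10` is a placeholder address, not a value from this guide.

```shell
# pg_hba_entry HOST_IP [DATABASE] [USER]
# Prints a pg_hba.conf line allowing one client IP, in the same form as the step above.
# pg_hba_entry is a hypothetical helper name; database and user default to "airflow".
pg_hba_entry() {
    host_ip=$1
    database=${2:-airflow}
    user=${3:-airflow}
    printf 'host %s %s %s/32 md5\n' "$database" "$user" "$host_ip"
}

# Usage (192.0.2.10 is a placeholder address). To append to the real file:
#   pg_hba_entry 192.0.2.10 | sudo tee -a /var/lib/pgsql/12/data/pg_hba.conf
pg_hba_entry 192.0.2.10
```

Printing the line first makes it easy to review before it is appended to the live configuration.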

- Restart PostgreSQL to Apply Changes:

    sudo systemctl restart postgresql-12

MySQL Database Setup for Airflow

To configure MySQL as the database backend for Apache Airflow, follow these steps:

- Install MySQL Server:

    sudo yum install mysql-server
    sudo yum install mysql-devel -y    # or: sudo yum install mariadb-devel -y

- Install the mysqlclient Python package:

    pip3.8 install mysqlclient

- Start the MySQL service:

    sudo systemctl start mysqld

- Secure MySQL Installation (Optional but Recommended):

    sudo mysql_secure_installation

Follow the prompts to secure the MySQL installation, including setting a root password.

- Create Database and User for Airflow:

    sudo mysql -u root -p

Enter the root password when prompted. Inside the MySQL shell:

    CREATE DATABASE airflow CHARACTER SET utf8 COLLATE utf8_unicode_ci;
    CREATE USER 'airflow'@'%' IDENTIFIED BY 'airflow';
    GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'%';
    FLUSH PRIVILEGES;
    EXIT;

Check the explicit_defaults_for_timestamp setting and enable it if it is OFF, as in the following session:

    Welcome to the MySQL monitor. Commands end with ; or \g.
    Your MySQL connection id is 102
    Server version: 5.7.42 MySQL Community Server (GPL)

    Copyright (c) 2000, 2023, Oracle and/or its affiliates.

    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    mysql> SHOW GLOBAL VARIABLES LIKE '%timestamp%';
    +---------------------------------+-------+
    | Variable_name                   | Value |
    +---------------------------------+-------+
    | explicit_defaults_for_timestamp | OFF   |
    | log_timestamps                  | UTC   |
    +---------------------------------+-------+
    2 rows in set (0.01 sec)

    mysql> SET GLOBAL explicit_defaults_for_timestamp = 1;
    Query OK, 0 rows affected (0.00 sec)

    mysql> SHOW GLOBAL VARIABLES LIKE '%timestamp%';
    +---------------------------------+-------+
    | Variable_name                   | Value |
    +---------------------------------+-------+
    | explicit_defaults_for_timestamp | ON    |
    | log_timestamps                  | UTC   |
    +---------------------------------+-------+
    2 rows in set (0.00 sec)

    mysql> exit;
    Bye

- Restart MySQL to Apply Changes:

    sudo systemctl restart mysqld

Now, the MySQL database is set up with a database named airflow and a user named airflow with the necessary privileges. Proceed to configure Apache Airflow to use this MySQL database as its backend.
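A value set with SET GLOBAL is lost when mysqld restarts, so the explicit_defaults_for_timestamp change made in the MySQL session above may need to be persisted in the server configuration. The sketch below writes the option to a scratch file for review. `write_timestamp_option` is a hypothetical helper, and the drop-in path `/etc/my.cnf.d/airflow.cnf` in the comment is an assumption about the host layout, so check where your MySQL build reads its configuration.

```shell
# write_timestamp_option FILE
# Writes a [mysqld] section that enables explicit_defaults_for_timestamp.
# Hypothetical helper; the drop-in file name mentioned below is an assumption.
write_timestamp_option() {
    cat > "$1" <<'EOF'
[mysqld]
explicit_defaults_for_timestamp = 1
EOF
}

# Usage: write to a scratch file first, review it, then copy it into place, e.g.
#   sudo cp "$scratch" /etc/my.cnf.d/airflow.cnf && sudo systemctl restart mysqld
scratch=$(mktemp)
write_timestamp_option "$scratch"
cat "$scratch"
```

After the restart, the SHOW GLOBAL VARIABLES query from the session above can confirm that the value is still ON.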

CentOS 7 Setup

Prerequisites

Install Dependencies:

    # Install development tools and dependencies
    yum install gcc openssl-devel wget bzip2-devel libffi-devel zlib-devel -y

    # Install SQLite:
    # Download SQLite source
    cd /opt
    wget https://www.sqlite.org/src/tarball/sqlite.tar.gz?r=release --no-check-certificate
    mv sqlite.tar.gz?r=release sqlite.tar.gz
    tar xzf sqlite.tar.gz
    # Navigate to the SQLite directory
    cd sqlite/
    # Configure SQLite
    ./configure --prefix=/usr
    # Install Tcl (a dependency for SQLite)
    sudo yum install tcl -y
    # Build and install SQLite
    make
    sudo make install
    # Check SQLite version
    sqlite3 --version
    # Print the current PATH
    echo $PATH

    # Change to the /opt directory
    cd /opt
    # Install required dependencies
    sudo yum install gcc openssl-devel bzip2-devel libffi-devel zlib-devel -y
    # Download Python 3.8.12 source tarball
    curl -O https://www.python.org/ftp/python/3.8.12/Python-3.8.12.tgz
    # Extract the tarball
    tar -zxvf Python-3.8.12.tgz
    # Change into the Python source directory
    cd Python-3.8.12/
    # Configure the build, enabling shared libraries
    ./configure --enable-shared
    # Build Python
    make
    # Install Python
    sudo make install
    # Copy libpython3.8.so to /lib64/
    sudo cp --no-clobber ./libpython3.8.so* /lib64/
    # Set the correct permissions for libpython3.8.so
    sudo chmod 755 /lib64/libpython3.8.so*
    # Add the path to the shared libraries to LD_LIBRARY_PATH in .bashrc
    echo "export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib/" >> ~/.bashrc
    # Reload .bashrc to apply changes
    source ~/.bashrc
    # Create a symbolic link from /usr/local/bin/python3.8 to /usr/bin/python3.8
    sudo ln -s /usr/local/bin/python3.8 /usr/bin/python3.8
    # Set permissions for the Python library directory
    sudo chmod -R 755 /usr/local/lib/python3.8
    # Check Python and SQLite versions
    python3.8 --version
    sqlite3 --version
    # Run ldconfig to update the system library cache
    sudo ldconfig
    # Check the SQLite version using Python 3.8
    python3.8 -c "import sqlite3; print(sqlite3.sqlite_version)"
    # Install additional development tools
    sudo yum -y groupinstall "Development Tools"

Check if the Python 3.8 executables are present in both locations, as shown, before proceeding with the Airflow installation.

    ll /usr/local/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Mar 10 11:20 /usr/local/bin/python3.8*
    ll /usr/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Feb 4 16:02 /usr/bin/python3.8*

Database Setup

Follow the respective instructions below for your chosen database system to initialize and configure it for use with Apache Airflow.

PostgreSQL Database Setup

To use PostgreSQL with Apache Airflow, follow these steps to install and configure it:

- Install the psycopg2-binary Python package:

    pip3.8 install psycopg2-binary

- Install PostgreSQL:

    # Install the repository RPM:
    sudo yum -y install https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm
    # Install EPEL and yum-utils, then enable the pgdg12 repository:
    sudo yum -y install epel-release yum-utils
    sudo yum-config-manager --enable pgdg12
    # Install PostgreSQL:
    sudo yum install postgresql12-server postgresql12 -y

- Initialize and Start PostgreSQL:

    # Optionally initialize the database and enable automatic start:
    sudo /usr/pgsql-12/bin/postgresql-12-setup initdb
    sudo systemctl enable --now postgresql-12
    sudo systemctl start postgresql-12

- Create PostgreSQL Database and User for Airflow:

To set up the database and user for Apache Airflow in PostgreSQL, follow these steps:

Access the PostgreSQL Shell:

    sudo -u postgres psql

Inside the PostgreSQL Shell, execute the following commands:

    -- Create the Airflow database:
    CREATE DATABASE airflow;
    -- Create the Airflow user with a password:
    CREATE USER airflow WITH PASSWORD 'airflow';
    -- Set client encoding, default transaction isolation, and timezone for the Airflow user:
    ALTER ROLE airflow SET client_encoding TO 'utf8';
    ALTER ROLE airflow SET default_transaction_isolation TO 'read committed';
    ALTER ROLE airflow SET timezone TO 'UTC';
    -- Grant all privileges on the Airflow database to the Airflow user:
    GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow;
    -- Exit the PostgreSQL shell:
    \q

Now, the PostgreSQL database named airflow and the user airflow with the specified settings and privileges have been created. Proceed with the next steps to configure Apache Airflow with this PostgreSQL database.

- Configure PostgreSQL Settings for Airflow:

After creating the Airflow database and user in PostgreSQL, modify the PostgreSQL configuration to allow connections from the Apache Airflow server. Follow these steps:

Open the PostgreSQL Configuration File:

    vi /var/lib/pgsql/12/data/postgresql.conf

Inside the File, Modify the Following Settings:

    # Change and uncomment the listen_addresses to '*':
    listen_addresses = '*'
    # Uncomment the following line (remove the '#' at the beginning):
    # port = 5432

Save and close the file.

Open the pg_hba.conf File:

    vi /var/lib/pgsql/12/data/pg_hba.conf

    # Add this line at the end of the file:
    host airflow airflow {host_IP}/32 md5

Replace {host_IP} with the actual IP address of the machine running Apache Airflow.

Save and close the file.

- Restart PostgreSQL to Apply Changes:

    sudo systemctl restart postgresql-12

MySQL Database Setup for Airflow

To set up MySQL as the database backend for Apache Airflow, follow these steps:

- Install MySQL Server:

    sudo yum install mysql-server
    sudo yum install mysql-devel -y    # or: sudo yum install mariadb-devel -y

- Install the mysqlclient Python package:

    pip3.8 install mysqlclient

- Start the MySQL service:

    sudo systemctl start mysqld

- Install MySQL Connector for Python:

    pip3.8 install mysql-connector-python
    pip3.8 install pymysql

- Secure MySQL Installation (Optional but Recommended):

    sudo mysql_secure_installation

Follow the prompts to secure the MySQL installation, including setting a root password.

- Create Database and User for Airflow:

    sudo mysql -u root -p

Enter the root password when prompted. Inside the MySQL shell:

    CREATE DATABASE airflow CHARACTER SET utf8 COLLATE utf8_unicode_ci;
    CREATE USER 'airflow'@'%' IDENTIFIED BY 'airflow';
    GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'%';
    FLUSH PRIVILEGES;
    EXIT;

- Restart MySQL to Apply Changes:

    sudo systemctl restart mysqld

Now, the MySQL database is set up with a database named airflow and a user named airflow with the necessary privileges. Proceed to configure Apache Airflow to use this MySQL database as its backend.

Ubuntu 20.04 Setup

Prerequisites

    apt update
    sudo add-apt-repository ppa:deadsnakes/ppa
    apt install python3.8 -y
    sudo apt install python3.8-venv
    sudo apt install -y python3.8-dev
    python3.8 --version
    pip3.8 --version

Check if the Python 3.8 executables are present in both locations, as shown, before proceeding with the Airflow installation.

    ll /usr/local/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Mar 10 11:20 /usr/local/bin/python3.8*
    ll /usr/bin/python3.8*
    -rwxr-xr-x 1 root root 5490520 Feb 4 16:02 /usr/bin/python3.8*

Database Setup

Follow the respective instructions below for your chosen database system to initialize and configure it for use with Apache Airflow.

PostgreSQL Database Setup

To use PostgreSQL with Apache Airflow, follow these steps to install and configure it:

- Install the psycopg2-binary Python package:

    pip3.8 install psycopg2-binary

- Install PostgreSQL:

    sudo apt install postgresql-12 postgresql-client-12

- Create PostgreSQL Database and User for Airflow:

To set up the database and user for Apache Airflow in PostgreSQL, follow these steps:

Access the PostgreSQL Shell:

    sudo -u postgres psql

Inside the PostgreSQL Shell, execute the following commands:

    -- Create the Airflow database:
    CREATE DATABASE airflow;
    -- Create the Airflow user with a password:
    CREATE USER airflow WITH PASSWORD 'airflow';
    -- Set client encoding, default transaction isolation, and timezone for the Airflow user:
    ALTER ROLE airflow SET client_encoding TO 'utf8';
    ALTER ROLE airflow SET default_transaction_isolation TO 'read committed';
    ALTER ROLE airflow SET timezone TO 'UTC';
    -- Grant all privileges on the Airflow database to the Airflow user:
    GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow;
    -- Exit the PostgreSQL shell:
    \q

Now, the PostgreSQL database named airflow and the user airflow with the specified settings and privileges have been created. Proceed with the next steps to configure Apache Airflow with this PostgreSQL database.

- Configure PostgreSQL Settings for Airflow:

After creating the Airflow database and user in PostgreSQL, modify the PostgreSQL configuration to allow connections from the Apache Airflow server. Follow these steps:

Open the PostgreSQL Configuration File:

    vi /etc/postgresql/12/main/postgresql.conf

Inside the File, Modify the Following Settings:

    # Change and uncomment the listen_addresses to '*':
    listen_addresses = '*'
    # Uncomment the following line (remove the '#' at the beginning):
    # port = 5432

Save and close the file.

Open the pg_hba.conf File:

    vi /etc/postgresql/12/main/pg_hba.conf

    # Add this line at the end of the file:
    host airflow airflow {host_IP}/32 md5

Replace {host_IP} with the actual IP address of the machine running Apache Airflow.

Save and close the file.

- Restart PostgreSQL to Apply Changes:

    sudo systemctl restart postgresql.service

MySQL Database Setup for Airflow (Optional)

To set up MySQL as the database backend for Apache Airflow, follow these steps:

- Install MySQL Server:

    wget http://repo.mysql.com/mysql-apt-config_0.8.12-1_all.deb
    sudo dpkg -i mysql-apt-config_0.8.12-1_all.deb
    sudo dpkg-reconfigure mysql-apt-config
    sudo apt update
    sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys B7B3B788A8D3785C
    sudo apt update
    sudo apt-cache policy mysql-server
    sudo apt install -f mysql-client=5.7*
    sudo apt install -f mysql-community-server=5.7*
    sudo apt install -f mysql-server=5.7*
    wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-j_8.0.32-1ubuntu20.04_all.deb
    sudo dpkg -i mysql-connector-j_8.0.32-1ubuntu20.04_all.deb
    ls /usr/share/java/mysql-connector-java.jar
    chmod 644 /usr/share/java/mysql-connector-j-8.0.32.jar

- Install the mysqlclient Python package:

    pip3.8 install mysqlclient

- Start MySQL Service:

    sudo systemctl start mysqld

- Install MySQL Connector for Python:

    pip3.8 install mysql-connector-python
    pip3.8 install pymysql

- Secure MySQL Installation (Optional but Recommended):

    sudo mysql_secure_installation

- Create Database and User for Airflow:

    sudo mysql -u root -p

Enter the root password when prompted. Inside the MySQL shell:

    CREATE DATABASE airflow CHARACTER SET utf8 COLLATE utf8_unicode_ci;
    CREATE USER 'airflow'@'%' IDENTIFIED BY 'airflow';
    GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'%';
    FLUSH PRIVILEGES;
    EXIT;

- Restart MySQL to Apply Changes:

    sudo systemctl restart mysqld

Now, the MySQL database is set up with a database named airflow and a user named airflow with the necessary privileges. Proceed to configure Apache Airflow to use this MySQL database as its backend.

Apache Airflow Installation using Mpack on Ambari

Create symbolic links for Python to use Python 3.8:

    sudo ln -sf /usr/bin/python3.8 /usr/bin/python3
    sudo ln -sf /usr/bin/pip3.8 /usr/bin/pip3

The following steps install and set up Apache Airflow using a Management Pack (Mpack) on an Ambari-managed cluster.

Install and Configure Mpack:

- Install Mpack:

    ambari-server install-mpack --mpack=ambari-mpacks-airflow-2.8.1.tar.gz --verbose

- Uninstall Previous Mpack (if needed):

    ambari-server uninstall-mpack --mpack-name=airflow-ambari-mpack

- Change Symlinks:

- Navigate to the services directory and update the Airflow symlink for each service version:

    cd /var/lib/ambari-server/resources/stacks/ODP/3.0/services
    unlink AIRFLOW
    ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

    cd /var/lib/ambari-server/resources/stacks/ODP/3.1/services
    unlink AIRFLOW
    ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

    cd /var/lib/ambari-server/resources/stacks/ODP/3.2/services
    unlink AIRFLOW
    ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

    cd /var/lib/ambari-server/resources/stacks/ODP/3.3/services
    unlink AIRFLOW
    ln -s /var/lib/ambari-server/resources/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1 AIRFLOW

- Restart Ambari Server:

    ambari-server restart

Your Apache Airflow installation is now configured and ready for use on your Ambari-managed cluster.
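The symlink commands in the Change Symlinks step differ only in the ODP stack version, so they can also be written as a loop. The following is a minimal sketch assuming the same directory layout as that step; `relink_airflow` and its root-directory argument are hypothetical, and stack versions that do not exist on the server are skipped. It assumes GNU `ln`, whose `-n` option replaces an existing symlink rather than following it.

```shell
# relink_airflow RESOURCES_ROOT
# Points stacks/ODP/<version>/services/AIRFLOW at the 2.8.1 Mpack for each stack version.
# Hypothetical helper; assumes any existing AIRFLOW entry is a symlink, as in the manual steps.
relink_airflow() {
    resources_root=$1
    target="$resources_root/mpacks/airflow-ambari-mpack-2.8.1/common-services/AIRFLOW/2.8.1"
    for stack_version in 3.0 3.1 3.2 3.3; do
        services_dir="$resources_root/stacks/ODP/$stack_version/services"
        # Skip stack versions that are not present on this server.
        [ -d "$services_dir" ] || continue
        # -f removes an existing link; -n stops ln from following it into the old target.
        ln -sfn "$target" "$services_dir/AIRFLOW"
    done
}

# Usage on the Ambari server host, followed by the restart step:
#   relink_airflow /var/lib/ambari-server/resources
```

Taking the resources directory as an argument also allows a dry run against a copy of the tree before touching the live Ambari server.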

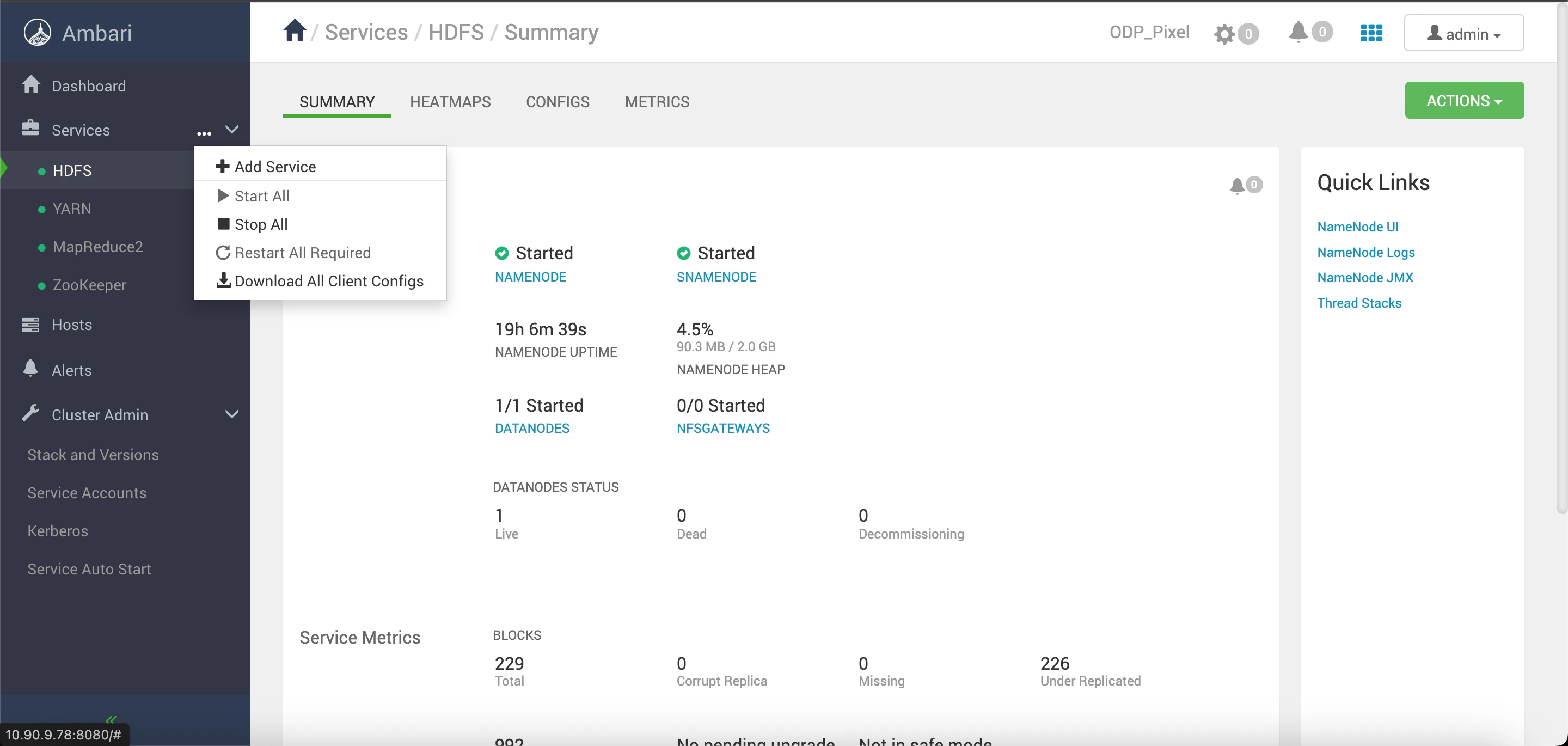

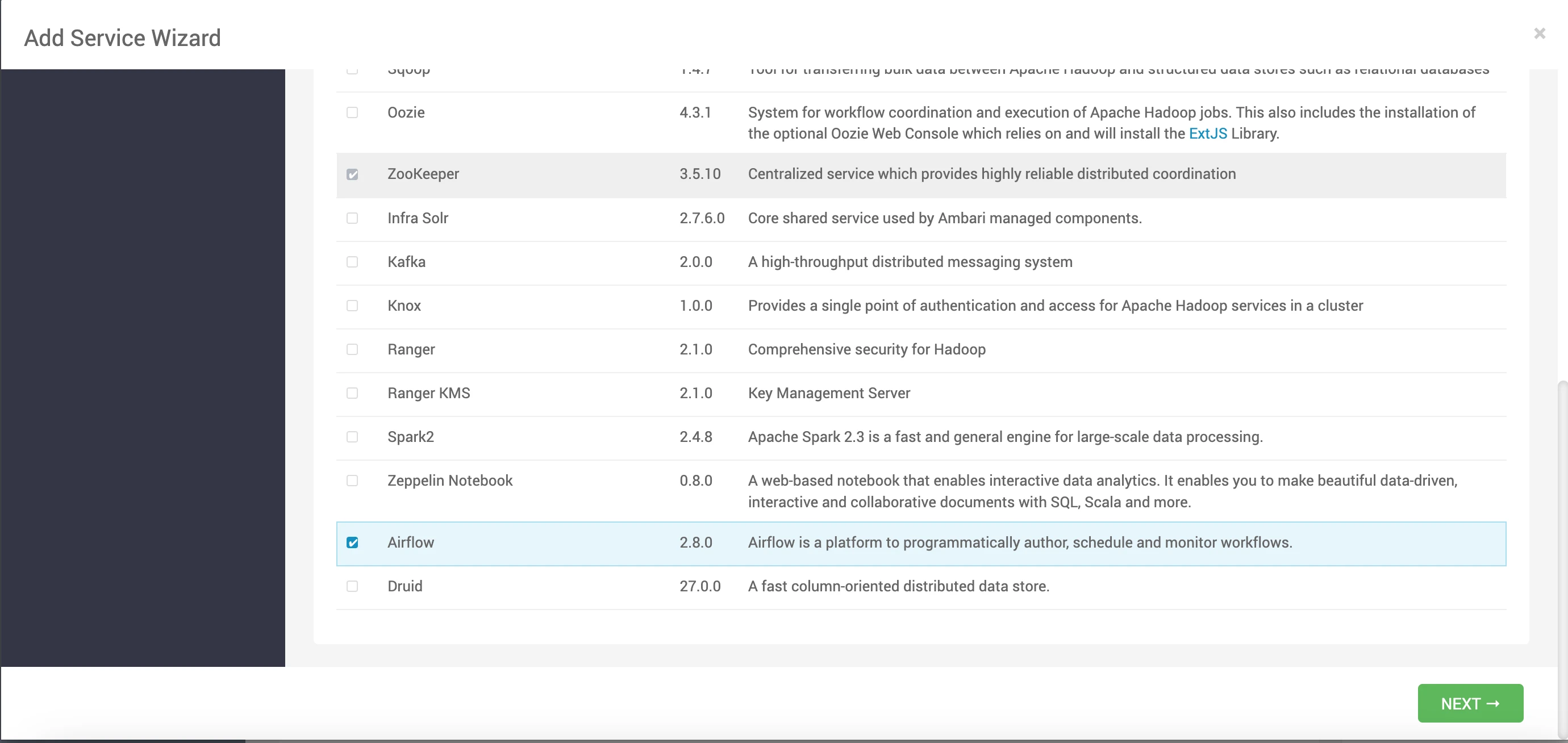

Steps to install Apache Airflow from the Ambari UI

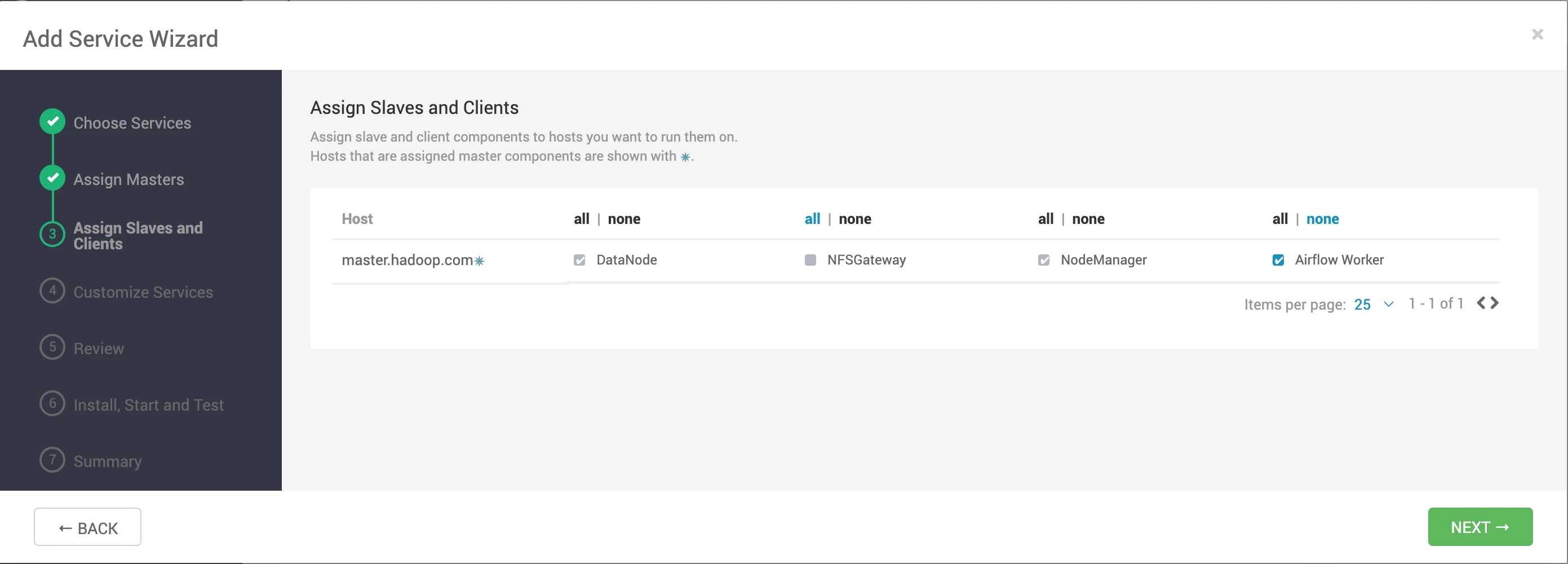

- Add the Airflow service from the Ambari UI.

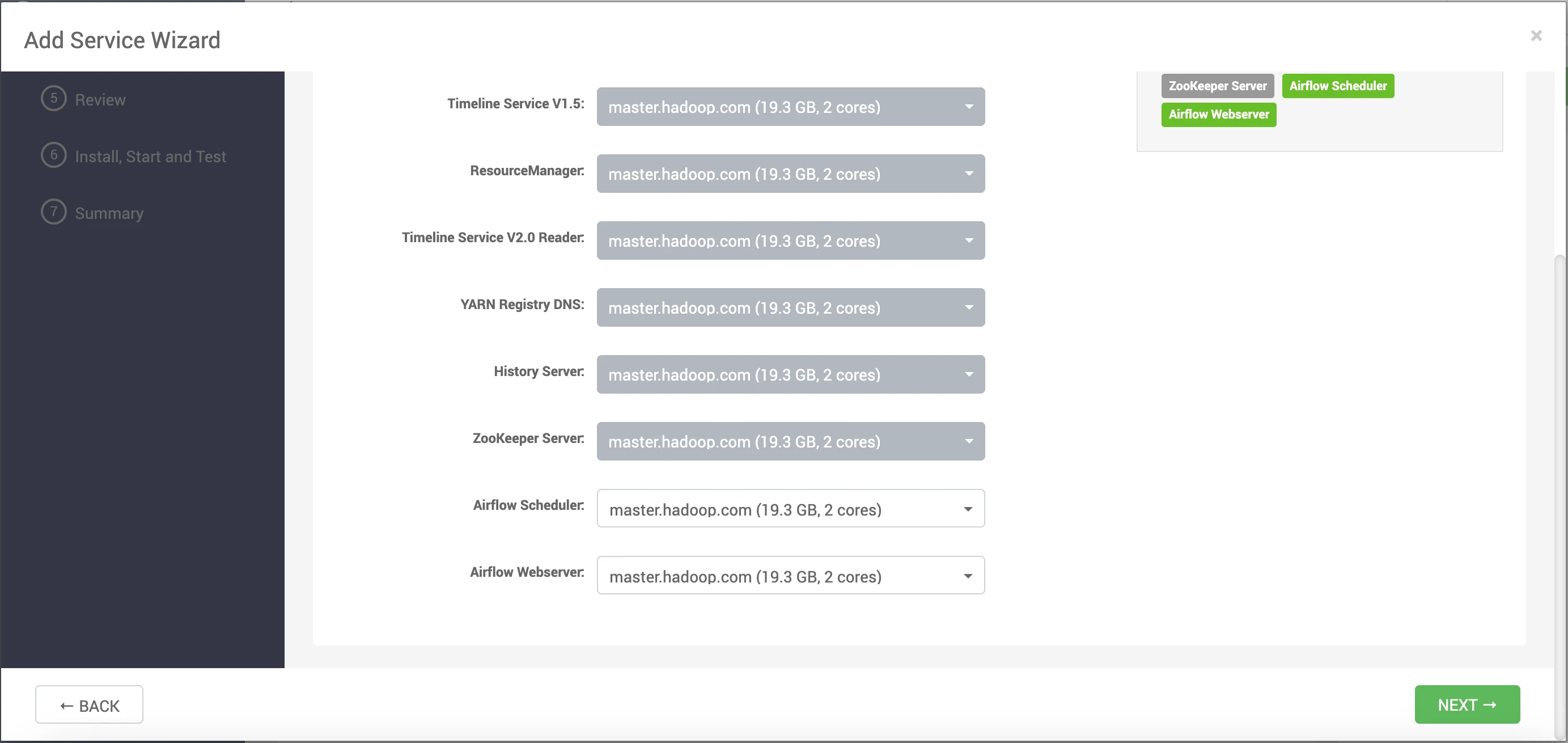

- Specify the host details for the Airflow Scheduler and Airflow Webserver.

- Choose the slave client configuration.

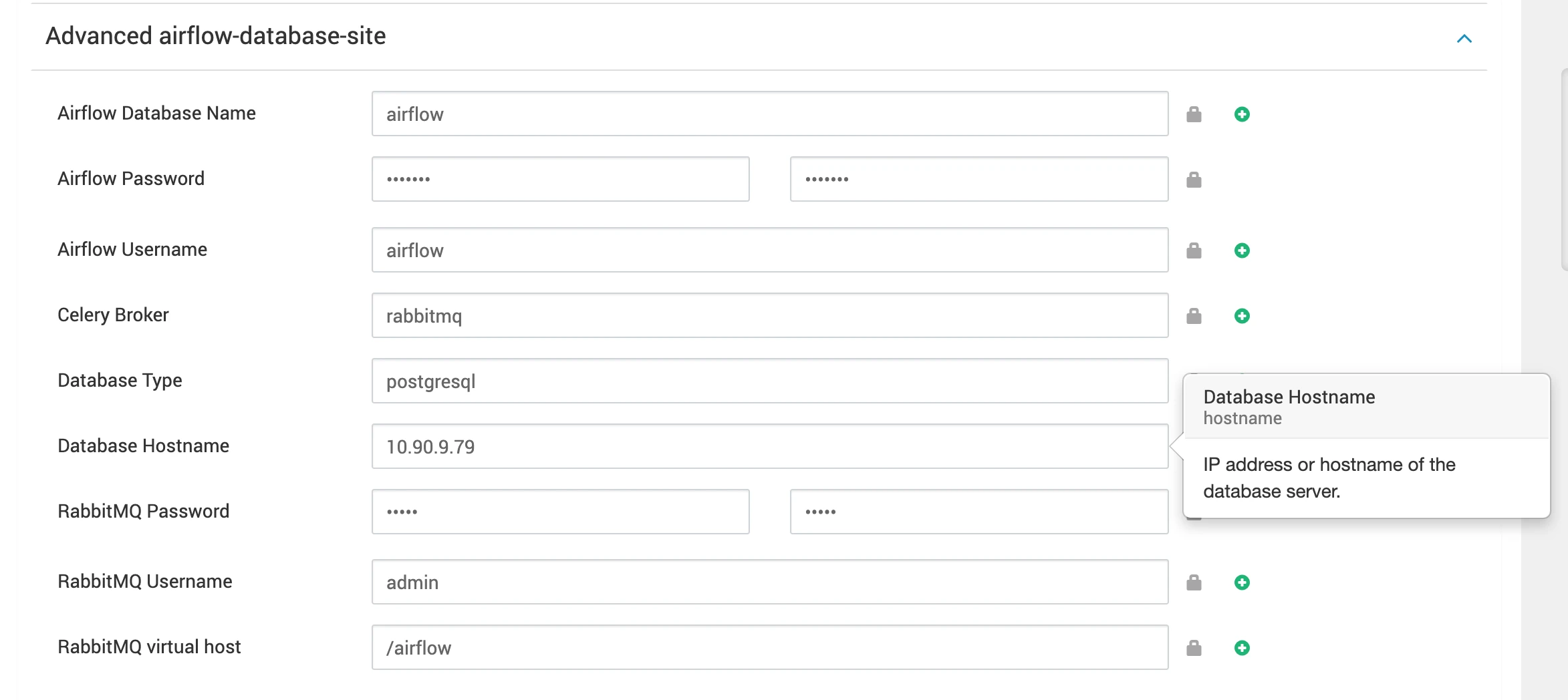

- Modify or customize the fields as needed.

Database Options:

Choose between MySQL or PostgreSQL as the backend database:

Configure the Airflow backend database connection string and Celery settings. You are prompted for the database name, password, username, database type (MySQL or PostgreSQL), and host IP. The provided script then generates the configuration details for the database connection string and Celery settings automatically.

Enter the database information in the Ambari UI:

- Database Name

- Password

- Username

- Database Type: Choose between mysql or postgresql.

- Host IP
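To illustrate how these five fields combine, here is a sketch of a SQLAlchemy-style connection string of the kind Airflow reads from its `sql_alchemy_conn` setting. It is not the Mpack's script, which may build the string differently; `airflow_db_uri` is a hypothetical helper, the default ports 5432 and 3306 are assumed, and `192.0.2.10` is a placeholder host.

```shell
# airflow_db_uri TYPE USER PASSWORD HOST DATABASE
# Prints a SQLAlchemy URI for the chosen backend (TYPE is mysql or postgresql).
# Hypothetical helper; default ports are assumed and the password is not URL-encoded.
airflow_db_uri() {
    case "$1" in
        postgresql) printf 'postgresql+psycopg2://%s:%s@%s:5432/%s\n' "$2" "$3" "$4" "$5" ;;
        mysql)      printf 'mysql+mysqldb://%s:%s@%s:3306/%s\n' "$2" "$3" "$4" "$5" ;;
        *)          echo "unsupported database type: $1" >&2; return 1 ;;
    esac
}

# Usage with the sample credentials from this page and a placeholder host:
airflow_db_uri postgresql airflow airflow 192.0.2.10 airflow
```

The driver names match the Python packages installed earlier (psycopg2-binary for PostgreSQL, mysqlclient for MySQL). Passwords containing characters such as `@` or `/` would need URL-encoding before being placed in the string.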

If you are using RabbitMQ, set it up and provide the following RabbitMQ configuration values:

- RabbitMQ Username

- RabbitMQ Password

- RabbitMQ virtual host

- Celery Broker
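For orientation, a Celery broker URL for RabbitMQ is typically assembled from the username, password, host, and virtual host. The sketch below assumes RabbitMQ's default AMQP port 5672; `rabbitmq_broker_url` is a hypothetical helper, and the credentials, host, and virtual host shown are placeholders rather than values from this guide.

```shell
# rabbitmq_broker_url USER PASSWORD HOST VHOST
# Prints an AMQP URL of the form Celery accepts; 5672 is RabbitMQ's default AMQP port.
# Hypothetical helper; all values in the usage line are placeholders.
rabbitmq_broker_url() {
    printf 'amqp://%s:%s@%s:5672/%s\n' "$1" "$2" "$3" "$4"
}

# Usage:
rabbitmq_broker_url airflow secret 192.0.2.20 airflow_vhost
```

Whether the Ambari form expects the full URL or only the individual fields depends on the Mpack, so treat the output as a reference for what the generated setting should resemble.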

Once you have provided all the necessary details, click on the Next button.

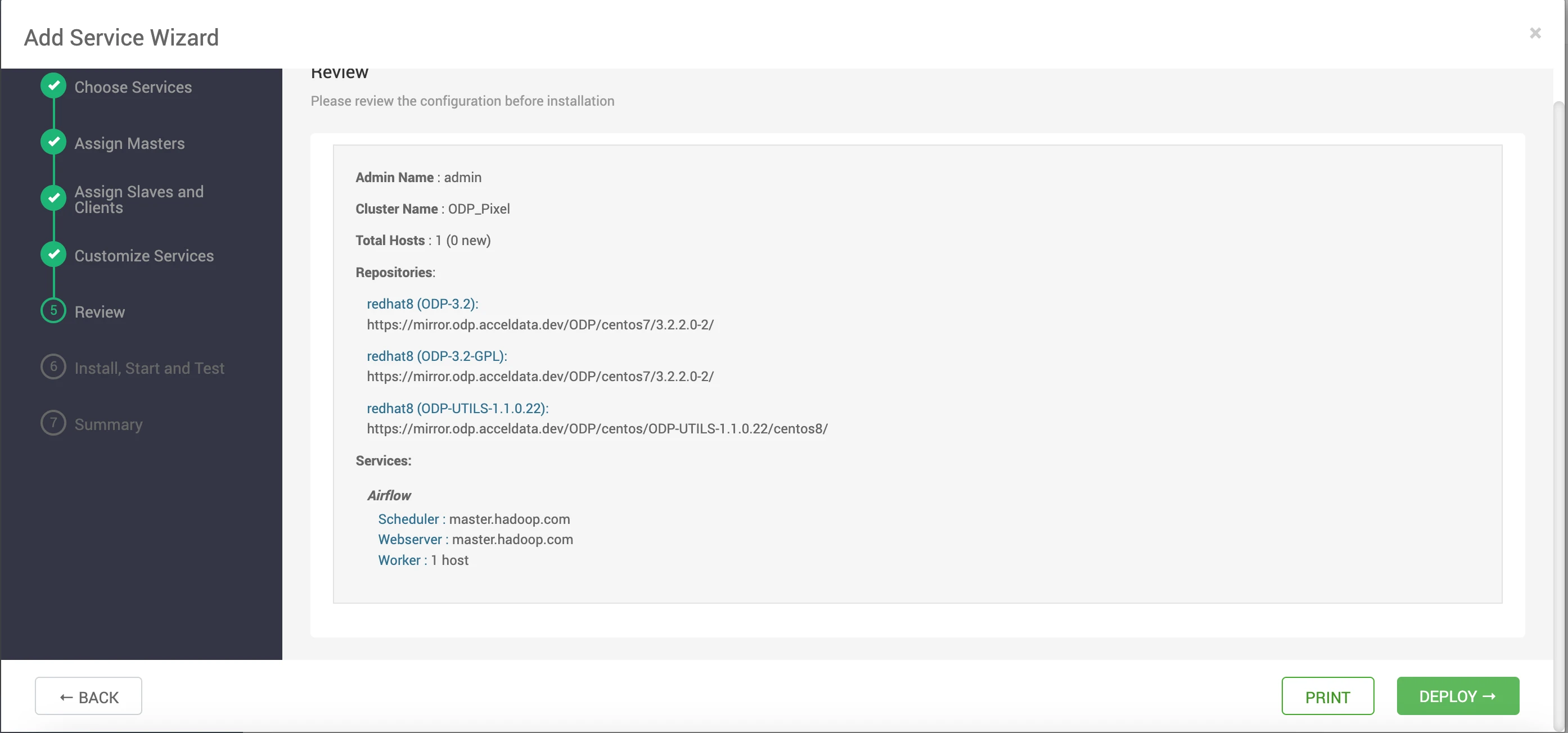

- Deploy the Airflow service.

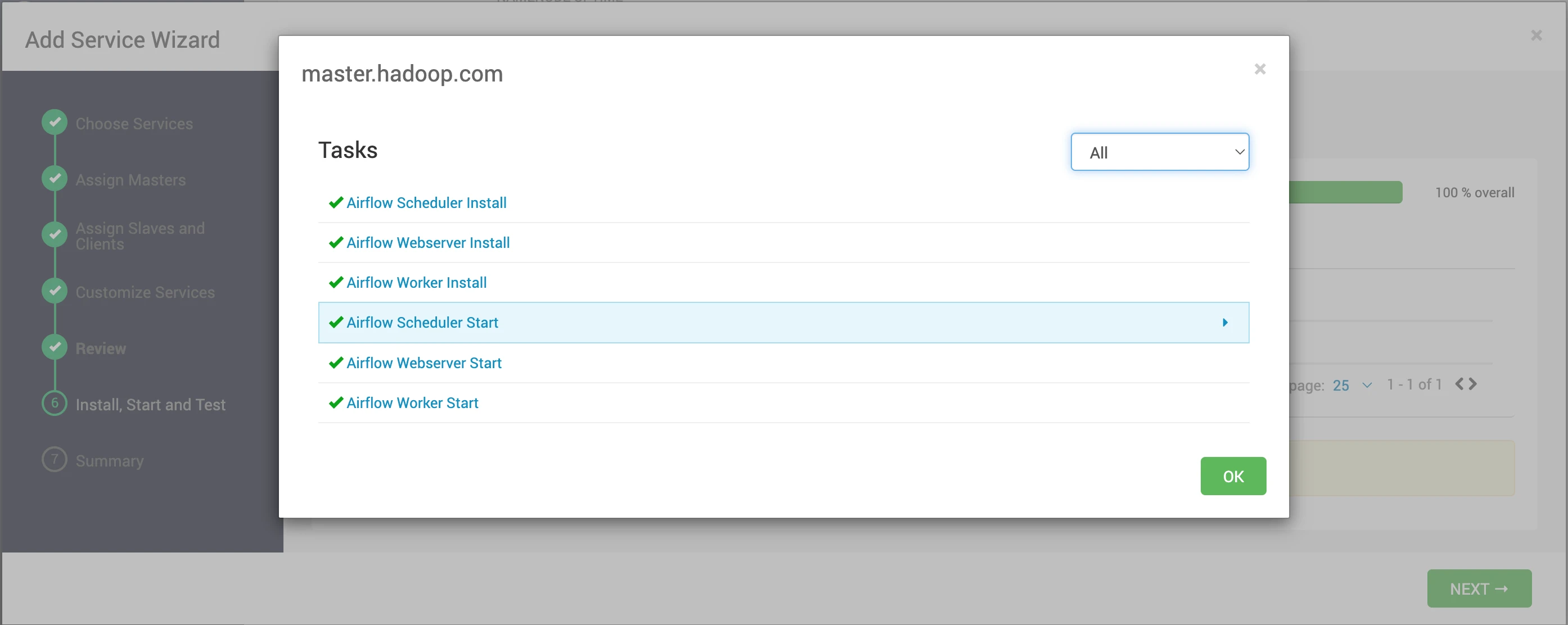

- This step will install all the necessary components and initiate the service.

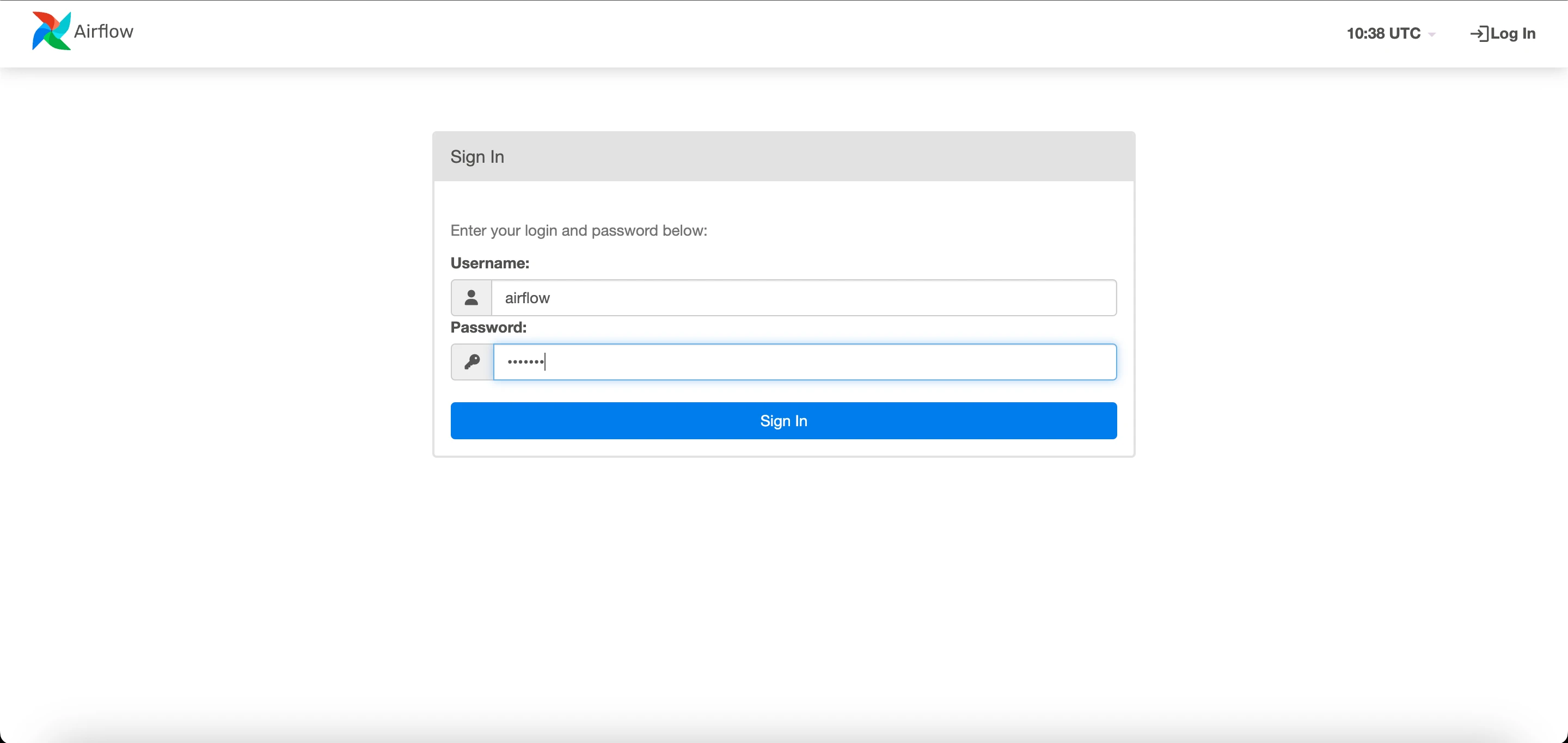

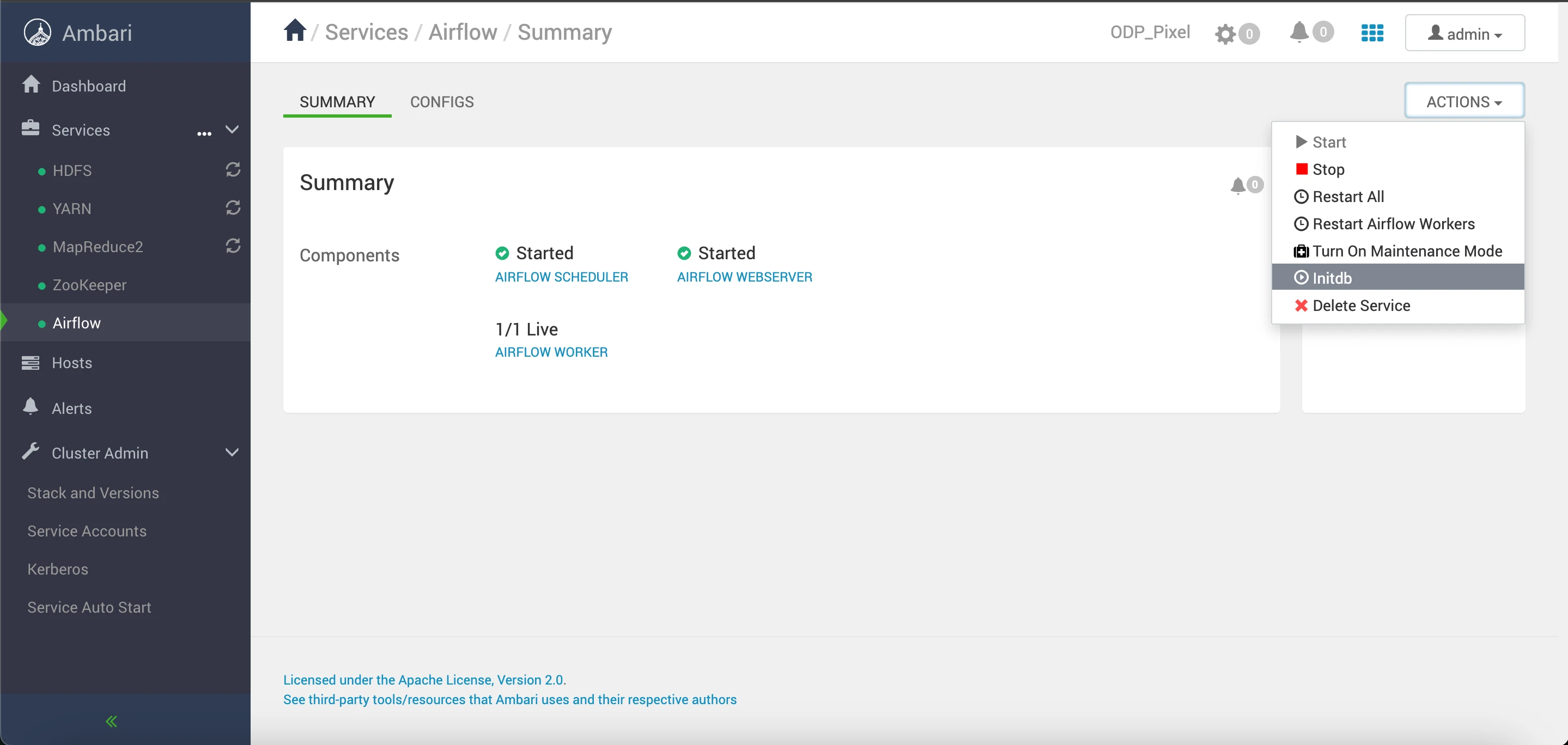

- The Airflow webserver is up and running. To access the UI, you need to create a username and password. To create the admin user, you must run the initdb command from the Ambari UI.

This command will generate an admin user named "airflow" with the password "airflow."

You can utilize these credentials (username: "airflow", password: "airflow") to log in and access the Airflow webserver UI.

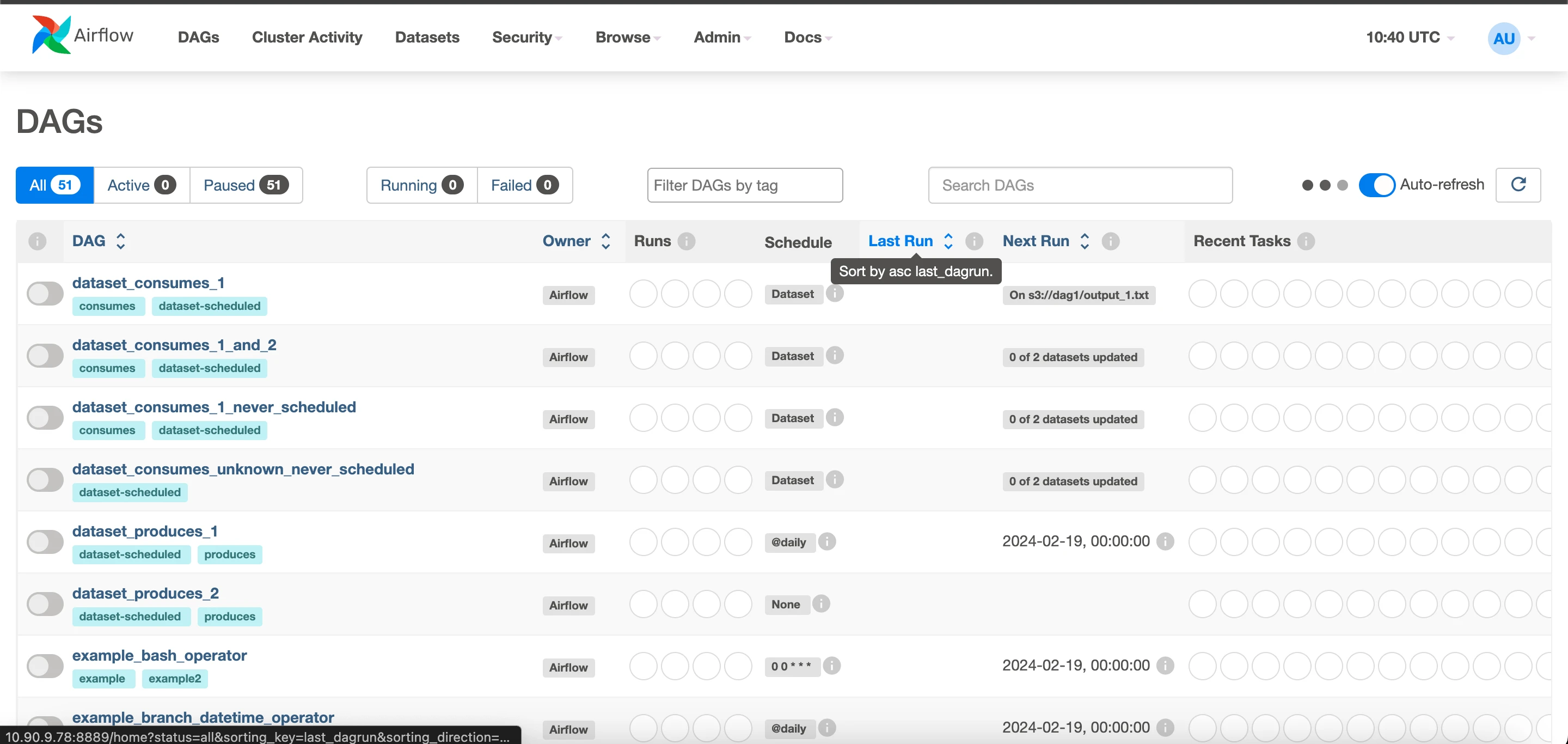

On completion of the database initialization, you can access the Airflow Webserver UI. Enter the provided credentials ("airflow" as the username and "airflow" as the password) to log in and access the Airflow webserver UI.