ODP 3.3.6.3-1


Leverage HDFS as Deep Storage for Real-Time Tables

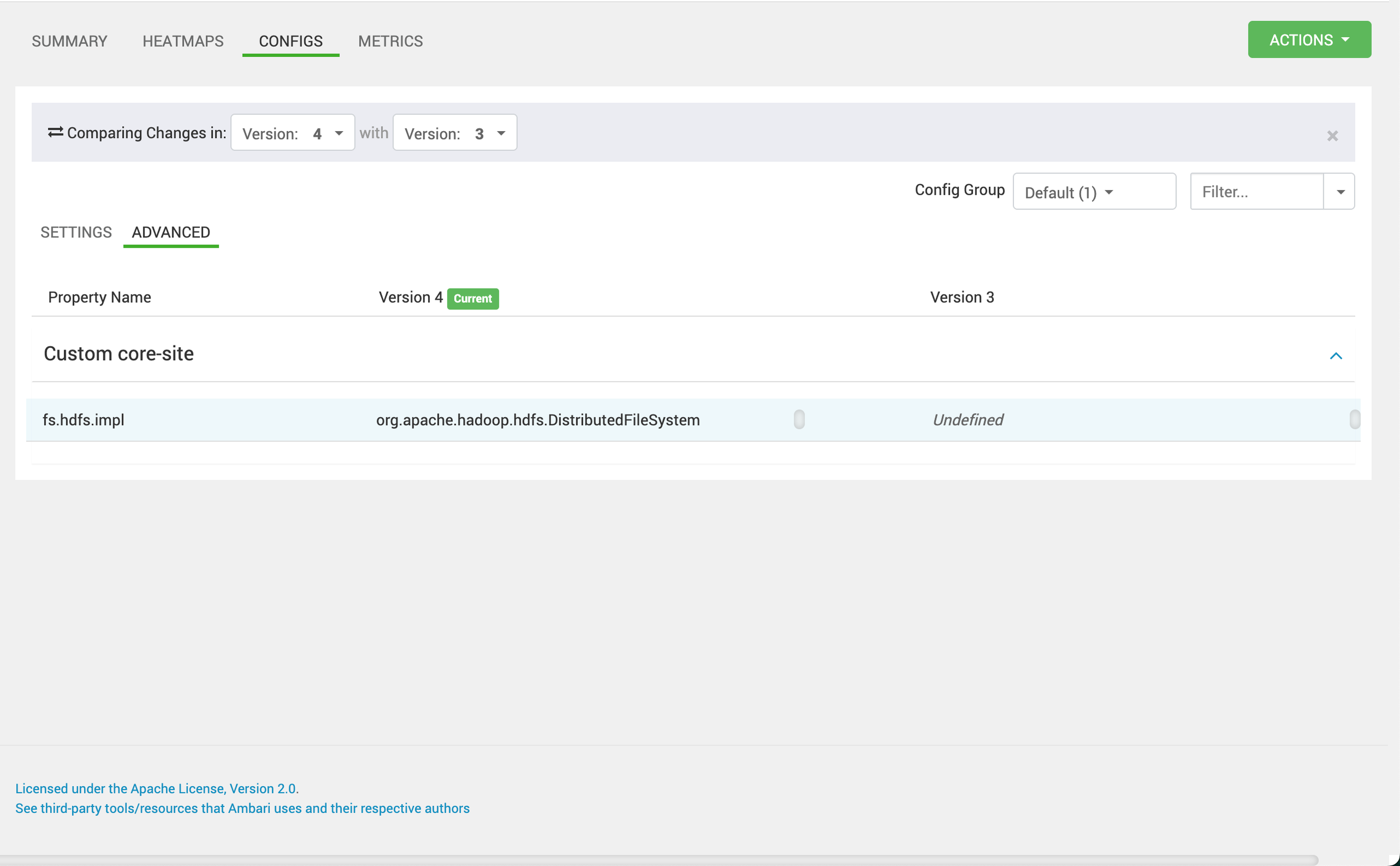

Configure Hadoop Filesystem Settings

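Ensure that the core-site.xml and hdfs-site.xml files are correctly configured and readable on the Pinot controller and server hosts. These files define the Hadoop filesystem settings, including the default filesystem URI and any necessary authentication configuration. The Pinot configuration below reads them from /usr/odp/3.3.6.3-1/hadoop/conf. For example, the hdfs:// scheme should map to the HDFS client implementation: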

Bash

fs.hdfs.impl=org.apache.hadoop.hdfs.DistributedFileSystem
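Before pointing Pinot at this directory, it can help to confirm what core-site.xml actually contains. The following is a minimal Python sketch, not part of ODP or Pinot, that reads the standard Hadoop property/name/value layout with the standard library and flags two common problems. The function names are hypothetical, and treating any fs.defaultFS other than an hdfs:// URI as a problem is an assumption that does not hold for federated (viewfs://) clusters.

```python
import xml.etree.ElementTree as ET


def read_hadoop_properties(xml_text: str) -> dict:
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def check_hdfs_settings(props: dict) -> list:
    """Return problems found in core-site.xml properties (empty list if none)."""
    problems = []
    # Assumption: a non-federated cluster whose default filesystem is HDFS.
    default_fs = props.get("fs.defaultFS", "")
    if not default_fs.startswith("hdfs://"):
        problems.append(f"fs.defaultFS is not an hdfs:// URI: {default_fs!r}")
    # fs.hdfs.impl is optional; if it is set, it should name the HDFS client class.
    impl = props.get("fs.hdfs.impl")
    if impl is not None and impl != "org.apache.hadoop.hdfs.DistributedFileSystem":
        problems.append(f"unexpected fs.hdfs.impl: {impl!r}")
    return problems
```

To use it on a node, read /usr/odp/3.3.6.3-1/hadoop/conf/core-site.xml (the path used by the Pinot configuration on this page) and pass its text to read_hadoop_properties, then pass the result to check_hdfs_settings.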

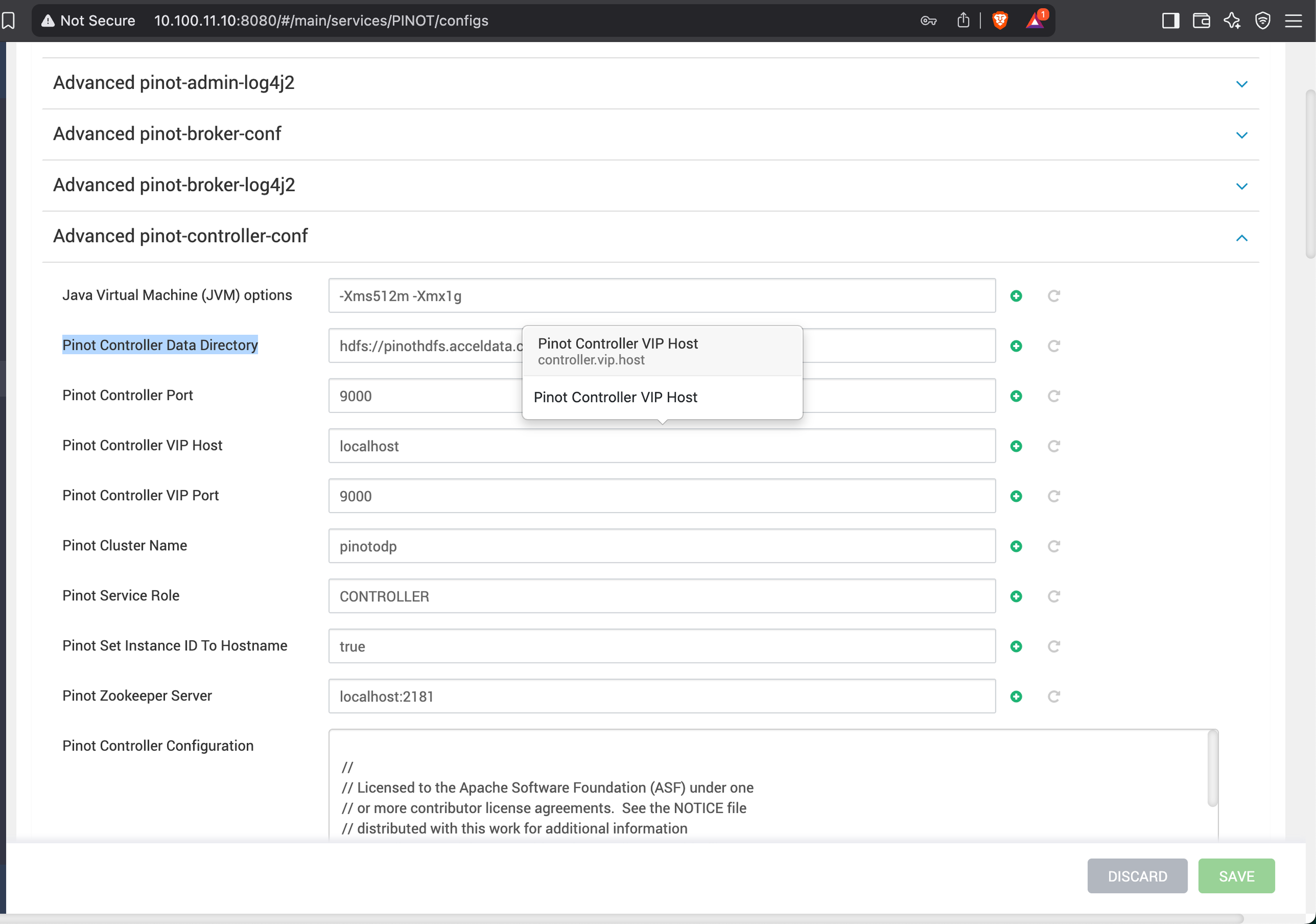

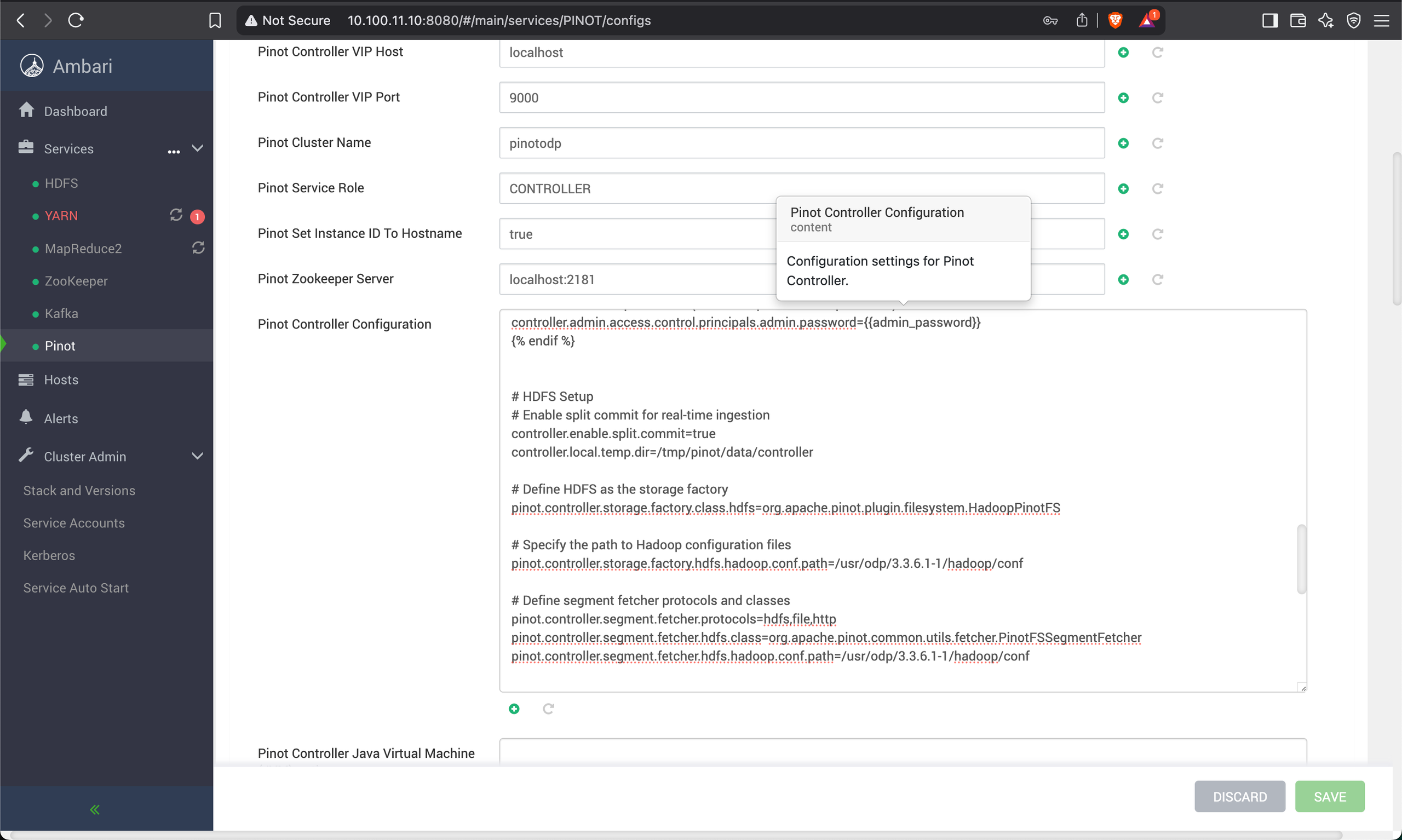

Update Pinot Controller Configuration

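Modify the controller.conf file to include the following properties. First, set the Pinot Controller Data Directory (the controller.data.dir property) to the HDFS location where segments are stored:

Bash

controller.data.dir=hdfs://pinothdfs.acceldata.ce/pinot/segments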
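Segments only land on HDFS if controller.data.dir is an hdfs:// URI; a plain local path keeps them on the controller's local disk. A minimal Python sketch (a hypothetical helper, standard library only) that splits the value into the NameNode or nameservice authority and the absolute path, and rejects anything that is not an HDFS URI:

```python
from urllib.parse import urlparse


def parse_deep_store_uri(data_dir: str) -> tuple:
    """Split an hdfs:// controller.data.dir value into (authority, path).

    Raises ValueError if the value would not place segments on HDFS.
    """
    parsed = urlparse(data_dir)
    if parsed.scheme != "hdfs":
        raise ValueError(f"expected an hdfs:// URI, got scheme {parsed.scheme!r}")
    if not parsed.path.startswith("/"):
        raise ValueError("expected an absolute HDFS path after the authority")
    # netloc is the NameNode host[:port] or an HA nameservice ID; it is empty
    # for URIs such as hdfs:///pinot/segments that rely on fs.defaultFS.
    return parsed.netloc, parsed.path.rstrip("/")
```

For the value above, this yields the authority pinothdfs.acceldata.ce and the path /pinot/segments.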

Bash

# HDFS Setup
# Enable split commit for real-time ingestion
controller.enable.split.commit=true
controller.local.temp.dir=/tmp/pinot/data/controller
# Define HDFS as the storage factory
pinot.controller.storage.factory.class.hdfs=org.apache.pinot.plugin.filesystem.HadoopPinotFS
# Specify the path to Hadoop configuration files
pinot.controller.storage.factory.hdfs.hadoop.conf.path=/usr/odp/3.3.6.3-1/hadoop/conf
# Define segment fetcher protocols and classes
pinot.controller.segment.fetcher.protocols=hdfs,file,http
pinot.controller.segment.fetcher.hdfs.class=org.apache.pinot.common.utils.fetcher.PinotFSSegmentFetcher
pinot.controller.segment.fetcher.hdfs.hadoop.conf.path=/usr/odp/3.3.6.3-1/hadoop/conf
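The controller settings are plain key=value lines, so a quick sanity check can be scripted. A minimal Python sketch (hypothetical helpers, standard library only) that parses the lines and checks that split commit, the HDFS storage factory, and the HDFS segment fetcher are set as on this page. The parser is deliberately simple: it skips # comments and blank lines, and it does not handle Java properties escapes, ":" separators, or line continuations.

```python
def parse_properties(text: str) -> dict:
    """Minimal key=value parser; skips blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


# Values copied from the controller configuration on this page.
REQUIRED_CONTROLLER_KEYS = {
    "controller.enable.split.commit": "true",
    "pinot.controller.storage.factory.class.hdfs": "org.apache.pinot.plugin.filesystem.HadoopPinotFS",
    "pinot.controller.segment.fetcher.hdfs.class": "org.apache.pinot.common.utils.fetcher.PinotFSSegmentFetcher",
}


def check_controller_conf(props: dict) -> list:
    """Return problems with the HDFS-related controller settings (empty if none)."""
    problems = []
    for key, expected in REQUIRED_CONTROLLER_KEYS.items():
        actual = props.get(key)
        if actual != expected:
            problems.append(f"{key}: expected {expected!r}, found {actual!r}")
    # The fetcher protocol list must include hdfs for segments to be downloaded from HDFS.
    protocols = {p.strip() for p in props.get("pinot.controller.segment.fetcher.protocols", "").split(",")}
    if "hdfs" not in protocols:
        problems.append("pinot.controller.segment.fetcher.protocols does not include hdfs")
    # Both HDFS components need to know where the Hadoop configuration lives.
    for key in ("pinot.controller.storage.factory.hdfs.hadoop.conf.path",
                "pinot.controller.segment.fetcher.hdfs.hadoop.conf.path"):
        if not props.get(key):
            problems.append(f"{key} is not set")
    return problems
```

For example, passing the text of controller.conf through parse_properties and then check_controller_conf returns an empty list when these settings are present.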

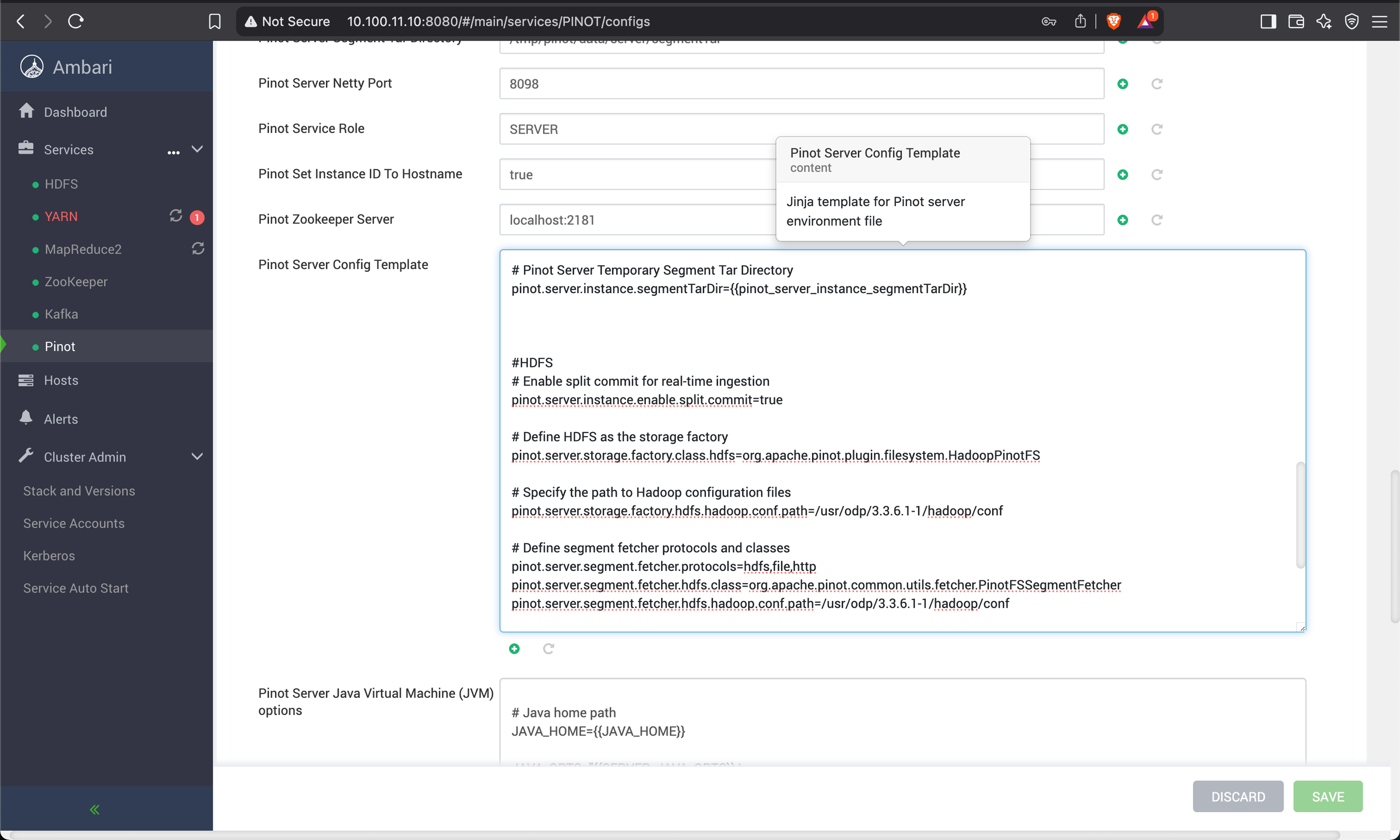

Update Pinot Server Configuration

Modify the server.conf file to include the following properties.

Bash

# HDFS
# Enable split commit for real-time ingestion
pinot.server.instance.enable.split.commit=true
# Define HDFS as the storage factory
pinot.server.storage.factory.class.hdfs=org.apache.pinot.plugin.filesystem.HadoopPinotFS
# Specify the path to Hadoop configuration files
pinot.server.storage.factory.hdfs.hadoop.conf.path=/usr/odp/3.3.6.3-1/hadoop/conf
# Define segment fetcher protocols and classes
pinot.server.segment.fetcher.protocols=hdfs,file,http
pinot.server.segment.fetcher.hdfs.class=org.apache.pinot.common.utils.fetcher.PinotFSSegmentFetcher
pinot.server.segment.fetcher.hdfs.hadoop.conf.path=/usr/odp/3.3.6.3-1/hadoop/conf
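The server settings mirror the controller settings, using the pinot.server. prefix instead of pinot.controller., and on this page both components read the same Hadoop configuration directory. A minimal Python sketch (a hypothetical helper) that compares the two sets of HDFS settings, given each file already parsed into a dict of key=value pairs, and confirms that split commit is enabled on both sides as configured here:

```python
# Settings this page defines under both the pinot.controller. and pinot.server. prefixes.
SHARED_SUFFIXES = (
    "storage.factory.class.hdfs",
    "storage.factory.hdfs.hadoop.conf.path",
    "segment.fetcher.hdfs.class",
    "segment.fetcher.hdfs.hadoop.conf.path",
)


def compare_hdfs_settings(controller: dict, server: dict) -> list:
    """Return human-readable mismatches between controller and server settings."""
    mismatches = []
    for suffix in SHARED_SUFFIXES:
        c_val = controller.get("pinot.controller." + suffix)
        s_val = server.get("pinot.server." + suffix)
        # A key missing on either side also counts as a mismatch.
        if c_val is None or c_val != s_val:
            mismatches.append(f"{suffix}: controller={c_val!r}, server={s_val!r}")
    # The split-commit flags use different key names on each component.
    if controller.get("controller.enable.split.commit") != "true":
        mismatches.append("controller.enable.split.commit is not true")
    if server.get("pinot.server.instance.enable.split.commit") != "true":
        mismatches.append("pinot.server.instance.enable.split.commit is not true")
    return mismatches
```

An empty result means the controller and server agree on how to reach HDFS; any entry names the setting to fix.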

Restart HDFS and Pinot

Create a Real-Time Table for Kafka

Define Pinot Schema

Create the file /tmp/pinot/schema-stream.json with the following content:

JSON

{
  "schemaName": "eventsnew",
  "dimensionFieldSpecs": [
    { "name": "uuid", "dataType": "STRING" }
  ],
  "metricFieldSpecs": [
    { "name": "count", "dataType": "INT" }
  ],
  "dateTimeFieldSpecs": [
    {
      "name": "ts",
      "dataType": "TIMESTAMP",
      "format": "1:MILLISECONDS:EPOCH",
      "granularity": "1:MILLISECONDS"
    }
  ]
}

Define Pinot Table Config

Create the file /tmp/pinot/table-config-stream.json with the content shown below.

Update the following in table-config-stream.json:

- The table and schema names.
- stream.kafka.broker.list, based on your Kafka broker list.
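When you change these values, the schema file and the table config have to stay consistent with each other. A minimal Python sketch (a hypothetical helper operating on the two files already loaded with json.load) that cross-checks them. Requiring tableName, segmentsConfig.schemaName, and the schema's schemaName to be identical follows this page's example (all are eventsnew) and is an assumption of the sketch; it also checks that timeColumnName refers to a dateTimeFieldSpec and that the {hostname}or{IP} placeholder in the broker list was replaced.

```python
def check_schema_and_table(schema: dict, table: dict) -> list:
    """Return problems found when cross-checking the schema and table config dicts."""
    problems = []
    schema_name = schema.get("schemaName")
    segments = table.get("segmentsConfig", {})

    # This page uses one name for the schema, segmentsConfig.schemaName, and the table.
    if segments.get("schemaName") != schema_name:
        problems.append("segmentsConfig.schemaName does not match schemaName in the schema file")
    if table.get("tableName") != schema_name:
        problems.append("tableName does not match schemaName in the schema file")

    # The time column should be one of the schema's dateTimeFieldSpecs.
    datetime_fields = {f.get("name") for f in schema.get("dateTimeFieldSpecs", [])}
    if segments.get("timeColumnName") not in datetime_fields:
        problems.append(f"timeColumnName {segments.get('timeColumnName')!r} is not a dateTimeFieldSpec")

    # Every broker entry should be host:port with the placeholder replaced.
    stream = table.get("tableIndexConfig", {}).get("streamConfigs", {})
    brokers = [b.strip() for b in stream.get("stream.kafka.broker.list", "").split(",") if b.strip()]
    if not brokers:
        problems.append("stream.kafka.broker.list is empty")
    for entry in brokers:
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit() or "{" in host:
            problems.append(f"broker entry {entry!r} is not a resolved host:port")
    return problems
```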

JSON

{
  "tableName": "eventsnew",
  "tableType": "REALTIME",
  "segmentsConfig": {
    "timeColumnName": "ts",
    "schemaName": "eventsnew",
    "replicasPerPartition": "1"
  },
  "tenants": {},
  "tableIndexConfig": {
    "loadMode": "MMAP",
    "streamConfigs": {
      "streamType": "kafka",
      "stream.kafka.consumer.type": "lowlevel",
      "stream.kafka.topic.name": "events",
      "stream.kafka.decoder.class.name": "org.apache.pinot.plugin.stream.kafka.KafkaJSONMessageDecoder",
      "stream.kafka.consumer.factory.class.name": "org.apache.pinot.plugin.stream.kafka20.KafkaConsumerFactory",
      "stream.kafka.broker.list": "{hostname}or{IP}:6667",
      "realtime.segment.flush.threshold.rows": "0",
      "realtime.segment.flush.threshold.time": "1m",
      "realtime.segment.flush.threshold.segment.size": "50M",
      "stream.kafka.consumer.prop.auto.offset.reset": "smallest"
    }
  },
  "metadata": {
    "customConfigs": {}
  }
}

Create Pinot Schema and Table

Run the following command:

Bash

bin/pinot-admin.sh AddTable -schemaFile /tmp/pinot/schema-stream.json -tableConfigFile /tmp/pinot/table-config-stream.json -controllerHost {hostname}or{IP} -controllerPort 9000 -exec
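Once the table exists, Pinot consumes JSON messages from the events topic using KafkaJSONMessageDecoder, and segments are committed to HDFS as they reach the flush thresholds. A minimal Python sketch (a hypothetical helper, standard library only) of how a test producer might shape each message so that its keys match the fields defined in schema-stream.json:

```python
import json
import time
import uuid
from typing import Optional


def make_event(count: int, ts_ms: Optional[int] = None) -> bytes:
    """Encode one event whose keys match the fields in schema-stream.json."""
    if ts_ms is None:
        # The schema's ts column is a TIMESTAMP in 1:MILLISECONDS:EPOCH format.
        ts_ms = int(time.time() * 1000)
    event = {
        "uuid": str(uuid.uuid4()),  # dimension column, STRING
        "count": count,             # metric column, INT
        "ts": ts_ms,                # time column named in segmentsConfig.timeColumnName
    }
    return json.dumps(event).encode("utf-8")
```

Each returned value can be published to the events topic with whichever Kafka client you use, for example kafka-python's KafkaProducer.send (not shown; the client library is an assumption). With realtime.segment.flush.threshold.time set to 1m, segments should start appearing on HDFS shortly after events arrive.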

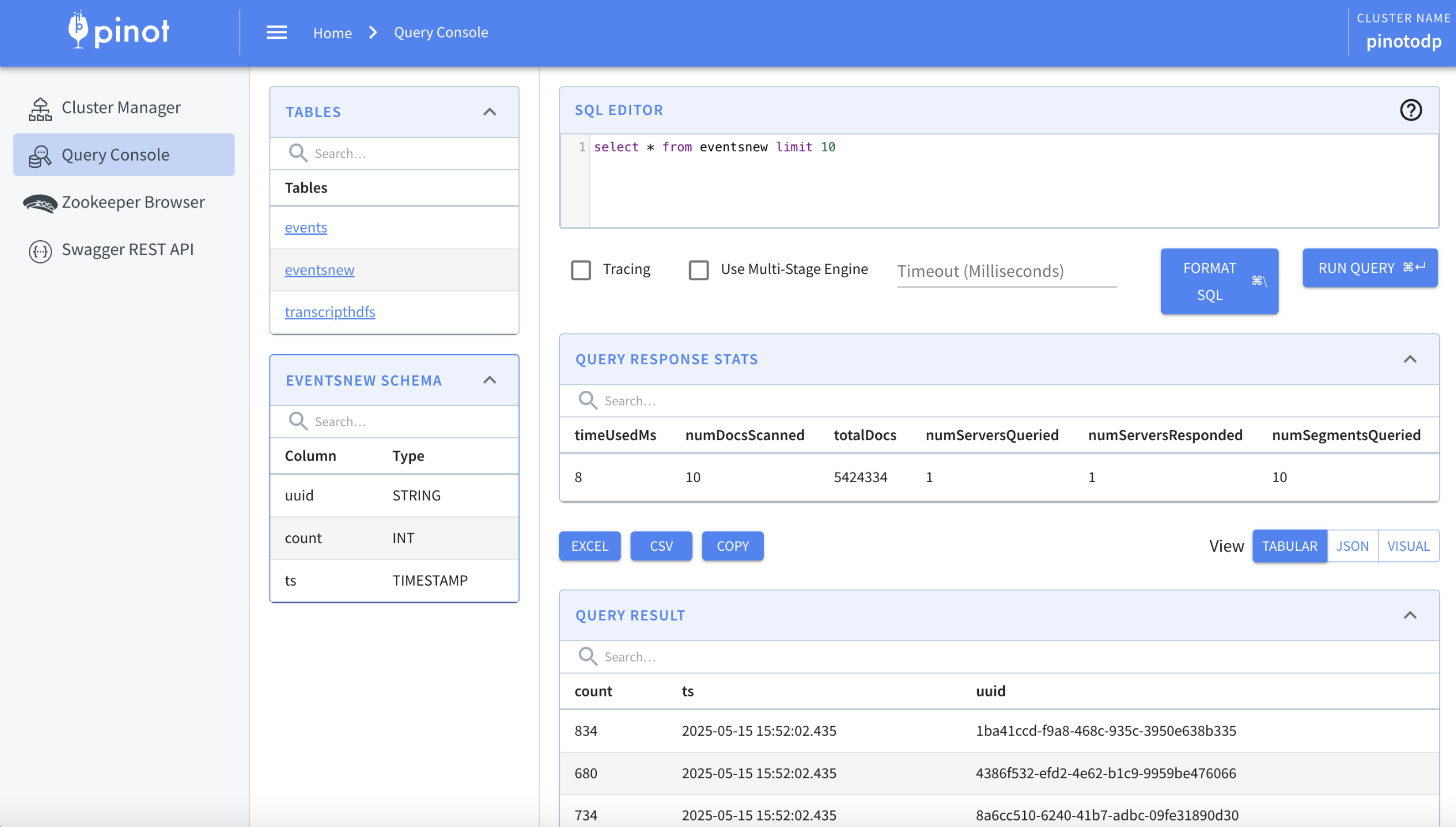

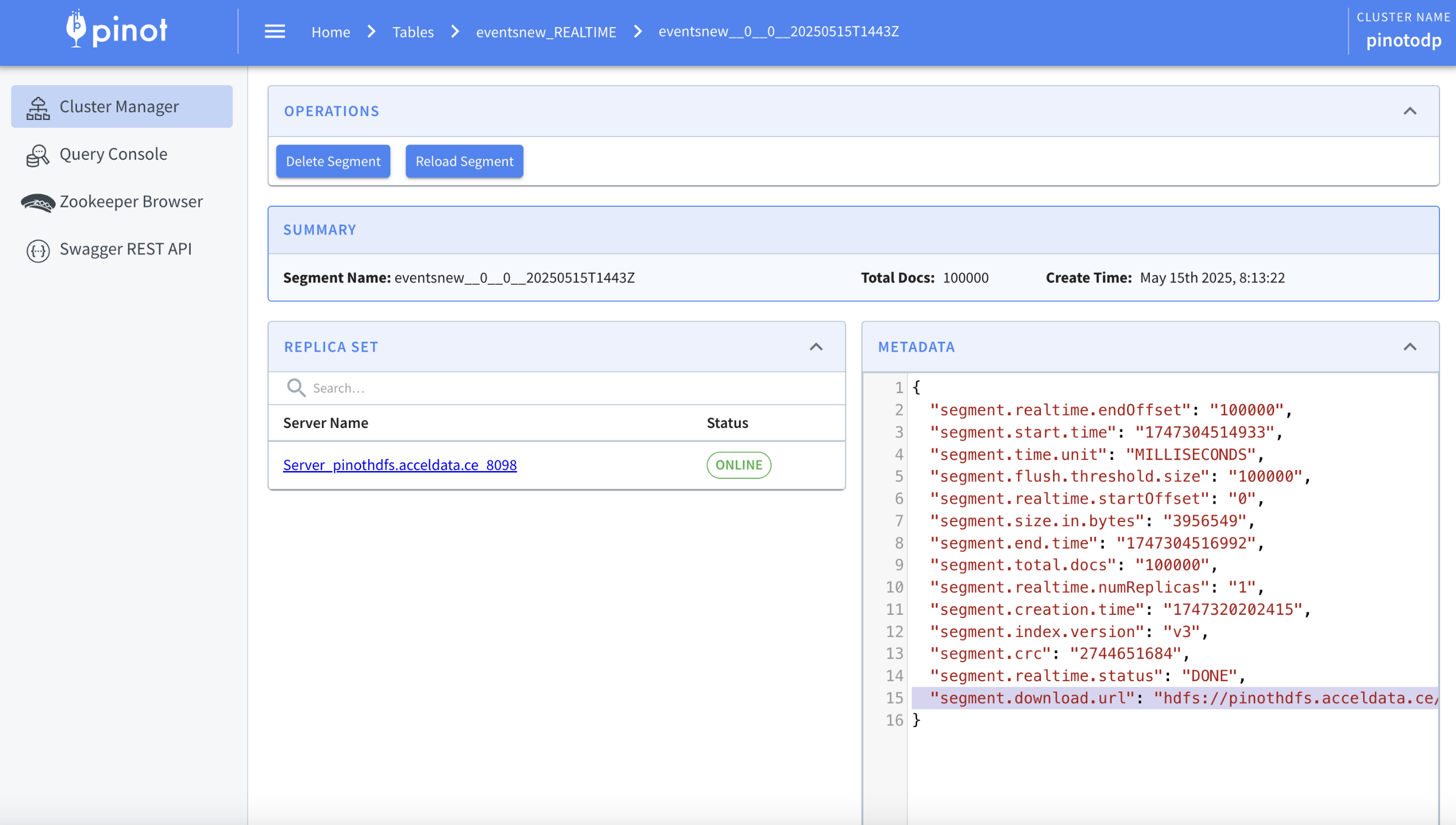

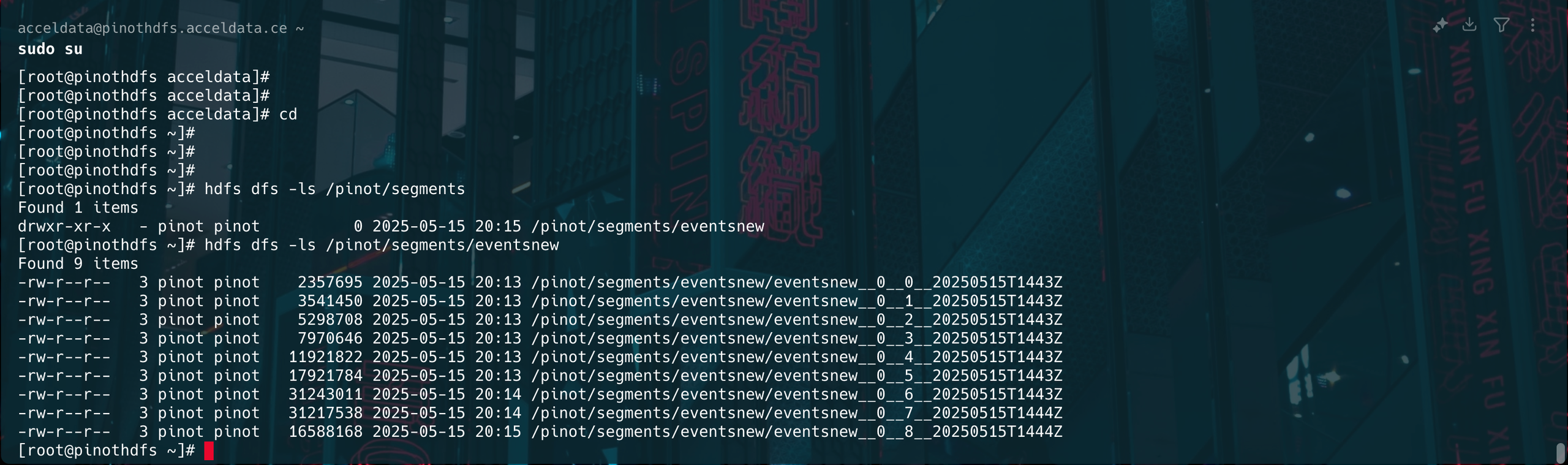

Verify the Segments on HDFS Path

The image below shows the segments stored under the HDFS path.
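The same check can be done without the UI. From a node with the HDFS client, hdfs dfs -ls hdfs://pinothdfs.acceldata.ce/pinot/segments/eventsnew lists the directory directly. Alternatively, here is a minimal Python sketch that uses the WebHDFS REST API (LISTSTATUS). It assumes that segments for a table are written under the controller.data.dir path followed by the raw table name (for example /pinot/segments/eventsnew), that WebHDFS is enabled, that the NameNode HTTP address is http://<namenode-host>:9870 (the Hadoop 3 default port; adjust for your cluster), and that the cluster is not Kerberized, since a secured cluster needs SPNEGO authentication, which this sketch does not implement. All function names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import quote


def table_segment_dir(data_dir_path: str, raw_table_name: str) -> str:
    """Assumed per-table deep-storage directory: <controller.data.dir path>/<raw table name>."""
    return data_dir_path.rstrip("/") + "/" + raw_table_name


def liststatus_url(namenode_http: str, hdfs_path: str) -> str:
    """Build a WebHDFS LISTSTATUS URL for an absolute HDFS path."""
    return f"{namenode_http.rstrip('/')}/webhdfs/v1{quote(hdfs_path)}?op=LISTSTATUS"


def list_entries(liststatus_response: dict) -> list:
    """Extract sorted entry names from a parsed LISTSTATUS JSON response."""
    statuses = liststatus_response["FileStatuses"]["FileStatus"]
    return sorted(s["pathSuffix"] for s in statuses)


def fetch_json(url: str) -> dict:
    """GET a URL and parse its JSON body (no Kerberos/SPNEGO support)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)
```

On an unsecured cluster, list_entries(fetch_json(liststatus_url("http://<namenode-host>:9870", table_segment_dir("/pinot/segments", "eventsnew")))) returns the names of the entries in the table's segment directory.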

Last updated on Jan 12, 2026
