ODP 3.3.6.3-1

Store MLflow Artifacts in S3


You can configure MLflow to store experiment artifacts such as models, images, and logs in an Amazon S3 bucket for scalable and durable storage. This is especially useful in distributed environments where multiple users or systems access the same artifacts.

Access S3 Object Using AWS CLI

Follow these steps to access, download, or view an image stored in S3, for example: s3://mlflow-artifacts-hv/my-folder/image.png.

- Verify AWS CLI installation

Bash

    aws --version
    # If not installed:
    # On Ubuntu: sudo apt install awscli
    # On Mac: brew install awscli

- Configure the AWS CLI (if it is not already configured).

Bash

    aws configure

Provide the following details when prompted:

- AWS Access Key ID

- AWS Secret Access Key

- Default Region (e.g., us-east-1)

- Default output format (optional, e.g., json)

- List files in S3 path.

Bash

    aws s3 ls s3://mlflow-artifacts-hv/my-folder/

- Download image from S3.

Bash

    aws s3 cp s3://mlflow-artifacts-hv/my-folder/image.png .

The trailing dot is the destination, so this command downloads the image to your current working directory.

- View the image.

Bash

    xdg-open image.png   # Linux
    open image.png       # macOS

- (Optional) Generate a pre-signed URL.

If the bucket is private, you can generate a temporary URL that lets a browser open the object without AWS credentials.

Bash

    aws s3 presign s3://mlflow-artifacts-hv/my-folder/image.png --expires-in 3600

This creates a pre-signed URL that is valid for 1 hour (3600 seconds). Anyone who has the URL can read the object until it expires.
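The CLI steps above can also be sketched in Python with boto3, which may be convenient inside scripts that already use MLflow. This is a minimal sketch, not part of the original procedure: the helper names (`split_s3_uri`, `list_keys`, `download`, `presign`, `demo`) are hypothetical, and the S3 client is passed in as an argument so the helpers stay easy to test. Note that `list_keys` returns every key under the prefix (up to the first 1000), which is closer to `aws s3 ls --recursive` than to a single-level listing.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split 's3://bucket/some/key' into ('bucket', 'some/key')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def list_keys(s3_client, uri):
    """Return the object keys stored under the URI's prefix (first page only)."""
    bucket, prefix = split_s3_uri(uri)
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    return [obj["Key"] for obj in response.get("Contents", [])]


def download(s3_client, uri, local_path):
    """Download one object to local_path, like `aws s3 cp <uri> <local_path>`."""
    bucket, key = split_s3_uri(uri)
    s3_client.download_file(bucket, key, local_path)
    return local_path


def presign(s3_client, uri, expires_in=3600):
    """Create a temporary GET URL, like `aws s3 presign --expires-in`."""
    bucket, key = split_s3_uri(uri)
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )


def demo():
    """Run the three steps against the example bucket used on this page."""
    import boto3  # assumption: boto3 is installed and credentials are configured

    client = boto3.client("s3")
    uri = "s3://mlflow-artifacts-hv/my-folder/image.png"
    print(list_keys(client, "s3://mlflow-artifacts-hv/my-folder/"))
    download(client, uri, "image.png")
    print(presign(client, uri, expires_in=3600))
```

Calling `demo()` requires real credentials and access to the bucket; the individual helpers work with any object that exposes the same three boto3 client methods.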

Example Use Cases

| Task | Command |
|---|---|
| List bucket | aws s3 ls s3://mlflow-artifacts-hv/ |
| List folder | aws s3 ls s3://mlflow-artifacts-hv/my-folder/ |
| Download image | aws s3 cp s3://mlflow-artifacts-hv/my-folder/image.png . |
| Generate pre-signed URL (valid for 3600 seconds by default) | aws s3 presign s3://mlflow-artifacts-hv/yourfile |

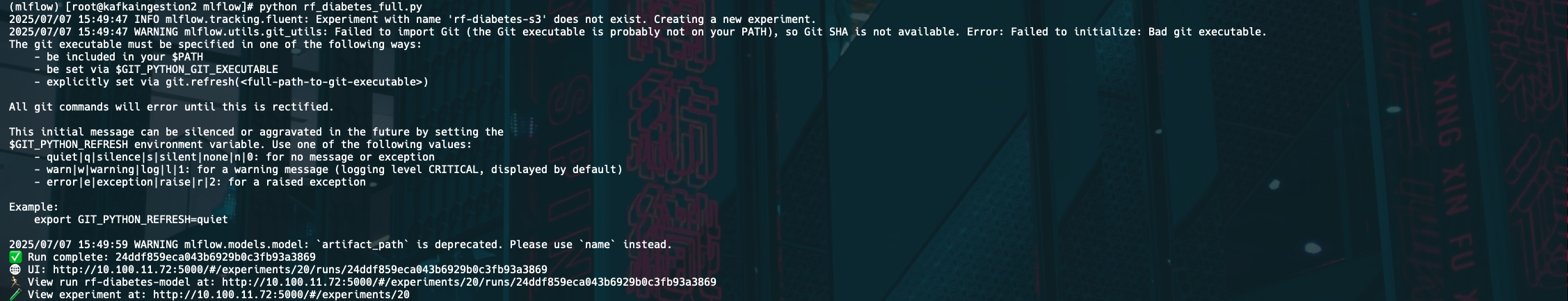

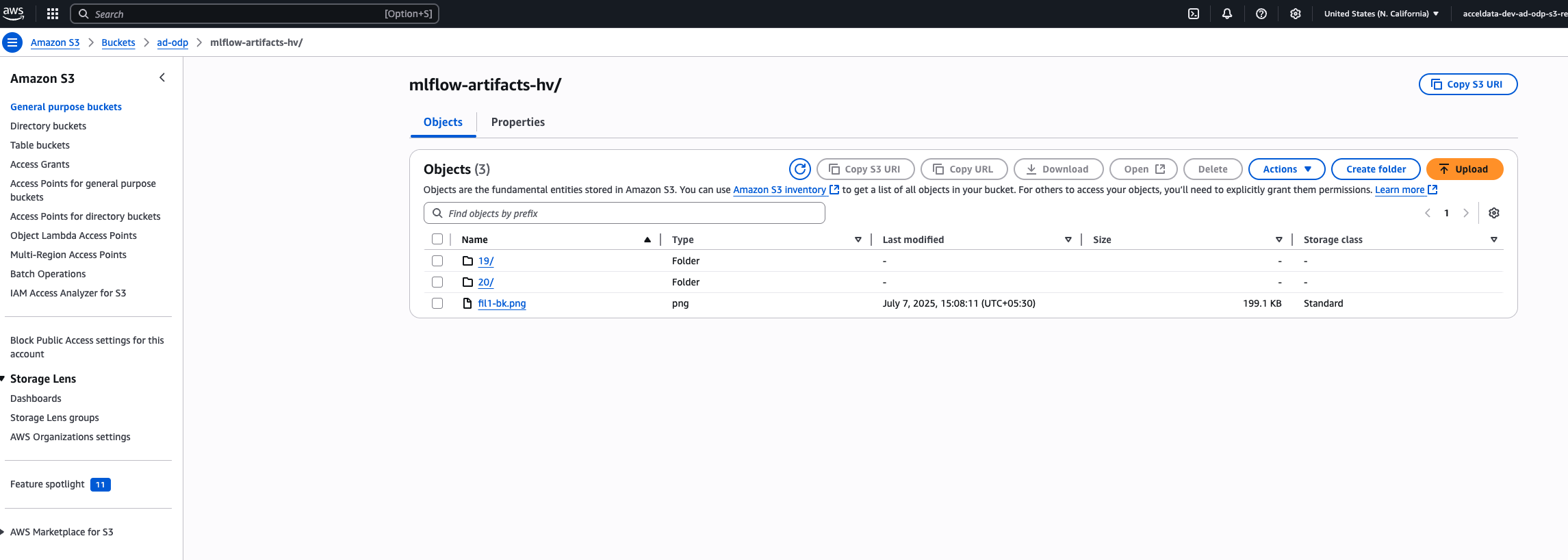

The following example shows how to configure MLflow to store artifacts in S3, log artifacts from a training run, and verify the result with the AWS CLI.

Export the AWS credentials and region, replacing the {} placeholders with your own values:

Bash

    export AWS_ACCESS_KEY_ID={}
    export AWS_SECRET_ACCESS_KEY={}
    export AWS_DEFAULT_REGION=us-east-1

Start the MLflow server with an S3 location as the default artifact root:

Bash

    mlflow server \
      --backend-store-uri mysql+pymysql://mlflow:mlflow@10.100.11.70:3306/mlflow \
      --default-artifact-root s3://ad-odp/mlflow-artifacts-hv/ \
      --host 0.0.0.0 \
      --port 5000

Install boto3, which MLflow uses to read from and write to S3:

Bash

    pip install boto3

Train a model and log its parameters, metrics, and artifacts:

Python

    import joblib
    import matplotlib.pyplot as plt
    import mlflow
    import mlflow.sklearn
    import pandas as pd
    from mlflow.models import infer_signature
    from sklearn.datasets import load_diabetes
    from sklearn.ensemble import RandomForestRegressor
    from sklearn.metrics import mean_squared_error, r2_score
    from sklearn.model_selection import train_test_split

    # 📌 Set tracking URI and experiment
    mlflow.set_tracking_uri("http://10.100.11.72:5000")
    mlflow.set_experiment("rf-diabetes-s3")

    # 📦 Load dataset
    data = load_diabetes()
    X = pd.DataFrame(data.data, columns=data.feature_names)
    y = pd.Series(data.target, name="target")
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # 🎯 Start MLflow run
    with mlflow.start_run(run_name="rf-diabetes-model") as run:
        # 🔧 Train model
        model = RandomForestRegressor(n_estimators=100, max_depth=6, random_state=42)
        model.fit(X_train, y_train)

        # 📈 Predict and evaluate
        preds = model.predict(X_test)
        mse = mean_squared_error(y_test, preds)
        r2 = r2_score(y_test, preds)

        # 📊 Log params, metrics
        mlflow.log_param("n_estimators", 100)
        mlflow.log_param("max_depth", 6)
        mlflow.log_metric("mse", mse)
        mlflow.log_metric("r2", r2)

        # 💾 Save model file and upload it as an artifact
        model_path = "rf_model.pkl"
        joblib.dump(model, model_path)
        mlflow.log_artifact(model_path)

        # 📉 Save and log plot
        plt.figure()
        plt.scatter(y_test, preds)
        plt.xlabel("Actual")
        plt.ylabel("Predicted")
        plt.title("Actual vs Predicted")
        plot_path = "actual_vs_predicted.png"
        plt.savefig(plot_path)
        mlflow.log_artifact(plot_path)

        # ✨ Log model with signature
        mlflow.sklearn.log_model(
            sk_model=model,
            artifact_path="sklearn-model",
            input_example=X_test[:5],
            signature=infer_signature(X_test, preds),
        )

        print(f"✅ Run complete: {run.info.run_id}")
        print(f"🌐 UI: http://10.100.11.72:5000/#/experiments/{run.info.experiment_id}/runs/{run.info.run_id}")
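Before starting the server or running the training script, it can help to confirm that the exported AWS variables are actually visible to the process. The check below is a minimal sketch under two assumptions: credentials are supplied through environment variables (boto3 can also read them from the files written by `aws configure`, in which case this check is not needed), and a literal `{}` means the placeholder was never replaced. The names `REQUIRED_AWS_VARS` and `missing_aws_vars` are hypothetical.

```python
import os

# Variables exported in the example on this page
REQUIRED_AWS_VARS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_DEFAULT_REGION",
)


def missing_aws_vars(environ=None):
    """Return the required variables that are unset, empty, or still '{}'."""
    env = os.environ if environ is None else environ
    missing = []
    for name in REQUIRED_AWS_VARS:
        value = env.get(name, "")
        if not value or value == "{}":
            missing.append(name)
    return missing


def check_or_exit():
    """Stop early with a readable message instead of a late S3 upload error."""
    missing = missing_aws_vars()
    if missing:
        raise SystemExit("Missing AWS settings: " + ", ".join(missing))
```

Calling `check_or_exit()` at the top of a training script surfaces a configuration problem before any model is trained.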

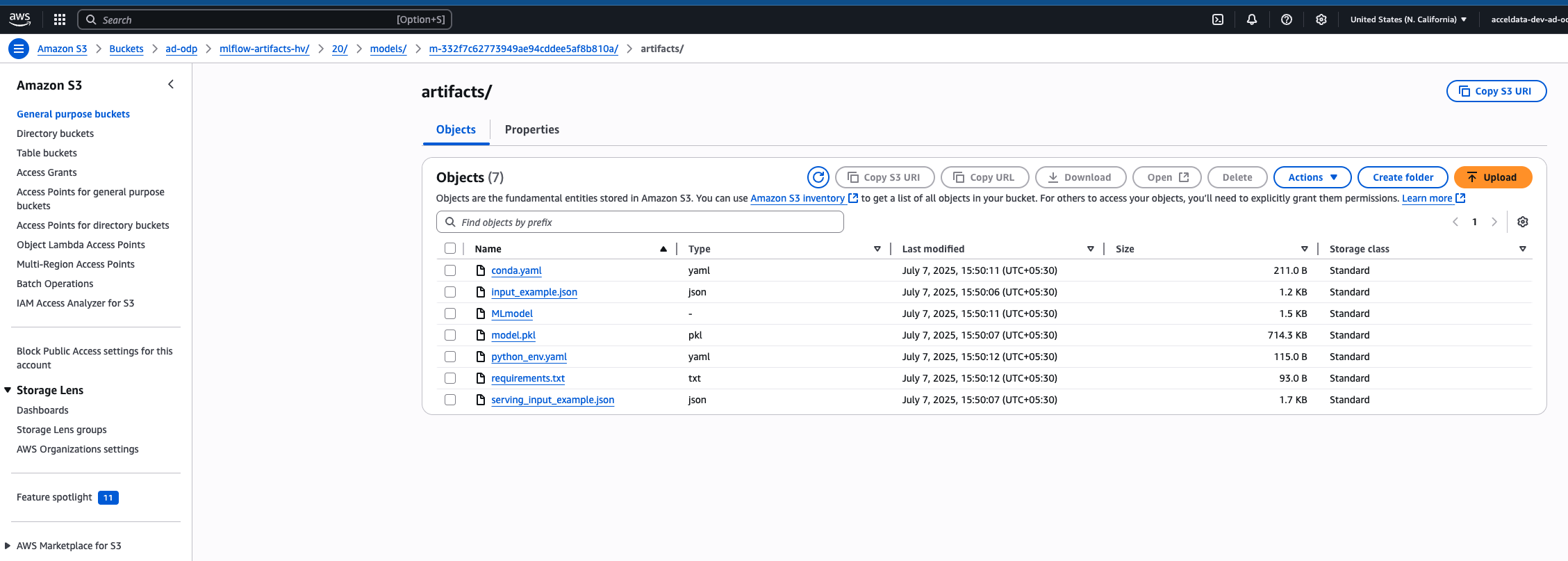

After the run completes, list the bucket to confirm that the artifacts were uploaded. In the output, PRE marks a prefix (folder) and is not part of the path, which is why the attempts that include "PRE 20" in the URI fail or return nothing.

Bash

    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/
                               PRE 19/
                               PRE 20/
    2025-07-07 15:06:14          0
    2025-07-07 15:08:11     203887 fil1-bk.png
    [root@kafkaingestion2 ~]#
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/PRE 20
    Unknown options: 20
    [root@kafkaingestion2 ~]# aws s3 ls "s3://ad-odp/mlflow-artifacts-hv/PRE 20"
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/
                               PRE 24ddf859eca043b6929b0c3fb93a3869/
                               PRE models/
    [root@kafkaingestion2 ~]#
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/models
                               PRE models/
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/models/
                               PRE m-332f7c62773949ae94cddee5af8b810a/
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/models/m-332f7c62773949ae94cddee5af8b810a/
                               PRE artifacts/
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/models/m-332f7c62773949ae94cddee5af8b810a/artifacts
                               PRE artifacts/
    [root@kafkaingestion2 ~]# aws s3 ls s3://ad-odp/mlflow-artifacts-hv/20/models/m-332f7c62773949ae94cddee5af8b810a/artifacts/
    2025-07-07 15:50:11       1559 MLmodel
    2025-07-07 15:50:11        211 conda.yaml
    2025-07-07 15:50:06       1183 input_example.json
    2025-07-07 15:50:07     731401 model.pkl
    2025-07-07 15:50:12        115 python_env.yaml
    2025-07-07 15:50:12         93 requirements.txt
    2025-07-07 15:50:07       1762 serving_input_example.json
    [root@kafkaingestion2 ~]#
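The listing above mixes two kinds of lines: prefixes, shown as `PRE name/`, and objects, shown as date, time, size, and name. The following sketch separates the two so a script can descend into prefixes using only the name and never the `PRE` marker. It is an illustration that assumes the output layout shown in the session above; `S3Entry` and `parse_s3_ls` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class S3Entry(NamedTuple):
    kind: str            # "prefix" or "object"
    name: str            # prefix name (with trailing slash) or object name
    size: Optional[int]  # size in bytes, None for prefixes


PREFIX_RE = re.compile(r"^\s*PRE (.+)$")
OBJECT_RE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\s+(\d+)\s?(.*)$")


def parse_s3_ls(output):
    """Turn `aws s3 ls` output into S3Entry records, skipping other lines."""
    entries = []
    for line in output.splitlines():
        prefix = PREFIX_RE.match(line)
        if prefix:
            entries.append(S3Entry("prefix", prefix.group(1).strip(), None))
            continue
        obj = OBJECT_RE.match(line)
        if obj:
            entries.append(S3Entry("object", obj.group(2), int(obj.group(1))))
    return entries


sample = (
    "                           PRE 19/\n"
    "                           PRE 20/\n"
    "2025-07-07 15:08:11     203887 fil1-bk.png\n"
)
for entry in parse_s3_ls(sample):
    print(entry.kind, entry.name, entry.size)
```

Lines that match neither pattern, such as shell prompts or the `Unknown options: 20` error, are ignored.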

The following scripts are small smoke tests for artifact logging against local, HDFS, and S3 artifact stores.

Bash

    cat localtest.py
    import mlflow
    import os

    # Optional: set the MLflow tracking URI (only needed when logging to a remote tracking server)
    mlflow.set_tracking_uri("http://10.100.11.39:5000")

    # Start an experiment (or get existing one)
    experiment_name = "artifact_test"
    mlflow.set_experiment(experiment_name)

    with mlflow.start_run(run_name="simple_artifact_test") as run:
        # Log a parameter
        mlflow.log_param("param1", 123)

        # Log a metric
        mlflow.log_metric("accuracy", 0.95)

        # Log an artifact (e.g., a simple text file)
        os.makedirs("artifacts", exist_ok=True)
        with open("artifacts/output.txt", "w") as f:
            f.write("This is a test artifact for MLflow.")
        mlflow.log_artifacts("artifacts")

        print(f"Run ID: {run.info.run_id}")
        print("Check the MLflow UI or artifact location for 'output.txt'.")

Bash

    cat hdfstest.py
    import mlflow
    import os
    from urllib.parse import urlparse

    # Optional: set the tracking URI if you are using a remote MLflow server
    mlflow.set_tracking_uri("http://10.100.11.39:5000")

    # Create or load an experiment
    experiment_name = "hdfs_artifact_test"
    mlflow.set_experiment(experiment_name)

    with mlflow.start_run(run_name="hdfs_debug_run") as run:
        print(f"✅ Experiment Name: {experiment_name}")
        print(f"📌 Run ID: {run.info.run_id}")
        print(f"📁 Artifact URI: {mlflow.get_artifact_uri()}")

        # Log sample param and metric
        mlflow.log_param("framework", "mlflow")
        mlflow.log_metric("accuracy", 0.99)

        # Create and log a test artifact
        os.makedirs("artifacts", exist_ok=True)
        artifact_file = "artifacts/test_hdfs_artifact.txt"
        with open(artifact_file, "w") as f:
            f.write("This is a test file to verify HDFS artifact logging.")
        mlflow.log_artifacts("artifacts")

        print("\n🎯 Artifact log complete.")
        print("📂 Check the above HDFS path or run this on your shell:")
        # Use only the path part of the URI so that any host:port is not treated as a directory
        print(f"hdfs dfs -ls -R {urlparse(mlflow.get_artifact_uri()).path}")

Bash

    cat s3test.py
    import mlflow
    import os

    # Optional: set the MLflow tracking URI if you are using a remote MLflow server
    mlflow.set_tracking_uri("http://10.100.11.39:5000")

    # Set the experiment name
    experiment_name = "s3_artifact_test"
    mlflow.set_experiment(experiment_name)

    with mlflow.start_run(run_name="s3_debug_run") as run:
        print(f"✅ Experiment Name: {experiment_name}")
        print(f"📌 Run ID: {run.info.run_id}")
        print(f"📁 Artifact URI: {mlflow.get_artifact_uri()}")

        # Log param and metric
        mlflow.log_param("storage", "s3")
        mlflow.log_metric("accuracy", 0.92)

        # Write and log a test artifact
        os.makedirs("artifacts", exist_ok=True)
        with open("artifacts/s3_test_file.txt", "w") as f:
            f.write("S3 artifact upload test for bucket ad-odp/mlflow-artifacts-hv.")
        mlflow.log_artifacts("artifacts")

        print("\n🎯 Artifact logged successfully.")
        print("🔍 Check it via AWS CLI:")
        print("aws s3 ls s3://ad-odp/mlflow-artifacts-hv/ --recursive")
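As an alternative to checking the bucket with the AWS CLI, the artifacts of a run can be listed through the MLflow tracking server itself. The sketch below walks the artifact tree with `MlflowClient.list_artifacts`, recursing into directories. It is a hedged illustration: `walk_artifacts` and `show_run_artifacts` are hypothetical helper names, the run ID must come from one of the test scripts above, and the tracking URI is assumed to be the one those scripts use.

```python
def walk_artifacts(client, run_id, path=None):
    """Yield (artifact_path, size_in_bytes) for every file logged to a run."""
    for info in client.list_artifacts(run_id, path):
        if info.is_dir:
            # Directories have no size of their own; descend into them
            yield from walk_artifacts(client, run_id, info.path)
        else:
            yield info.path, info.file_size


def show_run_artifacts(run_id, tracking_uri="http://10.100.11.39:5000"):
    """Print every artifact of a run; needs mlflow and a reachable server."""
    from mlflow.tracking import MlflowClient  # imported lazily on purpose

    client = MlflowClient(tracking_uri=tracking_uri)
    for artifact_path, size in walk_artifacts(client, run_id):
        print(f"{str(size):>10}  {artifact_path}")
```

For a run created by s3test.py, `show_run_artifacts("<run id printed by the script>")` would be expected to list s3_test_file.txt if the upload succeeded.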


Last updated on Aug 6, 2025
